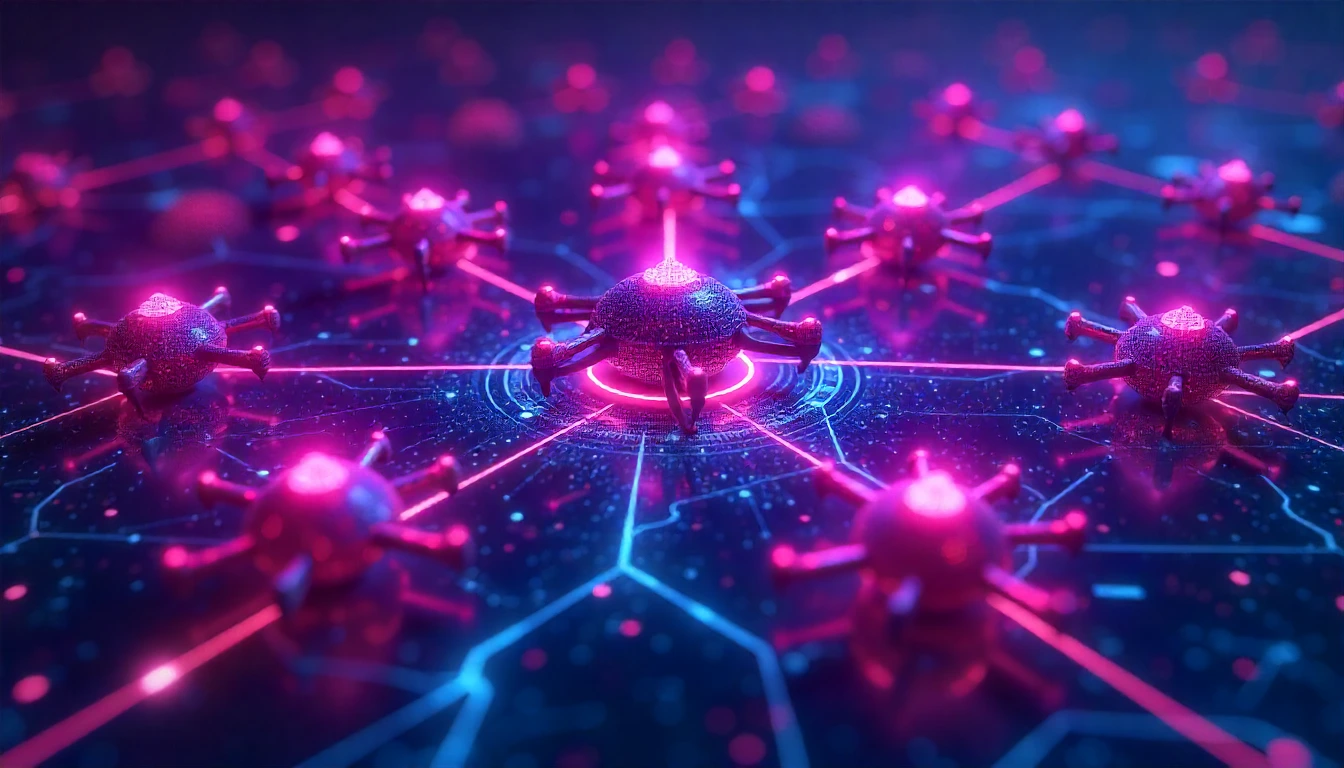

Distributed scraping is a data extraction method in which multiple networked devices, or nodes, work in parallel to collect data from websites or other online resources. By dividing the data collection workload among several machines, distributed scraping enhances the efficiency, scalability, and reliability of web scraping tasks, especially for large-scale operations involving extensive data. This approach is commonly employed in data science, big data analytics, and machine learning, where massive datasets from diverse online sources are needed for analysis, training, and model development.

Characteristics of Distributed Scraping

- Parallelism and Scalability:

Distributed scraping operates on a parallel architecture where multiple scraping agents or nodes perform data extraction simultaneously. By breaking the scraping task into parts and assigning them to different agents, distributed scraping lets throughput grow roughly in proportion to the number of nodes. This is especially beneficial when gathering data from large or frequently updated sources, as it significantly reduces the time needed to complete scraping tasks.

- Load Distribution:

The total scraping workload is divided among several nodes, each handling a specific portion of the target URLs, websites, or pages. Load distribution not only improves scraping efficiency but also reduces the risk of being blocked by servers that monitor and restrict requests from a single source. When the nodes run on different networks, requests are dispersed across various IP addresses and regions, making the process less detectable.
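Dividing the workload requires a rule for which node owns which URL. One simple option, shown here as a hypothetical sketch rather than a method the text prescribes, is to hash each URL and take the result modulo the number of nodes, so every node can compute the same assignment without asking a coordinator. The helper names `assign_node` and `partition` are illustrative assumptions.

```python
import hashlib
from collections import defaultdict


def assign_node(url: str, num_nodes: int) -> int:
    """Map a URL to a node index with a stable hash (hypothetical helper)."""
    # SHA-256 is used instead of Python's built-in hash(), which is randomized
    # per process for str, so separate machines would disagree on the result.
    digest = hashlib.sha256(url.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_nodes


def partition(urls, num_nodes):
    """Group URLs into per-node work lists keyed by node index."""
    shards = defaultdict(list)
    for url in urls:
        shards[assign_node(url, num_nodes)].append(url)
    return dict(shards)
```

A limitation of plain modulo hashing is that changing `num_nodes` reassigns most URLs; consistent hashing is the usual alternative when nodes join and leave often.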

- Fault Tolerance and Reliability:

In a distributed scraping system, failures in individual nodes can be isolated without disrupting the entire scraping operation. If one node fails, other nodes can redistribute its workload or continue independently. This architecture improves reliability, ensuring that data extraction continues smoothly even if some nodes encounter issues, such as network failures or IP bans.
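Before its workload can be redistributed, a failed node has to be noticed. One common approach is heartbeats: each node reports in periodically, and a node that stays silent longer than a timeout is treated as failed. The sketch below assumes that approach; the class name `NodeMonitor`, the timeout, and the round-robin handover policy are hypothetical choices for illustration.

```python
import time


class NodeMonitor:
    """Track node heartbeats and hand work from silent nodes to healthy ones.

    Hypothetical sketch: the timeout and round-robin reassignment are assumptions.
    """

    def __init__(self, timeout_s: float, clock=time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock          # injectable so the logic can be tested
        self.last_seen = {}         # node -> time of its last heartbeat
        self.assignments = {}       # node -> list of URLs it currently owns

    def heartbeat(self, node: str) -> None:
        self.last_seen[node] = self.clock()
        self.assignments.setdefault(node, [])

    def assign(self, node: str, urls) -> None:
        self.assignments.setdefault(node, []).extend(urls)

    def failed_nodes(self):
        now = self.clock()
        return [n for n, t in self.last_seen.items() if now - t > self.timeout_s]

    def reassign_failed(self):
        """Move URLs owned by failed nodes to healthy nodes, round-robin."""
        failed = set(self.failed_nodes())
        healthy = [n for n in self.last_seen if n not in failed]
        if not healthy:
            return failed  # no node left to take the work; caller decides
        orphaned = []
        for node in failed:
            orphaned.extend(self.assignments.pop(node, []))
            del self.last_seen[node]
        for i, url in enumerate(orphaned):
            self.assignments[healthy[i % len(healthy)]].append(url)
        return failed
```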

- Distributed Datastores:

Data gathered from multiple nodes needs to be stored in a coordinated manner. Distributed datastores, such as NoSQL databases (e.g., MongoDB, Cassandra) or cloud-based storage systems (e.g., Amazon S3, Google Cloud Storage), are often used to aggregate and manage scraped data. By utilizing distributed storage, data can be efficiently accessed, queried, and processed across multiple systems in real time.
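One way to keep writes from many nodes coordinated is to key each record by a stable ID derived from its URL, so a retried or duplicated task overwrites its earlier result instead of adding a second copy. In the sketch below, a lock-guarded in-memory dict is a hypothetical stand-in for the shared datastore, and `record_key` and `save_result` are illustrative names. With MongoDB, the analogous operation in PyMongo would be an upsert such as `collection.replace_one({"_id": key}, doc, upsert=True)`.

```python
import hashlib
import threading
from concurrent.futures import ThreadPoolExecutor


class InMemoryStore:
    """Hypothetical stand-in for a shared datastore such as MongoDB or Cassandra."""

    def __init__(self):
        self._docs = {}
        self._lock = threading.Lock()

    def upsert(self, key, doc):
        # Writing by key is idempotent: repeating the write leaves one record.
        with self._lock:
            self._docs[key] = doc

    def count(self):
        with self._lock:
            return len(self._docs)


def record_key(url):
    """Derive a stable document ID from the URL (assumed deduplication rule)."""
    return hashlib.sha256(url.encode("utf-8")).hexdigest()


def save_result(store, node, url, data):
    store.upsert(record_key(url), {"url": url, "node": node, "data": data})


if __name__ == "__main__":
    store = InMemoryStore()
    urls = [f"https://example.com/item/{i}" for i in range(50)]
    # Two nodes write results for the same URLs, e.g. after a retry.
    with ThreadPoolExecutor(max_workers=4) as pool:
        for node in ("node-1", "node-2"):
            for url in urls:
                pool.submit(save_result, store, node, url, {"length": len(url)})
    print(store.count())  # prints 50, not 100
```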

- Load Balancing and IP Rotation:

Distributed scraping often employs load balancers to manage requests across nodes and IP rotation techniques to assign different IP addresses to each node or scraping request. Load balancing ensures even distribution of requests across available nodes, while IP rotation makes it harder for web servers to tie requests to a single source, reducing the likelihood of IP-based bans or CAPTCHAs.
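Round-robin selection is the simplest policy that gives both an even spread of requests over nodes and a rotation of outgoing IP addresses. The sketch below applies it to both; the node names and proxy addresses are hypothetical placeholders, and `RoundRobin` and `plan_requests` are illustrative helpers, not part of any particular library.

```python
import itertools
import threading


class RoundRobin:
    """Thread-safe round-robin selector over a fixed list (nodes or proxies)."""

    def __init__(self, items):
        if not items:
            raise ValueError("RoundRobin needs at least one item")
        self._cycle = itertools.cycle(list(items))
        self._lock = threading.Lock()

    def pick(self):
        # itertools.cycle is not documented as thread-safe, so guard it.
        with self._lock:
            return next(self._cycle)


def plan_requests(urls, nodes, proxies):
    """Pair each URL with a node and an outgoing proxy, rotating both."""
    node_rr, proxy_rr = RoundRobin(nodes), RoundRobin(proxies)
    return [(url, node_rr.pick(), proxy_rr.pick()) for url in urls]


if __name__ == "__main__":
    plan = plan_requests(
        [f"https://example.com/p/{i}" for i in range(4)],
        nodes=["node-1", "node-2"],
        proxies=["http://proxy-a:8080", "http://proxy-b:8080", "http://proxy-c:8080"],
    )
    for row in plan:
        print(row)
```

If the nodes fetch with the `requests` library, the chosen proxy can be passed as `requests.get(url, proxies={"http": proxy, "https": proxy})`. Weighted or least-loaded selection is a common refinement when nodes differ in capacity.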

- Concurrency Control and Task Coordination:

Concurrency control mechanisms are essential to manage the simultaneous operations of multiple nodes. Distributed scraping platforms frequently use task queues and workflow orchestrators, such as Celery or Apache Airflow, often together with a message broker such as Apache Kafka, to coordinate task assignment, retry failed tasks, and keep data consistent across all nodes. Task coordinators also handle dependencies between tasks, ensuring efficient progression through the scraping process.
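Two behaviors that task frameworks like Celery provide, bounded concurrency and automatic retries with backoff, can be sketched with the standard library alone. This is not the API of Celery or Airflow; it is a hypothetical illustration in which `fetch` is any user-supplied function, the concurrency limit comes from the thread pool size, and failed attempts are retried after delays that double each time.

```python
import random
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def with_retries(task, max_attempts=3, base_delay=0.1, sleep=time.sleep):
    """Run task(); on an exception, retry with exponential backoff plus jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == max_attempts:
                raise
            # Delays grow as base, 2*base, 4*base, ... with up to 10% jitter.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))


def run_all(urls, fetch, max_concurrency=4, **retry_kwargs):
    """Fetch every URL with at most max_concurrency in flight; collect failures."""
    results, failures = {}, {}
    lock = threading.Lock()

    def worker(url):
        try:
            value = with_retries(lambda: fetch(url), **retry_kwargs)
            with lock:
                results[url] = value
        except Exception as exc:
            with lock:
                failures[url] = exc

    with ThreadPoolExecutor(max_workers=max_concurrency) as pool:
        list(pool.map(worker, urls))
    return results, failures
```

Adding random jitter keeps many workers that failed at the same moment from retrying in lockstep against the same server.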

Architecture of Distributed Scraping

Distributed scraping typically relies on a master-slave (also called coordinator-worker) architecture, where a master node oversees task distribution and status monitoring while slave nodes execute the scraping tasks. The master node coordinates with slave nodes, dividing URLs, handling retries, and consolidating results. A centralized task queue lets the master allocate tasks to nodes dynamically, so idle nodes pick up new work as soon as they finish. In addition, some architectures use a peer-to-peer model, where nodes share data directly, minimizing the dependency on a central coordinator.

In a typical setup, the process flow is as follows:

- The master node or task scheduler receives a list of URLs or target resources.

- The task scheduler assigns specific URLs to each slave node.

- Each slave node makes requests to its assigned URLs, extracts data, and sends it to a distributed datastore or the master node.

- If a node fails, the master node detects the failure and reallocates tasks to other nodes.
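The process flow above can be sketched on a single machine, with threads standing in for slave nodes and a shared queue standing in for the master's task queue. In this hypothetical sketch, a failure is modeled as an exception that the worker reports; the failed URL goes back on the queue, where any free worker can claim it, until `max_attempts` is reached. Real systems also need heartbeats or task leases to catch nodes that fail silently. The names `run_master` and `scrape` are illustrative assumptions.

```python
import queue
import threading


def run_master(urls, num_workers, scrape, max_attempts=3):
    """Distribute URLs to worker threads and requeue any URL whose attempt fails."""
    tasks = queue.Queue()
    results, failed = {}, []
    lock = threading.Lock()

    def worker(worker_id):
        while True:
            item = tasks.get()
            if item is None:                # sentinel: no more work
                tasks.task_done()
                return
            url, attempt = item
            try:
                data = scrape(worker_id, url)
                with lock:
                    results[url] = (worker_id, data)
            except Exception:
                if attempt < max_attempts:
                    # Requeue before task_done() so tasks.join() keeps waiting.
                    tasks.put((url, attempt + 1))
                else:
                    with lock:
                        failed.append(url)
            finally:
                tasks.task_done()

    for url in urls:                        # master receives the URL list
        tasks.put((url, 1))
    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_workers)]
    for t in threads:
        t.start()
    tasks.join()                            # wait for all tasks, including retries
    for _ in threads:
        tasks.put(None)
    for t in threads:
        t.join()
    return results, failed
```

Because workers pull tasks rather than receiving fixed batches, faster workers naturally take on more URLs, which matches the dynamic allocation described for the centralized task queue.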

Mathematical Model of Load Distribution

For a distributed scraping system with `N` nodes and `U` total URLs, each node’s workload can be determined by distributing URLs equally, or by more complex load-balancing techniques if nodes have varying capacities. If URLs are evenly distributed:

URLs_per_node = U / N

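This formula gives each node an equal share (rounded up to a whole URL when `U` is not divisible by `N`), ensuring balanced resource allocation. If each node sustains a throughput of `C` requests per second, the estimated time `T` for a node to finish its share is given by:

T = URLs_per_node / C = U / (N × C)

Because the nodes run in parallel, this is also the total wall-clock time. `T` is therefore inversely proportional to `N`: doubling the number of nodes roughly halves the scraping time. In practice the gain levels off once coordination overhead, network bandwidth, or the target sites' rate limits become the bottleneck.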
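The formulas can be checked numerically, together with the case of nodes with different capacities that the text mentions only briefly. In this sketch, `even_split` handles a remainder when `U` is not divisible by `N`, `weighted_split` assigns URLs in proportion to each node's rate `C_i` (largest-remainder rounding, integer rates assumed), and `completion_time` takes the slowest node's time, since the nodes run in parallel. All three function names are hypothetical.

```python
def even_split(total_urls, num_nodes):
    """Split U URLs over N nodes; the first U % N nodes get one extra URL."""
    base, extra = divmod(total_urls, num_nodes)
    return [base + 1 if i < extra else base for i in range(num_nodes)]


def weighted_split(total_urls, capacities):
    """Assign URLs in proportion to integer per-node rates C_i (largest remainder)."""
    total_c = sum(capacities)
    parts = [divmod(total_urls * c, total_c) for c in capacities]
    shares = [q for q, _ in parts]
    leftover = total_urls - sum(shares)
    # Give the remaining URLs to the nodes with the largest remainders.
    order = sorted(range(len(capacities)), key=lambda i: parts[i][1], reverse=True)
    for i in order[:leftover]:
        shares[i] += 1
    return shares


def completion_time(shares, capacities):
    """Wall-clock time T: nodes run in parallel, so the slowest node decides."""
    return max(s / c for s, c in zip(shares, capacities))
```

For example, with `U = 1000` URLs and four nodes at 10 requests per second each, `even_split(1000, 4)` gives 250 URLs per node and `T = 25` seconds, compared with 100 seconds for a single node. With rates of 10, 20, 30, and 40 requests per second, an even split still takes 25 seconds because the slowest node holds 250 URLs, while `weighted_split` assigns 100, 200, 300, and 400 URLs and finishes in 10 seconds.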

Distributed scraping is essential for applications in big data and machine learning that require high-volume, real-time data acquisition from web sources. For example, it is widely used in aggregating social media data, news, or financial information, where datasets need to be constantly updated to ensure relevant insights. This real-time data can then be fed into data pipelines, where it undergoes preprocessing, analysis, and integration for training machine learning models or supporting real-time analytics.

In AI-driven environments, distributed scraping also facilitates continuous data acquisition, supporting dynamic learning systems that rely on the latest data inputs. By deploying distributed scraping solutions, AI models can have timely access to diverse and large-scale datasets, enhancing the accuracy and relevance of predictions, recommendations, and analyses.

In summary, distributed scraping enables efficient, scalable, and fault-tolerant data extraction by coordinating multiple nodes to operate in parallel. This method is crucial for handling large datasets across numerous sources and is a foundational capability in data science, big data, and AI applications. The structured load distribution, fault tolerance, and task orchestration inherent to distributed scraping provide the resilience needed for robust, high-performance data acquisition systems.