It is not a miracle or a fraud but a modern possibility. Web scraping is a technology for obtaining a given type of data from websites by sending requests to a server over the HTTP protocol, typically with the GET method, from code. Business leads scraping consists of three operations: crawling the site, collecting the information, and analyzing it. Searches that took days by hand take minutes with a boxed or cloud business leads scraper. The main rule is to stay within publicly available information, which also keeps you within the law.
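To make the GET-request step concrete, here is a minimal sketch using only Python's standard library (`urllib.request`). The `USER_AGENT` string and the function names are hypothetical placeholders, not part of any particular scraper product. `build_get_request` works offline; `fetch_html` needs network access.

```python
from urllib.request import Request, urlopen

# Hypothetical bot identifier; a descriptive User-Agent lets site owners see who is visiting.
USER_AGENT = "LeadsResearchBot/0.1 (+https://example.com/bot-info)"


def build_get_request(url: str) -> Request:
    """Build an HTTP request with no body, which urllib sends as a GET."""
    return Request(url, headers={"User-Agent": USER_AGENT})


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Send the GET request and decode the response body as text."""
    with urlopen(build_get_request(url), timeout=timeout) as response:
        # Fall back to UTF-8 when the server does not declare a charset.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Usage: `html = fetch_html("https://example.com/")` returns the raw page markup, which the parsing stage then works on.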

Which is correct: scraping or parsing?

Since business leads scraping is essentially work with text, we first need to sort out the terms themselves. In Eastern Europe, the word "parsing" has become the common name for searching and collecting data from sites by specific parameters. Western B2B marketing experts call the same thing scraping. Neither can work without a crawler. So, who is who in this triangle?

- Parsing

It is a method of analysis in which business leads information is broken down into its constituent parts, and the result is then converted into a user-friendly format. Parsing automates the collection of business leads data in different forms: it identifies the address, sends a request, finds the required data in the page code, converts it, and exports it.
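The "find, convert, export" part of that sequence can be sketched with Python's built-in `html.parser` and `csv` modules. This is an illustrative assumption, not a specific vendor's parser: it treats `mailto:` links as the "required data", normalizes the addresses to lowercase, and exports them as CSV. `MailtoParser` and `parse_emails_to_csv` are hypothetical names.

```python
import csv
import io
from html.parser import HTMLParser


class MailtoParser(HTMLParser):
    """Collect unique e-mail addresses from <a href="mailto:..."> links."""

    def __init__(self):
        super().__init__()
        self.emails = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href") or ""  # attributes without a value come back as None
        if href.lower().startswith("mailto:"):
            # Drop query parameters such as ?subject=... and normalize case.
            address = href[len("mailto:"):].split("?", 1)[0].strip().lower()
            if address and address not in self.emails:
                self.emails.append(address)


def parse_emails_to_csv(source_url: str, html: str) -> str:
    """Find the contacts in the page code, convert them, and export them as CSV text."""
    parser = MailtoParser()
    parser.feed(html)
    parser.close()
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["source_url", "email"])
    for email in parser.emails:
        writer.writerow([source_url, email])
    return out.getvalue()
```

Keeping `source_url` next to each address makes it easy to trace every exported lead back to the page it came from.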

- Crawler

The previous procedure is only possible with a search robot, a crawler. It is the central part of a search engine: it iterates through pages and enters their data into a database. The crawler used to be "packed" into the concept of "parsing," but the importance of page traversal eventually gave this algorithm a separate term. The crawler "opens" the site, and the parser analyzes what it finds.
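Here is a minimal breadth-first crawler sketch that shows the crawler/parser split: the crawler only discovers and queues pages on one site, and hands their HTML to a later parsing stage. The `fetch` callable is an assumption (for example, a function like `fetch_html` that performs a GET request), and `crawl` and `max_pages` are hypothetical names chosen for illustration.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urljoin, urlparse


class LinkParser(HTMLParser):
    """Collect raw href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url: str, fetch: Callable[[str], str], max_pages: int = 50) -> dict[str, str]:
    """Breadth-first crawl of a single domain; returns {url: html} for the parser."""
    domain = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    pages = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        pages[url] = html
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments so one page is visited once.
            absolute = urljoin(url, href).split("#", 1)[0]
            if urlparse(absolute).netloc == domain and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return pages
```

Injecting `fetch` keeps the traversal logic testable without a network and lets you swap in throttled or proxied requests later.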

- Scraping

In keeping with Occam's razor (entities should not be multiplied), the community settled on a single term that combines the previous two. Business leads scraping covers not only automatic but also manual collection of information. An essential part of the technology is downloading and viewing pages. Web pages, however, were originally created for the best UX, not for machines, so scraping "cleans up" the HTML code to extract the content automatically.

In other words, parsing and scraping originally meant the same thing; now the first is one of the functions of the second. Leading data engineers use it on an industrial scale.
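The "clean-up" of HTML code mentioned above can be illustrated with a small text extractor built on Python's `html.parser`. It is a simplified assumption of what cleaning means: it drops markup, skips `<script>` and `<style>` contents, decodes entities, and collapses whitespace. `TextExtractor` and `html_to_text` are hypothetical names.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Strip markup and keep visible text, skipping <script> and <style> bodies."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()  # convert_charrefs=True by default, so &amp; becomes &
        self._skip_depth = 0
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self._chunks.append(data)

    def text(self) -> str:
        # Collapse all runs of whitespace into single spaces.
        return " ".join(" ".join(self._chunks).split())


def html_to_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()
```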

Scraping is convenient and cheap

Business leads scraping matters in both theory and practice because it builds on the main advantages of the internet itself:

- a large amount of information

- high data availability

- global system

- ease of data dissemination

- speed of information dissemination

Since it has become easy and cheap to publish information as content on web pages, collecting business leads data is neither complex nor costly. The global nature of the system makes the collected data worth analyzing, because the coverage is as broad and objective as possible. Business leads scraping is also fast, and that speed works in its favor by increasing the number of successful applications.

How lead scraping works

Business leads scraping, like other types of scraping, can be divided into the following stages:

- defining the group of sites and the required data

- creating a bot according to the project's terms of reference

- retrieving data in HTML format with a GET request or loading it on demand

- parsing and cleaning business leads data, both during and after scraping

- formatting the structured results as JSON (JavaScript Object Notation) or other formats

- reconfiguring the web scraper when the processed sites change
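The last three stages can be sketched together: extraction rules live in a separate configuration, values are cleaned, and the results are serialized as JSON. This is a simplified illustration under stated assumptions: the `FIELD_PATTERNS` markup (`<h1 class="name">`, `<address>`) is hypothetical, and regular expressions are fragile against real HTML, so a production scraper would normally use a proper HTML parser. The point is that when a site changes its layout, only the configuration needs updating.

```python
import json
import re

# Hypothetical extraction config for one target site. Reconfiguring the scraper
# after a layout change means editing these patterns, not the code below.
FIELD_PATTERNS = {
    "company": re.compile(r'<h1 class="name">(.*?)</h1>', re.S),
    "address": re.compile(r"<address>(.*?)</address>", re.S),
}


def clean(value: str) -> str:
    """Cleaning step: collapse whitespace and trim."""
    return " ".join(value.split())


def extract_lead(html: str, patterns: dict = FIELD_PATTERNS) -> dict:
    """Parsing step: apply each field pattern; missing fields become None."""
    lead = {}
    for field, pattern in patterns.items():
        match = pattern.search(html)
        lead[field] = clean(match.group(1)) if match else None
    return lead


def to_json(leads: list) -> str:
    """Formatting step: structured results as JSON text."""
    return json.dumps(leads, ensure_ascii=False, indent=2)
```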

The tools that make lead data collection easier keep evolving. There are ready-made scrapers, proxy servers to bypass blocking, and turnkey scraping services that perform all tasks for you. Interested in the update? Book a call and we'll tell you what's what.

Simple management of a complex process

The process of searching for and processing business leads data can also be viewed from the user's side. Say a pharmaceutical company manager wants to collect data on pharmacies in order to offer a new product to potential customers and business leads. That is what business leads scraping looks like in practice.

First, open a search engine scraper and enter a query like "pharmacy + clinic + high intracranial pressure." The interface has a settings button where you can set the number of business leads results, for example, the first ten. You can also set a language and geolocation if the search needs to be limited by these parameters. Then press the start button.

After that, we get the links and select the "phone numbers" option in the panel. The leads' phone numbers appear in columns. If the manager already had the necessary site addresses, he could enter them manually and get the phone numbers directly.

Finally, the results are saved in the required format. This saves a lot of time, especially when many sites are searched. With a hundred business leads, imagine how long a manager would take to collect the same information manually.
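Behind a "phone numbers" option there is typically some pattern matching over page text. The sketch below is an assumption about how such an option might work, not the internals of any particular product: a deliberately simple regular expression finds candidate numbers, keeps those with 7 to 15 digits, deduplicates them, and turns `{site_url: page_text}` into `(site, phone)` rows ready to be saved as columns. Real-world phone formats vary widely, so a dedicated phone-number library is usually a better choice in production.

```python
import re

# Optional "+", a digit, then at least five digits/spaces/dots/dashes/parentheses,
# ending on a digit. Intentionally simple and permissive.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{5,}\d")


def extract_phones(text: str) -> list:
    """Return unique phone numbers normalized to digits (keeping a leading '+')."""
    found = []
    for match in PHONE_RE.finditer(text):
        raw = match.group()
        digits = re.sub(r"\D", "", raw)
        if 7 <= len(digits) <= 15:  # filter out dates, times, and short codes
            phone = ("+" if raw.startswith("+") else "") + digits
            if phone not in found:
                found.append(phone)
    return found


def to_rows(results: dict) -> list:
    """Turn {site_url: page_text} into (site, phone) rows, one row per number."""
    return [(site, phone) for site, text in results.items() for phone in extract_phones(text)]
```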

Scraping Software Market Dynamics

Beyond the UI

As in any other profession, lead scraping masters use specific tools in their work. These tools deliver data from sites and are "sharpened" for the task at hand; the goal is to make collecting business lead information more efficient. The options include a browser extension, custom code, or a web application. One of their main functions is automatic filtering of content by specified parameters.

These tools can be sorted onto the workshop shelves by the following criteria (the list is not exhaustive):

- general crawling: an open platform that extracts business leads data from a repository rather than from the live internet in real time

- content capture: uses an application programming interface to view and select the desired content visually

- data transformation: for example, Diffbot extracts information and turns it into a database that is easy for humans to read

- creation of search robots for a chosen business leads scanning model, for use with different libraries and programming languages

- lead scraping without coding: point-and-click cloud platforms

- open-source page scanning: Python code with additional settings for editing the scripts

- site blocking prevention: automatic rotation of a large number of proxies helps extract lead data and clean up search results
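Proxy rotation, the last item above, can be sketched with the standard library: each request goes out through the next proxy in a round-robin pool. The proxy URLs below are hypothetical placeholders; only use proxies you are authorized to use, and keep within the site's terms and the legal limits discussed later in this article. `RotatingOpener` is a hypothetical name.

```python
from itertools import cycle
from urllib.request import OpenerDirector, ProxyHandler, build_opener

# Hypothetical proxy pool; replace with endpoints you are allowed to use.
PROXIES = [
    "http://proxy1.example:8080",
    "http://proxy2.example:8080",
    "http://proxy3.example:8080",
]


class RotatingOpener:
    """Round-robin over a proxy pool, building one urllib opener per request."""

    def __init__(self, proxies):
        self._pool = cycle(proxies)

    def next_opener(self) -> tuple:
        proxy = next(self._pool)
        opener = build_opener(ProxyHandler({"http": proxy, "https": proxy}))
        return proxy, opener

    def fetch(self, url: str, timeout: float = 10.0) -> bytes:
        """Send a GET request through the next proxy (needs network access)."""
        _, opener = self.next_opener()
        with opener.open(url, timeout=timeout) as response:
            return response.read()
```

Round-robin is the simplest policy; a real pool would also drop proxies that keep failing.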

Also popular: robots for multiple operating systems, purely financial scraping tools, internet scraping for search optimization, etc. Choose the tool best suited to the particular task.

Hotkeys for cold outreach

The advantages of business leads scrapers over manual data collection are essentially the same in any market as those of a tractor over a medieval peasant: more work gets done faster and more efficiently, and the machine needs no rest or meal breaks.

Faster and more productive

Within the same task, a program performs many more actions than a person in the same unit of time, so far more tasks can be done automatically than manually. And person-hours are exactly what the volume and cost of work are calculated in. A manager needs days to collect primary business leads for cold outreach; a business leads scraper does it in minutes.

The program does not get tired

Even the most experienced and conscientious employee can make mistakes when scraping leads manually. Even without fatigue, the eyes may "blur" when searching through large amounts of similar information, and an error in a single digit of a lead's phone number nullifies the entire search on that page. In addition, a person has to keep all the specified lead parameters in mind, can accidentally simulate a DDoS attack, or can miss the address of a potential lead.

Until others figured it out

Business leads scraping is a relatively new way of collecting large amounts of data; few know about it, and fewer still use it to search for leads. A company that uses lead scraping today has a competitive advantage over those that will start tomorrow: by then, its lead scraper will already have collected the necessary information, and its managers will have converted it.

Mistakes can be made when setting the tool's parameters, and using it costs money. But that is a cost, not a drawback, and the probability of error here is relatively low. Select what you need and schedule a call.

Not everything can go smoothly

Articles like this customarily list disadvantages alongside advantages for balance. But a product built well to its technical specification has no inherent shortcomings, only bugs that testers find and fix. With business leads scrapers, problems can arise in the data collection process itself. Still, if you do not cross the red line, there will be no complaints about your lead searching.

Moral and ethical standards

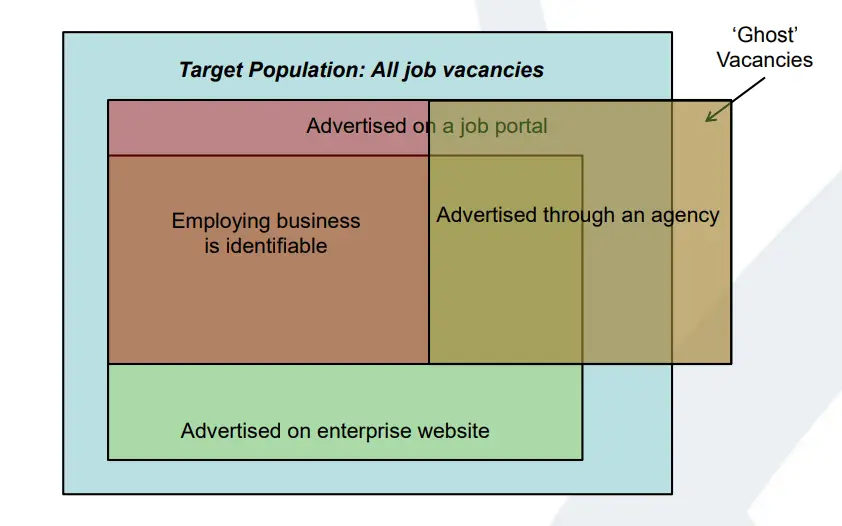

The expression "crawling the site" arouses suspicion at first reading: is it legal? And if not, what makes data collection legal? Consider the manual analog of collecting lead data: whatever can be collected by hand can be collected with code, since that lead data is in the public domain. You can't scrape:

- personal and residential data of leads taken from their accounts or registrations

- in a way that amounts to a DDoS attack

- information that is protected by copyright

- information about state or commercial secrets

From a legal point of view, responsibility even for unscrupulous lead scraping is very vague, since the rights to a single piece of information can belong to many people: the content author, the page owner, a commenter, etc.

No deviation

Making decisions based on big data requires high-quality lead information, which means:

- completeness of coverage

- data accuracy

- relevance of lead information

Business leads scrapers are just tools that do the job they are given. Low-quality data usually stems from an incorrectly set task or inaccurately entered lead search parameters.
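The three quality criteria can be turned into automatic checks. The sketch below makes several assumptions that are not from the source: leads are dicts with `company`, `phone`, optional `email`, and a timezone-aware `scraped_at`; "accuracy" means basic format checks; and "relevance" is approximated as freshness, with a hypothetical 30-day limit.

```python
import re
from datetime import datetime, timedelta, timezone

REQUIRED = ("company", "phone", "scraped_at")            # assumed schema
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")     # basic shape check only


def check_lead(lead: dict, now: datetime, max_age: timedelta = timedelta(days=30)) -> list:
    """Return a list of problem codes; an empty list means the lead passed."""
    problems = []
    # Completeness: every required field is present and non-empty.
    for field in REQUIRED:
        if not lead.get(field):
            problems.append(f"missing:{field}")
    # Accuracy: plausible phone length and e-mail shape.
    phone = lead.get("phone") or ""
    if phone and not 7 <= sum(ch.isdigit() for ch in phone) <= 15:
        problems.append("bad:phone")
    email = lead.get("email")
    if email and not EMAIL_RE.match(email):
        problems.append("bad:email")
    # Relevance (approximated as freshness): the record is not too old.
    scraped_at = lead.get("scraped_at")
    if scraped_at and now - scraped_at > max_age:
        problems.append("stale")
    return problems


def quality_report(leads: list, now: datetime) -> dict:
    """Summarize how many leads are clean and which ones have issues."""
    issues = {i: p for i, lead in enumerate(leads) if (p := check_lead(lead, now))}
    return {"total": len(leads), "clean": len(leads) - len(issues), "issues": issues}
```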

Overcoming technical barriers

Since not all site owners welcome business leads scrapers on their resources, they may set up obstacles: changing the structure of the page code, requiring a captcha for authorization, blocking by traffic volume per unit of time, etc. Without crossing the red line mentioned above, these difficulties can be overcome as follows:

- take a screenshot and extract the lead data from the image (the maps scraper case)

- transfer work with a captcha to a third-party service

- extend the scraping process over time

- imitate the behavior of a live visitor

Site owners can apply several protection methods against business leads scrapers at the same time, so scraping requires constant monitoring and adjustment of lead search tasks.
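"Extending the scraping process over time" can be made concrete with a per-host throttle plus a `robots.txt` check from Python's `urllib.robotparser`. This is a minimal sketch under assumptions: the 2-second interval is a hypothetical default, and the clock and sleep functions are injectable only so the logic can be tested without real waiting. Spacing out requests also keeps a scraper from accidentally loading a site like a DDoS attack.

```python
import time
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


class PoliteThrottle:
    """Enforce a minimum interval between requests to the same host."""

    def __init__(self, min_interval: float = 2.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # hypothetical default, in seconds
        self._clock = clock
        self._sleep = sleep
        self._last = {}  # host -> time of the last request

    def wait(self, url: str) -> float:
        """Sleep if needed before requesting url; return the delay applied."""
        host = urlparse(url).netloc
        now = self._clock()
        last = self._last.get(host)
        delay = 0.0
        if last is not None:
            delay = max(0.0, self.min_interval - (now - last))
        if delay:
            self._sleep(delay)
        self._last[host] = now + delay
        return delay


def allowed_by_robots(robots_txt: str, user_agent: str, url: str) -> bool:
    """Check a URL against robots.txt rules that were already downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

Calling `throttle.wait(url)` right before each GET request spreads the load per host, while requests to different hosts are not delayed by each other.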

Choosing a scraper depending on the task

Automated collection of big data is helpful in any field of human activity. It is the clay from which you can make an Easter Island moai or a small, elegant netsuke. The shape is set by the specific goal the sculptors of business leads scrapers work toward.

- marketers study prices, determine the target audience, and gauge the level of demand

- IT managers collect product change data, run tests, and assess significance

- copywriters fill entire virtual shelves of online stores with product cards

- top managers monitor competitors' business activity and make technology forecasts

- for private purposes, you can build collections of songs, lessons, or paintings

- site owners check their resources for SEO issues and broken links

In other words, the principles behind business leads scrapers stay the same; only the input parameters change with your purpose.

Depending on the goal, business leads scrapers can perform the following tasks:

- monitoring — comparison of prices for the same group of goods relative to the closest competitors

- joint purchases: in social media, you can optimize how the storefront is filled and keep track of stock and prices

- online stores: loading goods from a supplier, monitoring prices and stock

- SEO analytics — analysis of the semantic core, keywords, competitor data

Custom solutions fall outside these general categories because each lead search task is unique.
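The monitoring task from the list above (comparing prices for the same goods against the closest competitors) reduces to a simple computation once the prices have been scraped. This sketch assumes prices are already collected into dicts keyed by SKU; `PriceGap`, `find_undercuts`, and the threshold parameter are hypothetical names. For each SKU it finds the cheapest competitor and flags items where our price is higher by more than `threshold_pct` percent.

```python
from dataclasses import dataclass


@dataclass
class PriceGap:
    sku: str
    our_price: float
    best_competitor: str
    competitor_price: float

    @property
    def gap_pct(self) -> float:
        """How much more expensive we are than the cheapest competitor, in percent."""
        return (self.our_price - self.competitor_price) / self.competitor_price * 100


def find_undercuts(ours: dict, competitors: dict, threshold_pct: float = 0.0) -> list:
    """ours: {sku: price}; competitors: {shop: {sku: price}}."""
    gaps = []
    for sku, our_price in ours.items():
        offers = {shop: prices[sku] for shop, prices in competitors.items() if sku in prices}
        if not offers:
            continue  # nobody else sells this SKU
        shop = min(offers, key=offers.get)
        gap = PriceGap(sku, our_price, shop, offers[shop])
        if gap.gap_pct > threshold_pct:
            gaps.append(gap)
    return gaps
```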

A difficult task requires scraping as a service

Let's imagine for a moment that this is not about business leads scraping but about scraping in the literal sense. To wipe the dust off a table, you use a handy tool, a sponge, and then wipe it dry with another, a rag. But if the whole apartment needs cleaning, even the most convenient tools won't make it easy. You will have to call cleaning specialists: they have special equipment, knowledge, and experience.

List of tools for business leads scrapers

When implementing a specific business leads scraping solution, you need to choose the particular tools strategically. By how they work, they can be divided into the following groups:

- by resource use: the algorithm runs on the customer's side or the contractor's side

- by access method: cloud and desktop solutions

- by framework: Python, JavaScript, etc.

Top-5, according to the popupsmart resource, looks like this:

- Bright Data is a scalable developer tool

- Apify is a powerful platform for crawling and automating sites without using code

- Scrape.do is the most efficient scraper with a fast application programming interface

- ParseHub is a desktop tool with a free version and great features

- Diffbot is one of the best for extracting data

You can figure out scraping yourself using DIY platforms. But, first, this takes time, and second, such tools only handle straightforward cases. An experienced master with professional business leads scraping tools will do everything faster, more reliably, and more conveniently.

Managed scraping as a service

In business leads scraping, even ready-made tools take skill to use. And what if you need to use several tools skillfully at the same time? Or develop a new tool because nothing off the shelf fits your task? And it must also be the right size and weight.

This is where scraping as a service performed by specialists comes to the rescue. Their main trump card is experience: they have probably done something similar before, so they will quickly and efficiently select an effective model for you.

Business leads scraping projects most often follow one of two cooperation models:

- One-time scraping collects data from web resources for an individual task with described collection parameters. The result is a custom tool you can always reuse, but it performs strictly specified functions.

- A high-load scraping engine is a universal tool whose parameters can change with the needs of a particular business. It costs more but is worth it: high load implies more income.

Complex solutions require close communication at every project stage, which is another benefit of managed scraping as a service.

Successful practices of using business leads scrapers

While the theoretical benefits of business leads scraping are undeniable, practice may have some rough edges. They concern moral, ethical, and legal standards, the quality of the lead data obtained, and technical problems during use. Practical problems require clear solutions; here are some from DATAFOREST.

Sell cheap

The customer asked for an application for drop shippers that shows the best products and calculates potential profit. The solution included user and admin parts (for subscriptions), a scraper for collecting prices from Shopify stores, high-load scraping algorithms for extracting data from AliExpress, Facebook, and TikTok, and artificial intelligence algorithms for economic indicators and profit margins.

The letter of the law

DATAFOREST completed a cataloging assignment for a legal consulting company that wanted to manage, classify, and store court files, documents, and contracts. The proposed algorithm selected new files based on daytime traffic and produced updates at night. Proxy servers and artificial intelligence technologies were introduced to overcome bot protection and process 14.8 million pages (14 GB) daily.

The case for 60 million

The client ordered automatic monitoring of prices and stock availability for more than 100,000 products from more than 1,500 stores, with infrastructure spending of up to $1,000. DATAFOREST met the limit by building the monitoring with custom scripts on a distributed server architecture. An algorithm-based notification system was also implemented to monitor more than 60 million pages daily. The customer saved 1,000 hours of manual work per month, and the custom price arbitrage algorithm brought an additional $50-70 thousand per month in profit.

Creativity for good

Creating a business leads scraper benefits both customers and contractors. On the one hand, it delivers high search efficiency and data that is easy to use. On the other hand, studying different site architectures in the context of big data theory is of scientific interest. For the developer's capabilities to fully meet the client's lead generation needs, it is best to contact specialists experienced in creating both universal and custom ready-made solutions. DATAFOREST is such a company.

FAQ

How do business leads scrapers work?

Lead scraper tools collect lead data from many sites and check it against the specified parameters. The results are presented in a human-readable form.

What are the advantages of leads scrapers for business?

Business leads scrapers collect information from resources that offer no application programming interface (API) and no easy access to their lead data, including data that reveals information about competitors.

Is it legal to use business lead scrapers?

By default, business leads scrapers are legal, but copyright infringement and DDoS attacks are not allowed, and lead data can only be taken from the public domain.

How do you choose the right business leads scraping software for your needs?

You need to formulate the lead search task correctly and choose a service that matches the given parameters. To avoid wasting time and money, contact the specialists at DATAFOREST.

What scraping practices lead to the best data quality?

You can build an automated lead data quality control system using frameworks (general-purpose or custom). They provide ready-made templates for the code that solves your lead search problem.
