In the executive lexicon, "data-driven" has been a pervasive, almost platitudinous, term for the better part of a decade. We've built dashboards, hired analysts, and invested heavily in Business Intelligence (BI) tools, all in a relentless pursuit of harnessing the information within our own walls. But a fundamental truth is taking hold in boardrooms in 2025: the insights that create market leaders aren't buried in internal servers anymore. They are out in the open, scattered across the immense and chaotic landscape of public web data.

The focus is pivoting from introspection to external awareness. Knowing your own sales figures is table stakes; true advantage comes from knowing your rival's real-time pricing, decoding the chatter on social media that signals the next big trend, and spotting supply chain vulnerabilities before they become headline news. This is where the strategic application of web scraping stops being a technical curiosity and becomes a pillar of corporate strategy. But what is web scraping used for at this strategic level? It’s about building a real-time, high-fidelity map of your entire market ecosystem.

The New Gold Mine of 2025: External Data

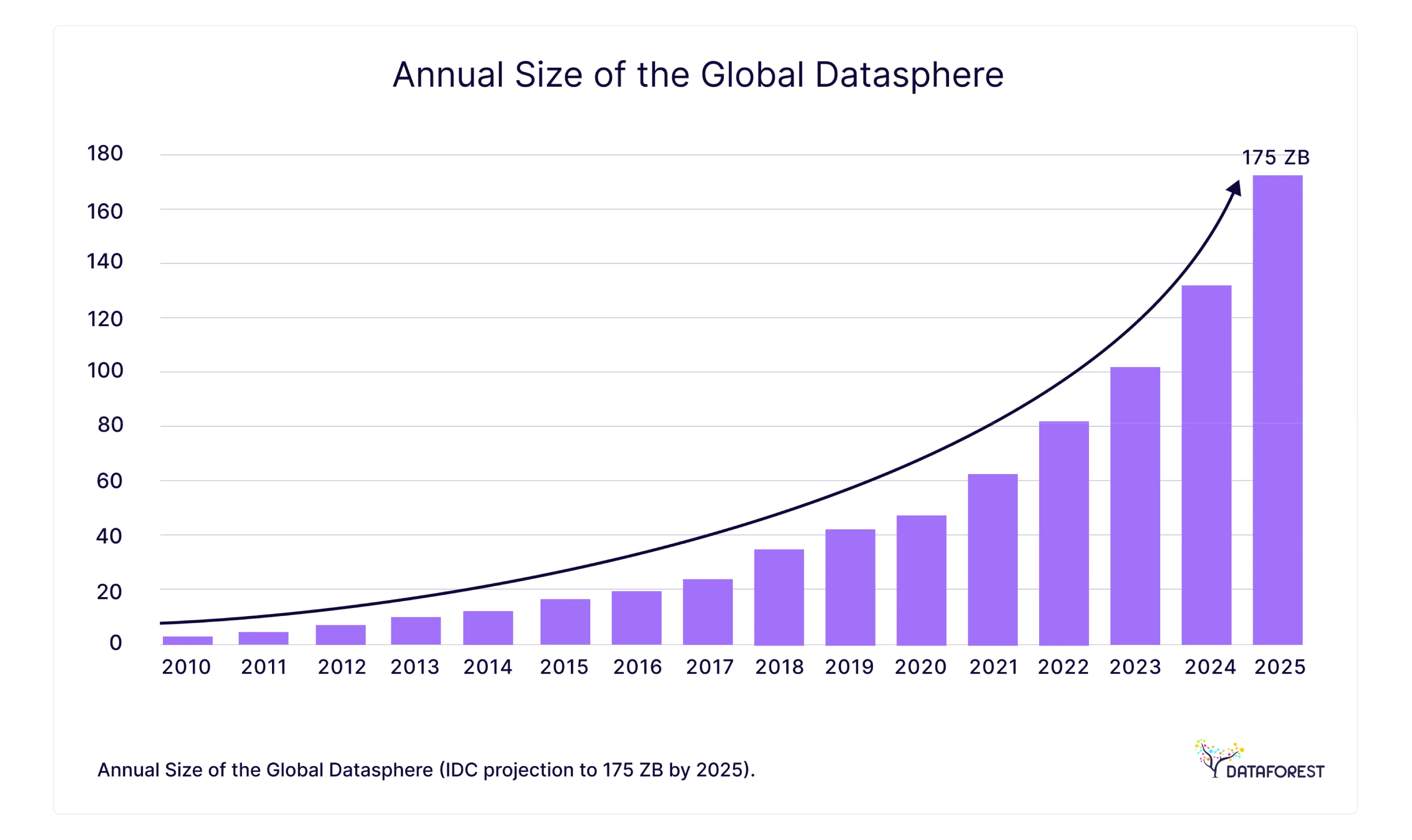

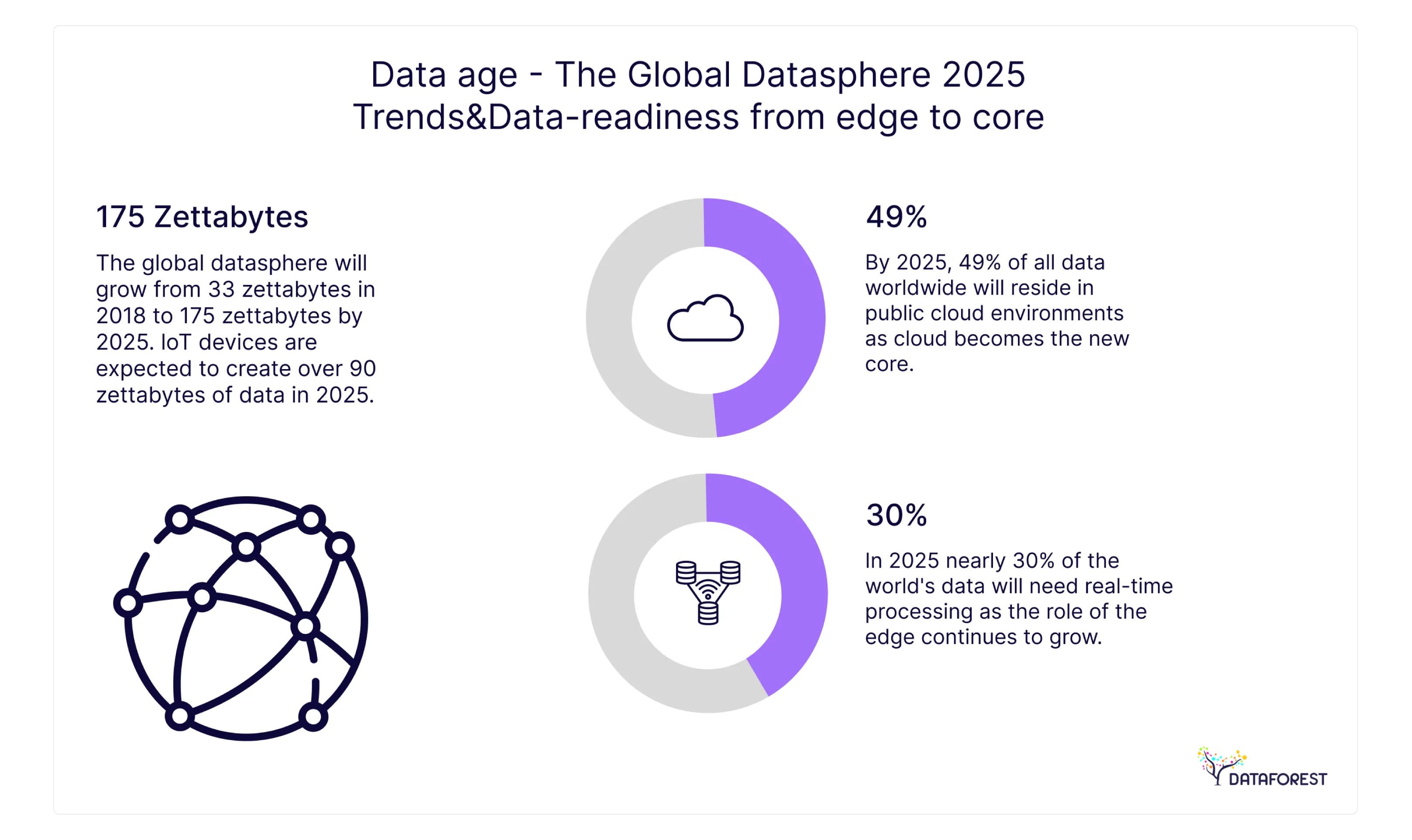

The global datasphere is expanding at a rate that defies conventional comprehension: IDC projects it will reach ~175 zettabytes by 2025. A significant and ever-growing portion of this is public, spread across websites of every kind, from e-commerce platforms and real estate portals to social networks and regulatory filing databases. This isn't random digital exhaust; it's the raw material for market dominance, awaiting refinement into actionable intelligence.

For too long, strategy has been built on the comfortable foundation of internal data—sales history, customer files, operational reports. We supplemented this with the slow, filtered lens of traditional market research. These tools gave us a picture of what was. They are backward-looking by nature. External web data, accessed through sophisticated web data mining and scraping, delivers a live feed of your market as it is.

This shift is creating a new competitive chasm. A 2023 report from McKinsey underscores that companies embedding AI and external data into their core commercial functions are capturing an additional 5 to 15 percent of revenue and improving marketing ROI by 10 to 20 percent. This isn't an incremental improvement; it's a fundamental change in performance capability. Mastering the many uses of web scraping has graduated from an IT task to a C-suite mandate, with a direct impact on P&L and shareholder value.

Why Traditional Data Acquisition Fails Today

The strategic planning cycle of yesterday is dangerously out of sync with the market realities of today. The core challenge lies in the velocity, granularity, and scope of data required to make winning decisions. Traditional methods of data acquisition are failing on all three fronts.

- The Speed Deficit: Commissioning a market study takes weeks. By the time it arrives, your competitor has already launched a new pricing model, a viral social media trend has altered customer desires, or a regulatory filing has changed the rules of the game. Automated data collection via web scraping closes this gap, operating at the speed of the market and enabling nimble, decisive action.

- The Granularity Gap: Surveys and industry reports provide aggregated, high-level views. They can tell you the average price of a product in a region but can't provide the day-to-day dynamic pricing of your top three competitors on a specific SKU. They can't offer deep customer insights because the complex data analysis required to parse thousands of individual product reviews is simply not feasible with old methods.

- The Scope Limitation: Internal data, by its very nature, is a closed loop. It can tell you how your customers behave on your platforms. It cannot reveal why non-customers choose competitors, how your brand is perceived across the broader web—from social media to search engine rankings—or which emerging players are poised to disrupt your market. The scraping process breaks down these walls, providing a panoramic view of the entire competitive landscape.

In essence, relying solely on traditional data is like navigating a high-speed motorway using a map from last year. You might stay on the road, but you’ll miss the new exits, be unaware of the traffic jams ahead, and be completely blindsided by the new highway that just opened up.

How Web Scraping Powers Business Growth: 14 Proven Use Cases for 2025

The theoretical value of external data becomes tangible when you explore the specific use cases of web scraping across different industries. These are not futuristic concepts; they are proven, high-impact strategies being deployed by market leaders today to create a sustainable competitive advantage. What follows are some of the most compelling business cases for 2025.

SaaS & Marketplaces — Real-time competitor and pricing intelligence

In the cutthroat worlds of SaaS and online marketplaces, standing still is moving backward. You must have a constant, real-time pulse on your competitors' pricing tiers, feature updates, and customer feedback. A dynamic pricing strategy becomes possible when you scrape rival sites to deconstruct their offers and promotions. This intelligence lets you adjust your own positioning on the fly, protecting your market share without giving away margin.
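To make "adjusting on the fly without giving away margin" concrete, here is a minimal Python sketch of one possible repricing rule. It assumes competitor prices have already been scraped and matched to your SKU, and that unit cost is known internally; the `PricePolicy` fields, the 15% margin floor, and the 1% undercut are hypothetical parameters, not a recommended policy.

```python
from dataclasses import dataclass


@dataclass
class PricePolicy:
    unit_cost: float          # internal cost per unit (assumed known)
    min_margin: float = 0.15  # hypothetical floor: never below cost * (1 + min_margin)
    undercut: float = 0.01    # hypothetical: price 1% below the cheapest rival


def suggest_price(current_price: float, competitor_prices: list[float], policy: PricePolicy) -> float:
    """Suggest a price from scraped competitor prices.

    Undercuts the cheapest valid competitor slightly, never drops below the
    margin floor, and never raises the price above the current one.
    """
    floor = policy.unit_cost * (1 + policy.min_margin)
    valid = [p for p in competitor_prices if p > 0]  # discard failed or zero scrapes
    if not valid:
        return current_price  # no usable market signal; keep the current price
    target = min(valid) * (1 - policy.undercut)
    return round(max(floor, min(current_price, target)), 2)
```

For example, with a unit cost of 80, a current price of 120, and rivals at 110 and 115, the rule suggests 108.9; if the cheapest rival drops to 90, it stops at the 92.0 margin floor instead of following them down. Real dynamic-pricing systems layer demand signals, stock levels, and approval guardrails on top of a rule like this.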

Retail & Loyalty — Personalization based on external customer behavior

A customer's purchase history is just one chapter of their story. To build real loyalty, you need to understand their broader world. Ethically scraping public forums, interest blogs, and social platforms allows retailers to assemble a far richer picture of their customers. This fuels hyper-targeted promotions and product suggestions that reflect a person's actual lifestyle. The result is a powerful engine for advanced E-commerce analytics and true personalization. For an in-depth look at this, explore solutions for E-commerce Data Management.

Fintech — Chargeback trend monitoring and policy aggregation

The Fintech arena is a minefield of shifting regulations and novel fraud tactics, and financial data scraping is key to navigating it. By scraping public forums and regulatory bulletins, firms can get an early warning on new chargeback schemes and keep an up-to-date view of changing policies, making scraping a critical component of comprehensive Fraud prevention. The same approach extends beyond consumer finance into the stock market, where scraped alternative data can provide an edge in algorithmic trading and risk assessment.

Logistics — Fleet and asset monitoring from open data

For any company moving goods, visibility is the bedrock of efficiency. Web scraping can systematically pull open data from port authority sites, public traffic feeds, and weather services. This automated data collection builds a more complete operational picture than any single internal system can provide, leading to smarter routes, better ETAs, and a proactive stance against disruptions.

Job & Real Estate — Smart analysis of listings and vacancies

Real estate and talent markets are defined by a tidal wave of public listings. Professionals using web scraping for real estate services can aggregate listings from countless property websites to analyze market trends in pricing and time on market, while the same approach applied to job boards turns open vacancies into a read on hiring demand. This is a core application for modern Investment analytics that fuels smarter decisions.

FoodTech — Restaurant menu aggregation at scale

Food delivery and analytics platforms depend on a comprehensive, current database of menus. Web scraping is the most practical method for collecting menu items, prices, and ingredients from the fragmented landscape of independent restaurant websites. This data is the lifeblood of their search tools, their nutritional calculators, and their analysis of FoodTech market trends.

TravelTech — Dynamic offers built from scraped tourism data

The travel industry is a high-velocity data environment: prices for flights and hotels change constantly. Travel industry data scraping gives online travel agencies (OTAs) the power to present the most current options. A more sophisticated use is to monitor rival prices and automatically adjust your own offers to win the booking. This is the core of modern price monitoring and the essence of what advanced Price tracking software delivers.

Beauty & Lifestyle — Extracting trends from social & ecommerce

Beauty and lifestyle trends are born online. Through strategic Social media monitoring, which involves scraping Instagram, TikTok, and major retail sites (subject to each platform’s terms), brands can catch these trends at inception. This means spotting the next breakout ingredient, or using sentiment analysis to detect a shift in consumer mood, long before it shows up in traditional market reports, directly informing product and marketing decisions.

Healthcare — Monitoring drug prices and availability

As the healthcare industry moves toward greater transparency, web scraping becomes an essential tool. It can monitor the prices of prescription drugs across various online pharmacies, arming insurers and public health bodies with critical data. It can also track drug shortages posted on regulatory sites, which makes it a vital early-warning tool.

Insurance — Automated risk assessment from public data

Insurers are aggressively moving beyond traditional actuarial tables to enhance their Risk assessment tools with alternative data. Through web scraping, they can pull public data for a more nuanced view of risk. This could mean scraping municipal permit data for property insurance or news reports for signs of distress in a commercial client. This data can even be applied to proactive Cybersecurity monitoring by tracking mentions of vulnerabilities associated with a client's software stack. Explore more at our Insurance industry page.

Manufacturing — Supplier price and quality benchmarking

A resilient supply chain is an informed supply chain. Web scraping gives manufacturers a global view to benchmark comprehensive product data, including prices of raw materials and components, from various supplier catalogs and B2B marketplaces. It can also be used for supplier quality benchmarking by scanning industry forums for chatter about a supplier's reliability.

Education — Aggregating course offerings and reviews

EdTech firms and universities are in a fierce battle for students. They can gain a sharp competitive edge by scraping competitor websites for data on course catalogs, tuition fees, and student reviews. This analysis uncovers gaps in the market and informs curriculum development, ensuring programs align with what students and the job market actually want.

Energy & Utilities — Market price monitoring and regulatory updates

Energy markets are defined by volatility and dense regulation. Web scraping offers a clear, real-time window into wholesale electricity and gas prices from public data exchanges. At the same time, automated scraping of regulatory commission sites acts as a watchdog, flagging proposed rule changes shortly after they are published so compliance teams can prepare and adapt.
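One simple way to build such a watchdog is to fingerprint each monitored page and alert when the fingerprint changes. The Python sketch below assumes the page text has already been fetched and stripped of volatile elements such as timestamps or session tokens; the URL in the usage is a placeholder, and a production system would also diff old and new text so reviewers can see what actually changed.

```python
import hashlib
import re


def fingerprint(page_text: str) -> str:
    """Hash the page text after collapsing runs of whitespace, so purely
    cosmetic reflowing of the page does not raise a false alert."""
    normalized = re.sub(r"\s+", " ", page_text).strip()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def detect_changes(snapshots: dict[str, str], seen: dict[str, str]) -> list[str]:
    """Compare fresh snapshots (url -> text) with stored fingerprints (url -> hash).

    Returns the URLs that are new or whose content changed, and records the
    new fingerprints in `seen` so the next run compares against them.
    """
    changed = []
    for url, text in snapshots.items():
        digest = fingerprint(text)
        if seen.get(url) != digest:
            changed.append(url)
            seen[url] = digest
    return changed
```

Run on a schedule, `detect_changes` returns only the pages worth a compliance analyst's attention, rather than every page crawled.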

Media & Entertainment — Monitoring content trends and audience feedback

Media companies can use web scraping to see what's truly resonating with audiences across the entire digital landscape. By applying sentiment analysis to social media comments and shares, they can get an unfiltered read on audience reaction to different content. This is a foundational element of active Online reputation management and informs content strategy to maximize engagement.
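As a deliberately simple illustration of scoring audience reaction, the Python sketch below uses a tiny hand-written word list with basic negation handling. This lexicon approach is a stand-in chosen for readability, not the method any particular platform uses; the word lists are hypothetical, and production systems typically rely on trained language models that handle sarcasm, emoji, and context far better.

```python
import re

# Hypothetical, deliberately tiny lexicons for illustration only.
POSITIVE = {"love", "great", "amazing", "brilliant", "fun"}
NEGATIVE = {"hate", "boring", "awful", "worst", "disappointing"}
NEGATORS = {"not", "never", "no"}


def comment_score(text: str) -> int:
    """+1 per positive word, -1 per negative word; a preceding negator flips the sign."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, tok in enumerate(tokens):
        polarity = int(tok in POSITIVE) - int(tok in NEGATIVE)
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity  # "not great" counts as negative
        score += polarity
    return score


def audience_reaction(comments: list[str]) -> dict[str, float]:
    """Summarize scraped comments as shares of positive/negative reactions and a mean score."""
    scores = [comment_score(c) for c in comments]
    n = len(scores) or 1  # avoid division by zero on an empty batch
    return {
        "positive_share": sum(s > 0 for s in scores) / n,
        "negative_share": sum(s < 0 for s in scores) / n,
        "mean_score": sum(scores) / n,
    }
```

Aggregating per-comment scores into shares of positive and negative reactions is what makes the numbers comparable across titles, episodes, or campaigns.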

How AI Turns Web Scraping Into Strategic Intelligence

Getting the data is just the beginning. The real transformation happens when the raw, structured data pulled from the web gets infused with Artificial Intelligence and Machine Learning. This is the moment web scraping moves from a simple acquisition tactic to the fuel for a powerful strategic intelligence engine, creating true Big data solutions.

Beyond scraping — building intelligent data pipelines

A one-time data pull from a website provides a snapshot. A continuous, automated data pipeline provides a live, evolving understanding of your market. The goal is to move beyond a static spreadsheet and build dynamic systems that continuously ingest, clean, and structure data from the web without manual intervention. These intelligent pipelines can automatically detect changes in a website's structure and feed information directly into your core business systems. This concept is at the heart of our custom data management and analytics solutions.
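A common way to notice that a site's structure has changed is to watch how often each expected field is actually extracted: when a redesign breaks an extraction rule, that field's fill rate collapses even though the crawl itself still "succeeds". The Python sketch below illustrates the idea under stated assumptions: the field names, the baseline rates, and the 0.2 tolerance are hypothetical, and a real pipeline would pair such alerts with repair of the extraction rules rather than just reporting them.

```python
from collections.abc import Iterable

# Hypothetical schema for a product-listing extractor.
EXPECTED_FIELDS = ("title", "price", "sku")


def field_fill_rates(records: list[dict], fields: Iterable[str] = EXPECTED_FIELDS) -> dict[str, float]:
    """Share of records in which each expected field was extracted with a non-empty value."""
    fields = list(fields)
    if not records:
        return {name: 0.0 for name in fields}
    total = len(records)
    return {
        name: sum(1 for r in records if r.get(name) not in (None, "")) / total
        for name in fields
    }


def structure_alert(records: list[dict], baseline: dict[str, float], tolerance: float = 0.2) -> list[str]:
    """Fields whose fill rate dropped more than `tolerance` below their historical
    baseline, a typical symptom of a layout change breaking an extraction rule."""
    current = field_fill_rates(records, baseline.keys())
    return [name for name, base in baseline.items() if base - current[name] > tolerance]
```

The baseline would come from a period when the extractor was known to be healthy; comparing each new batch against it turns silent data loss into an explicit alert.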

AI models built around your business context

Once the data is flowing, AI models can be trained to uncover insights that are invisible to human analysts. This fusion of web‑scale information and machine learning transforms raw inputs into predictive power, creating a feedback loop where market events are anticipated, not just reacted to.

Legal, Ethical & Scalable: What Businesses Must Know in 2025

Bringing web scraping into the enterprise demands a watertight strategy for compliance, ethics, and security. For the C-suite, managing risk is just as important as seizing opportunity. Any strategic partner in this space must deliver definitive assurances across the board.

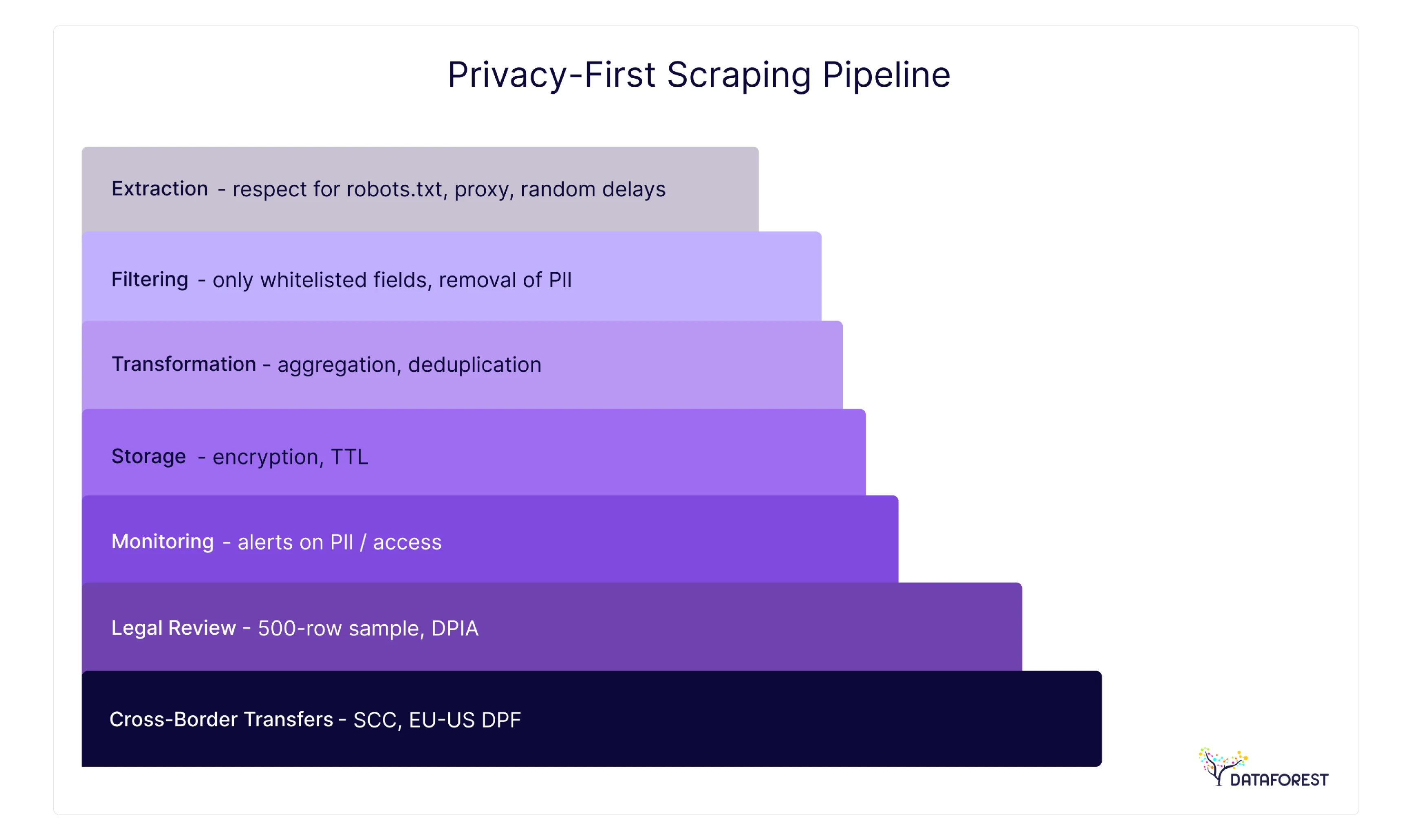

GDPR-ready, transparent, and enterprise-grade

The web of data privacy regulations, from GDPR in Europe to CCPA in California, is intricate and unforgiving. Any enterprise-grade web scraping program must be built from the ground up on a "compliance-by-design" framework. The non-negotiables include:

- Focusing on Public Data: The foundational rule is to only access data that is publicly available, not locked behind a login or paywall.

- Respecting robots.txt: Following the robots.txt directives of a website isn't just a courtesy; it's a fundamental best practice for any ethical crawler.

- Avoiding Personal Data: For most strategic business cases, like price monitoring or market analysis, personally identifiable information (PII) is irrelevant and an unnecessary risk. A properly architected scraping solution will be designed to avoid collecting it. When sourcing business contact details for lead generation, strict adherence to all applicable privacy laws is paramount.

- Rate Limiting: A responsible scraping tool or service never hammers a target website. By capping request rates per site and scheduling crawls intelligently, the scraping process avoids disrupting site operations, ensuring it remains an ethical and sustainable practice.
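The robots.txt and rate-limiting practices above can be enforced in code. The Python sketch below uses the standard library's `urllib.robotparser` to honor Disallow rules and any declared Crawl-delay, and spaces out consecutive requests to a site. It assumes robots.txt has already been downloaded (keeping the sketch offline) and that one scheduler is created per target site, since robots rules are site-specific; the user-agent string and the two-second default interval are hypothetical choices, not a standard.

```python
import time
from typing import Optional
from urllib.robotparser import RobotFileParser

USER_AGENT = "ExampleResearchBot/1.0"  # hypothetical crawler identity


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse a robots.txt body that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class PoliteScheduler:
    """Gate requests to one site on its robots.txt and space them out.

    The interval between requests is the larger of `min_interval` and the
    site's Crawl-delay for our user agent, if it declares one.
    """

    def __init__(self, rules: RobotFileParser, min_interval: float = 2.0):
        self.rules = rules
        declared = rules.crawl_delay(USER_AGENT)  # None if the site sets no delay
        self.interval = max(min_interval, float(declared or 0))
        self.last_hit: Optional[float] = None

    def allowed(self, url: str) -> bool:
        """True if robots.txt permits our user agent to fetch this URL."""
        return self.rules.can_fetch(USER_AGENT, url)

    def wait_turn(self) -> float:
        """Sleep until the site may be contacted again; return seconds slept."""
        now = time.monotonic()
        wait = 0.0 if self.last_hit is None else max(0.0, self.last_hit + self.interval - now)
        if wait > 0:
            time.sleep(wait)
        self.last_hit = time.monotonic()
        return wait
```

A crawler would call `allowed(url)` before queuing a URL and `wait_turn()` immediately before sending each request, so a site that asks for a five-second Crawl-delay never sees requests closer together than that.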

Trust & auditability for legal and security teams

Before a large organization can embrace web scraping, its legal and security teams will demand proof and confidence in the methodology. A credible partner must be prepared to offer:

- Transparent Operations: Full clarity on what data is being sourced, from where, and by what methods.

- Data Lineage: The capacity to trace any data point back to its original public source, creating an unimpeachable audit trail.

- Secure Data Handling: Ironclad protocols for data storage and transmission, including robust encryption and access controls.

- Indemnification: A partner willing to stand behind their methods and provide contractual assurances is a must for mitigating corporate risk.
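Data lineage largely comes down to never separating a value from where and when it was collected. The Python sketch below shows one possible record shape under that assumption: each extracted value carries its source URL, fetch time, a SHA-256 hash of the raw page, and the extractor version. The field names and the versioning scheme are hypothetical; the point is that an auditor can check an archived page against the stored hash.

```python
import hashlib
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class LineageRecord:
    """One extracted value plus the provenance needed to audit it (hypothetical schema)."""
    field_name: str
    value: str
    source_url: str
    fetched_at: str          # ISO-8601 UTC timestamp of the fetch
    content_sha256: str      # hash of the exact raw page the value was parsed from
    extractor_version: str   # identifies which parser build produced the value


def with_lineage(field_name: str, value: str, source_url: str, raw_page: bytes,
                 extractor_version: str = "v1", fetched_at: Optional[str] = None) -> LineageRecord:
    """Wrap an extracted value with its provenance; defaults the timestamp to now (UTC)."""
    timestamp = fetched_at or datetime.now(timezone.utc).isoformat()
    digest = hashlib.sha256(raw_page).hexdigest()
    return LineageRecord(field_name, value, source_url, timestamp, digest, extractor_version)


def verify_against_archive(record: LineageRecord, archived_page: bytes) -> bool:
    """True if an archived copy of the page is byte-identical to what was parsed."""
    return hashlib.sha256(archived_page).hexdigest() == record.content_sha256
```

Because the record is immutable and `asdict` turns it into a plain dictionary, it can be stored alongside the value in any warehouse table and queried during an audit.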

Compliance and data protection are not obstacles; they are prerequisites for scalable and defensible business intelligence.

ROI Calculator: Quantifying Your Web Scraping Investment

The strategic arguments are compelling, but budgets are approved based on numbers. Any major investment must be justified with a clear-eyed business case. While a plug-and-play calculator isn't realistic, the framework for a cost-benefit analysis is straightforward.

Cost-Benefit Analysis Framework

Costs:

- Direct Costs: The fees for a managed data service like DATAFOREST or the all-in cost of an in-house team (salaries, infrastructure, software).

- Implementation & Integration Costs: The one-time effort to plug the data feed into your existing business systems.

Benefits (Value Generation):

- Cost Savings: Calculate the man-hours your team currently burns on manual data collection and research. Automating this with website crawling produces immediate, hard-dollar savings.

- Revenue Uplift: This is the primary value driver. Model the impact of a 1-3% margin gain from smarter pricing, or the value of new deals closed through automated lead generation solutions.

- Risk Mitigation: Assign a dollar value to the disasters you avoid—the cost of a major product stock-out, or a fine for missing a key regulatory change.
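The framework above reduces to simple arithmetic. The Python sketch below turns it into a first-year ROI and a payback period for the one-time integration cost; all inputs are hypothetical placeholders to be replaced with your own estimates, and revenue uplift can be modeled, for example, as in-scope revenue multiplied by the expected margin gain.

```python
def roi_summary(
    annual_service_cost: float,
    implementation_cost: float,
    hours_saved_per_month: float,
    hourly_rate: float,
    annual_revenue_uplift: float,
    annual_risk_avoided: float,
) -> dict[str, float]:
    """First-year ROI and payback period for a data-collection investment.

    All inputs are estimates supplied by the caller.
    """
    # Benefits per year: labor savings + revenue uplift + risk mitigation.
    annual_savings = hours_saved_per_month * 12 * hourly_rate
    annual_benefit = annual_savings + annual_revenue_uplift + annual_risk_avoided

    # Costs in year one: recurring service fees plus one-time integration.
    first_year_cost = annual_service_cost + implementation_cost
    roi = (annual_benefit - first_year_cost) / first_year_cost

    # Payback: months of net monthly benefit needed to recover the one-time cost.
    monthly_net = (annual_benefit - annual_service_cost) / 12
    payback_months = implementation_cost / monthly_net if monthly_net > 0 else float("inf")

    return {
        "annual_benefit": annual_benefit,
        "first_year_cost": first_year_cost,
        "roi": roi,
        "payback_months": payback_months,
    }
```

With hypothetical figures of $60,000 a year in service fees, $20,000 for integration, 160 hours a month saved at $50 an hour, $50,000 of uplift, and $10,000 of avoided risk, `roi_summary(60_000, 20_000, 160, 50, 50_000, 10_000)` gives a first-year ROI of 95% and a payback of 2.5 months. The result is only as good as the uplift estimate, which is why measured pilot results are worth more than assumptions.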

Industry Benchmarks and Performance Metrics

When building your case, leverage industry benchmarks. As mentioned, firms that excel at data and analytics are seeing tangible results. A pilot project can establish a baseline. For instance, track the conversion rate of leads from scraped data versus other channels. Measure the direct revenue impact of three pricing adjustments made based on scraped competitor data. These concrete metrics move the conversation from theory to P&L impact.

Break-even Analysis for Different Company Sizes

- Startup/SME: Break-even is often achieved fast by replacing costly manual work or by closing just a few deals from hyper-targeted, automated leads. The focus is on agility and cost-effective lead generation automation.

- Mid-Market: ROI here is about scaling intelligence. Break-even happens when dynamic pricing and competitive monitoring deliver margin gains that eclipse the service cost.

- Enterprise: Value is strategic, coming from risk reduction, supply-chain visibility, and fuel for large-scale AI and machine learning programs. Break-even is a portfolio-level calculation, where even a small efficiency gain can yield millions in value.

Choosing Your Path: Off-the-Shelf Tools vs. Managed Solutions

Once the business case is clear and the ROI is compelling, the next logical question is how to execute. The market offers a spectrum of options, from DIY frameworks for internal development teams to user-friendly platforms. For teams still exploring, several well-known services provide a starting point:

Popular scraping services:

- Oxylabs — best for businesses requiring large-scale scraping capabilities.

- Octoparse — easy to use; suitable for non-technical users.

- ParseHub — works well with dynamic content.

- Apify — runs both ready-made scrapers and custom scripts.

- Bright Data — ideal for large-scale data collection via proxies and IP rotation.

- Scrapy — best when you have a developer who can build custom spiders in Python.

Of course, if you have a complex data structure, non-standard sources, or require regular integration with a CRM, business-intelligence systems, or internal databases, simple scraping utilities will no longer suffice—and that is where the divide between a generic tool and a strategic solution becomes critical.

What DATAFOREST Offers: Custom Web Scraping Solutions for Data-Driven Companies

Understanding the potential of web scraping is one thing. Executing it at an enterprise level is another challenge entirely. It requires a blend of deep expertise, robust technology, and a partnership model grounded in trust. This is the DATAFOREST approach. We deliver fully managed, custom data-as-a-service.

15+ Years of Expertise in Data Engineering and AI

For over 15 years, the DATAFOREST team has been in the trenches, building sophisticated data pipelines and AI models for highly demanding global companies. We don't come from a world of simple scraping tools; our background is in architecting resilient, scalable, and compliant big data solutions. That depth of experience, which we share on our blog, means our clients get more than just data; they get reliable, structured intelligence.

No Templates — Only Fully Custom Solutions

Your business is not generic, so your data solution shouldn't be either. DATAFOREST doesn't do one-size-fits-all templates. We start with your specific business cases and architect a data pipeline tailored to your strategic goals. Whether you need to track ten websites or ten thousand, we build the exact solution you need, as seen in our past projects.

Who We Work With

We partner with C-level executives, VPs of Strategy, and Heads of Data at mid-market and enterprise companies who recognize that external data is a critical competitive asset. Our clients span a wide range of industries, from e-commerce and retail to finance and healthcare. They come to us when off-the-shelf tools fail and they need a reliable, scalable, and legally sound way to power their decisions with external data.

Your team stays in control — we bring the muscle

Our model is built to empower your team, not replace it. Your strategists and analysts define the intelligence they need. We provide the heavy-lifting infrastructure—the crawlers, the data-structuring engine, the delivery pipeline, and the 24/7 maintenance. We manage the complexity of the scraping process so your experts can focus on finding insights, not fighting for data.

Our edge: speed, flexibility, compliance

The DATAFOREST advantage boils down to three commitments:

- Speed: We deploy custom data feeds in a fraction of the time it would take to build them internally.

- Flexibility: Your strategy will evolve, and our service evolves with it. We can re-tool targets, add new data points, and scale on demand.

- Compliance: We operate with an unwavering focus on legal and ethical frameworks, giving our clients the confidence to act decisively on web data.

Meet our team to understand more about our approach.

Charting Your Course in the Data-Driven Future

In 2025, the strategic uses of web scraping are no longer optional—they are a core function of a competitive business. The capacity to see your market with perfect clarity, to anticipate competitor moves, and to hear the unfiltered voice of your customer is the ultimate unfair advantage.

The question for leaders is no longer if they should leverage public web data, but how to do it at scale, at speed, and with absolute confidence. The journey starts by identifying your most critical intelligence gaps and engaging a partner who can build the bridge to that data. The companies that move now to master this new domain will be the ones that write the rules for the next decade of business.

If you're ready to explore how a custom data solution can power your strategy, we invite you to get in touch with our experts.

Frequently Asked Questions (FAQ)

What business outcomes can web scraping directly influence in 2025?

Web scraping can directly influence several key business outcomes. These include increased revenue through dynamic pricing strategy and competitive intelligence, improved profit margins from supply chain optimization, higher customer retention via deep customer insights and personalization, and accelerated growth through automated lead generation. It also significantly mitigates risk by providing real-time monitoring of regulatory and compliance changes.

How does web scraping compare to traditional market research or BI tools?

Traditional market research is slow and provides a static, historical snapshot. Internal BI tools are limited to your own company's data. Web scraping is the connective tissue between the two. It provides real-time, granular, and comprehensive data from the external market—something neither traditional research nor internal BI can offer. It complements these tools by feeding them the live, external context they lack. For more on this, check out our guide to Market Research and Insight Analysis.

Can web scraping help us identify and act on market shifts faster than competitors?

Absolutely. This is one of the primary web scraping use cases. By setting up continuous monitoring of competitor websites, news outlets, and social media, you create an early warning system. You can detect a competitor's new product launch, a sudden shift in consumer sentiment, or emerging supply chain disruptions as soon as they surface online, allowing you to react strategically while your competitors are still waiting for their quarterly reports.

What are the most valuable web scraping use cases for marketplaces and e-commerce?

For e-commerce websites and marketplaces, the most valuable applications are real-time price monitoring, stock availability monitoring, and product assortment analysis. Scraping allows you to ensure your prices are always competitive without sacrificing margin. It also lets you analyze competitors' product catalogs to identify trending products and assortment gaps you can fill. A key example is detailed in this e-commerce scraping case study.

What’s the difference between one-time scraping and continuous data pipelines?

A one-time scrape is a single data extraction project, useful for a specific analysis, like a snapshot of the market for a presentation. A continuous data pipeline, which is what we specialize in at DATAFOREST, is a live, ongoing service. It constantly monitors sources, extracts new data as it appears, and feeds it directly into your systems. This allows you to track market trends and other metrics over time and to power real-time decision-making engines.

Can scraping be combined with machine learning to generate deeper insights?

Yes, this combination is the frontier of business intelligence. Web scraping provides the high-volume, high-velocity data that machine learning models need to thrive. You can use this combination for predictive pricing, advanced sentiment analysis, fraud detection, and identifying non-obvious correlations in the market. This synergy between scraping and AI is what turns raw data into true strategic foresight.
