
Streamlined Data Analytics

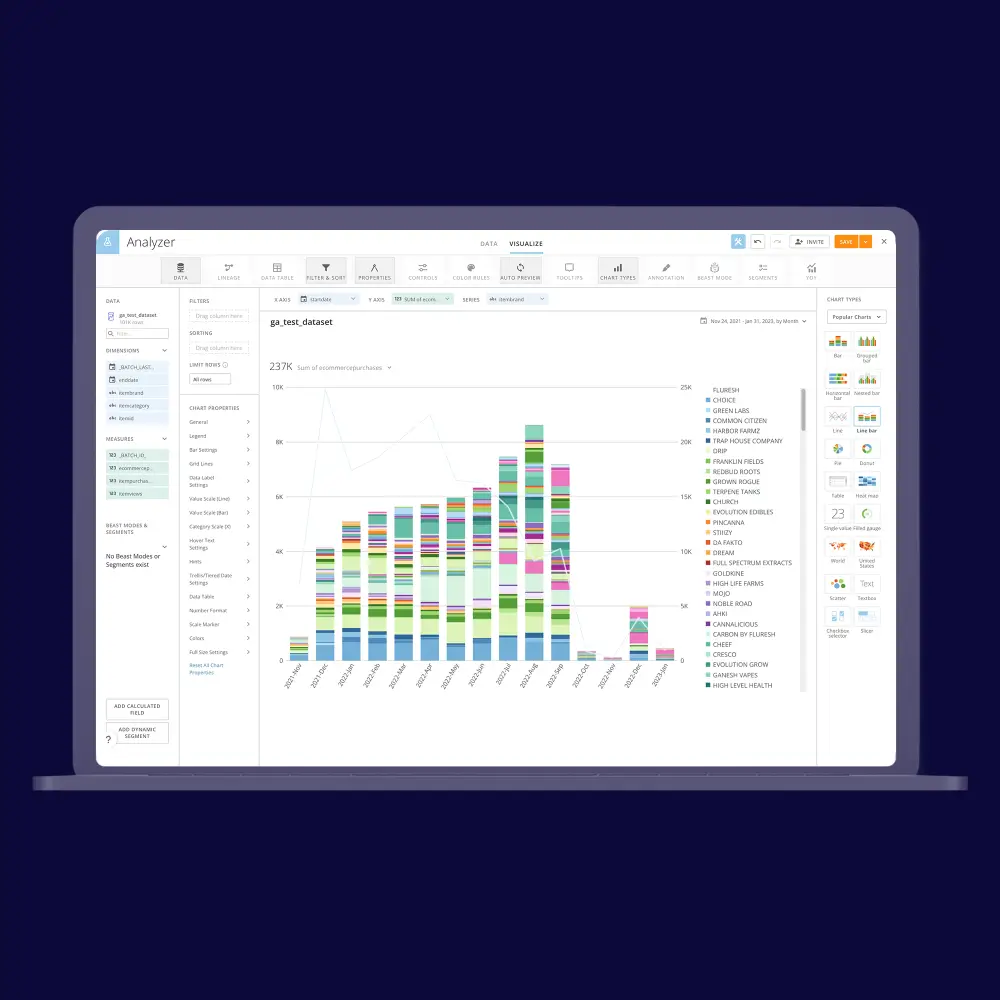

We helped a digital marketing agency consolidate and analyze data from multiple sources to generate actionable insights for its clients. Our delivery combined data warehousing, ETL tools, and APIs to streamline data integration. The result is an automated system that collects data, stores it in a data lake, and feeds BI tools for visualization with daily updates, giving the client insights that support its business decisions.
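As a rough illustration of the kind of daily load described above, here is a minimal sketch in Python. It is not the project's actual code: the two extractor functions, the record fields, the `marketing_metrics` table, and the use of the standard-library `sqlite3` module as a stand-in for the warehouse are all assumptions made for the example. A real warehouse would need its own upsert strategy in place of SQLite's `INSERT OR REPLACE`.

```python
import sqlite3


# Hypothetical extractors: each stands in for one source API call
# and returns raw records in that source's own shape.
def fetch_ads_platform() -> list[dict]:
    return [{"day": "2024-01-01", "campaign": "brand", "clicks": "120"}]


def fetch_web_analytics() -> list[dict]:
    return [{"date": "2024-01-01", "campaign_name": "brand", "sessions": 340}]


# Registry of sources; adding a source means adding one entry here
# and one branch in normalize().
SOURCES = {"ads": fetch_ads_platform, "web": fetch_web_analytics}


def normalize(source: str, record: dict) -> tuple:
    """Map a source-specific record onto one shared row shape:
    (source, day, campaign, metric, value)."""
    if source == "ads":
        return (source, record["day"], record["campaign"],
                "clicks", int(record["clicks"]))
    if source == "web":
        return (source, record["date"], record["campaign_name"],
                "sessions", int(record["sessions"]))
    raise ValueError(f"unknown source: {source}")


def run_daily_load(conn: sqlite3.Connection) -> int:
    """Extract from every source, normalize, and load into one table.
    Returns the number of rows written in this run."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS marketing_metrics (
               source TEXT, day TEXT, campaign TEXT,
               metric TEXT, value INTEGER,
               PRIMARY KEY (source, day, campaign, metric))"""
    )
    rows = [
        normalize(name, record)
        for name, fetch in SOURCES.items()
        for record in fetch()
    ]
    # SQLite-specific upsert: rerunning the same day overwrites rows
    # instead of duplicating them.
    conn.executemany(
        "INSERT OR REPLACE INTO marketing_metrics VALUES (?, ?, ?, ?, ?)",
        rows,
    )
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    written = run_daily_load(connection)
    print(f"rows written: {written}")
```

Normalizing every source into one shared row shape keeps the reporting queries simple, and keying rows on source, day, campaign, and metric makes a scheduled rerun safe to repeat.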

1.5 million DB entries

4+ integrated sources


About the client

LaFleur Marketing is a digital marketing partner that creates innovative, data-driven marketing strategies and assets for law firms, healthcare organizations, technology companies, and growing businesses.

Tech stack

Python

SQL

Redshift

AWS Lambda

CloudWatch

Their communication was great, and their ability to work within our time zone was very much appreciated.

How we provide data integration solutions

We’d love to hear from you

Share project details, like scope or challenges. We'll review and follow up with next steps.
