A hospital deployed an AI agent collaboration system that flagged patients at risk of sepsis, but clinicians quickly learned the model couldn't explain why it raised an alert, so they ignored it. The team rebuilt the system to surface specific vitals, lab trends, and timing context alongside predictions. That change turned the AI into a diagnostic partner that nurses and doctors could interrogate, override, or trust based on clinical judgment. Sepsis detection improved by 30%, but more importantly, physicians stopped working around the AI and started working with it because the system respected their expertise. We can consider your case; just schedule a call.

What Happens When AI Stops Following Orders and Starts Contributing?

Forbes stresses that human–AI team collaboration must be calibrated: humans remain crucial, especially in edge cases, oversight, and governance. AI is no longer just a replacement for manual work; it argues with people, fills gaps they can't see, and sometimes knows when to wait. The shift from automation to AI agent collaboration changes who owns each decision.

Automation That Doesn't Think

Early AI integration in workflows handled repetitive work. It executed predefined rules without deviation. If the task changed, the system failed or produced garbage. It had no context awareness and made no judgment calls. You pointed it at invoices or image labels, and it processed them until an issue arose. That was the deal: speed in exchange for rigidity. Humans still made every real decision.

The Rise of Autonomous AI Agent Collaboration

Autonomous agents don't wait for instructions—they act on goals you define once. They evaluate options, chain together steps, and execute without checking back. An autonomous AI agent might scrape competitor pricing, adjust catalog entries, and then trigger restock orders while you're offline. The problem is that agents optimize hard toward objectives, but they can't see business constraints or the edge cases you'd catch in seconds. You gain speed. Oversight becomes your new bottleneck.

Multi-Agent Collaboration

Multi-agent AI collaboration splits work across specialized roles that communicate. One watches pipeline health. Another decides when to retrain models. A third surfaces drift patterns to the data team. They share state, negotiate conflicts, and escalate only when they can't resolve them themselves. No single agent controls the outcome; instead, they defer based on who has better context. You stop managing individual tasks and start auditing the handoffs, tuning how agents prioritize and when they ask for help.

Why Does AI Need to Argue Back to Be Useful?

Collaborative AI doesn't just execute—it questions assumptions, fills gaps you didn't see, and sometimes refuses to act until you clarify intent. Most automation fails because it can't adapt to shifting contexts. True AI agent collaboration means the system pushes back when your request conflicts with reality.

What Collaborative AI Means

Collaborative AI doesn't just execute commands; it participates in decisions. The system interprets incomplete input, flags contradictions, and proposes alternatives when your request conflicts with available data. It maintains context across sessions, so it remembers what failed last time and why. You can override it, but it surfaces the trade-off before you commit. This arrangement works because the AI knows when it's operating outside reliable bounds and says so.

How Collaboration with AI Differs from Human Teamwork

Human teams negotiate. AI systems optimize within the bounds you set. Communication between people and AI works when those bounds are explicit and the AI knows when it's operating outside them.

Schedule a call to explore how a profitable collaborative AI solution can enhance your business operations.

What Changes When AI Stops Waiting for Instructions?

Enterprise software still works like a vending machine: you press buttons, and it delivers outputs. Autonomous workflows don't wait for the full spec. They fill gaps, catch errors mid-process, and route decisions to the person with the most relevant context.

Decisions That Happen Faster Because the System Fills Gaps

Decisions stall because someone's waiting on a report or a clarification. AI agent collaboration doesn't wait—it pulls the data, flags conflicts, and surfaces options with trade-offs attached. A pricing analyst doesn't need to request competitor benchmarks manually. The system monitors shifts, correlates them with margin targets, and queues recommendations when thresholds break. You still approve the change. But the legwork—pulling CSVs, checking historical patterns, calculating impact—happens without a ticket. Speed comes from eliminating the handoffs where context gets lost. Decisions move from weekly reviews to same-day responses because the prep work runs continuously in the background.
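To make the "queue recommendations when thresholds break" step concrete, here is a minimal Python sketch. The field names, the 5% price-gap threshold, and the 20% margin target are illustrative assumptions, not values from any real pricing system. Note that nothing is applied automatically: every item comes back as pending approval.

```python
def pricing_recommendations(products, min_margin=0.20, max_gap=0.05):
    """Queue a recommendation for each product whose price gap breaks the threshold.

    Nothing is applied here: every item is returned as pending approval.
    All thresholds and field names are hypothetical.
    """
    queue = []
    for p in products:
        # How far above the competitor we are, as a share of our price.
        gap = (p["price"] - p["competitor"]) / p["price"]
        if gap <= max_gap:
            continue  # within tolerance, no recommendation needed
        # Margin we would earn if we matched the competitor's price.
        margin_if_matched = (p["competitor"] - p["cost"]) / p["competitor"]
        if margin_if_matched >= min_margin:
            action = f"match competitor at {p['competitor']:.2f}"
        else:
            action = "hold price: matching would break the margin target"
        queue.append({
            "sku": p["sku"],
            "action": action,
            "margin_if_matched": round(margin_if_matched, 3),
            "status": "pending_approval",
        })
    return queue


products = [
    {"sku": "A", "cost": 60.0, "price": 100.0, "competitor": 90.0},
    {"sku": "B", "cost": 80.0, "price": 100.0, "competitor": 85.0},
    {"sku": "C", "cost": 50.0, "price": 100.0, "competitor": 98.0},
]
queue = pricing_recommendations(products)
for item in queue:
    print(item["sku"], "->", item["action"])
```

The trade-off travels with the recommendation (`margin_if_matched`), so the analyst approving the change sees the impact without pulling a separate report.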

Automation That Adjusts When Conditions Change

Traditional automation breaks when inputs drift. Intelligent workflow automation notices the drift and adapts—or escalates if adaptation would violate constraints. An invoice processing system doesn't just extract line items. It tracks vendor format changes, flags anomalies against historical patterns, and reroutes exceptions to humans only when confidence drops below a threshold you set. This matters at scale. You're not maintaining brittle rules that fail every time a supplier tweaks their PDF layout. The system learns what "normal" looks like for each vendor and adjusts extraction logic accordingly. Failures still happen. But they're routed intelligently instead of crashing the entire batch.
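The confidence-threshold routing described above can be sketched in a few lines of Python. The `Extraction` record, the vendor names, and the 0.85 default threshold are assumptions made for illustration; a real extractor would supply its own confidence score.

```python
from dataclasses import dataclass


@dataclass
class Extraction:
    vendor: str
    total: float
    confidence: float  # extractor's self-reported score in [0, 1] (assumed)


def route(extraction: Extraction, threshold: float = 0.85) -> str:
    """Return 'auto' to post the invoice, 'human' to queue it for review."""
    return "auto" if extraction.confidence >= threshold else "human"


def process_batch(batch, threshold=0.85):
    """Split a batch into auto-processed and human-review queues.

    A low-confidence invoice goes to review; it does not stop the batch.
    """
    queues = {"auto": [], "human": []}
    for item in batch:
        queues[route(item, threshold)].append(item)
    return queues


batch = [
    Extraction("Acme", 1200.0, 0.97),
    Extraction("Globex", 310.5, 0.62),  # e.g. the vendor changed its PDF layout
    Extraction("Initech", 89.9, 0.91),
]
queues = process_batch(batch)
print([e.vendor for e in queues["human"]])  # ['Globex']
```

The threshold is the control you own: raising it sends more invoices to people, lowering it trusts the extractor more.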

Operations That Scale Without Proportional Headcount

Growth usually means hiring more people to handle volume. Collaborative AI breaks that relationship. A support team no longer has to grow linearly with ticket volume, because the system triages, drafts responses, and escalates only the edge cases that require human judgment. Customer success doesn't need twice the headcount when the customer count doubles: AI-driven workflows monitor health scores, flag churn signals, and queue outreach based on behavioral shifts. You still need skilled people. But they're spending time on high-leverage problems, not repetitive triage. The operational leverage comes from agents handling the structured work and routing ambiguity to people who can actually resolve it. Headcount grows with complexity, not volume.

Product Iteration That Moves Faster Because Feedback Loops Close Earlier

Some product teams ship features, then wait weeks for enough signal to evaluate them. Collaborative AI development closes the feedback loop during development. An ML product manager doesn't manually aggregate user sentiment from support tickets. The system clusters complaints by feature area, correlates them with usage drop-offs, and surfaces patterns while the sprint is still active. Engineers don't wait for QA to file bugs. Individual agents watch session replays, flag crashes or abandoned flows, and link them to recent commits. You catch mistakes before they compound. Design iterations happen in days instead of quarters because the signal that usually takes weeks to aggregate is surfaced in real time. Faster cycles mean fewer wrong bets that waste entire sprints.

What Runs When AI Agents Work Together?

Collaborative AI operates on AI agent frameworks that chain tool calls, AI orchestration layers that route messages between agents, and data infrastructure that remains stable even when multiple agents query the same table. Most of the complexity lives in making those pieces talk without creating race conditions or losing context mid-workflow.

Frameworks That Let AI Agents Chain Actions Without Hardcoding

AI agent collaboration frameworks handle the scaffolding—parsing goals, selecting tools, retrying failures, and maintaining state across steps.

- LangChain wraps LLMs with memory and tool connectors, eliminating the need to write boilerplate for every API call or database query.

- AutoGPT autonomously breaks high-level goals into subtasks without requiring you to define each step: "analyze competitor pricing" becomes scraping, parsing, and comparison.

- MetaGPT assigns role-based sub-agents within a workflow: one acts as architect, another as QA, simulating how teams divide work.

For enterprises, these frameworks matter because they standardize how agents talk to internal systems: your CRM, warehouse, and ticketing tools. You can configure which tools an agent can access instead of building custom integrations per workflow. The trade-off is that abstraction layers introduce latency and make debugging harder when a chain fails after three steps.
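Configuring which tools an agent can access can be as simple as an allowlist checked before every call. The sketch below is framework-agnostic Python and does not reproduce the API of LangChain, AutoGPT, or MetaGPT; the agent names, tool names, and stub tool functions are all hypothetical.

```python
class ToolNotPermitted(Exception):
    """Raised when an agent calls a tool outside its allowlist."""


# Stub tools standing in for real CRM and ticketing integrations.
TOOLS = {
    "crm_lookup": lambda customer_id: {"customer_id": customer_id, "tier": "gold"},
    "create_ticket": lambda summary: {"ticket": summary, "status": "open"},
}

# Per-agent allowlist: the single place where access is configured.
AGENT_TOOL_ACCESS = {
    "support-agent": {"crm_lookup", "create_ticket"},
    "reporting-agent": {"crm_lookup"},
}


def call_tool(agent: str, tool: str, *args):
    """Run a tool only if the agent's allowlist includes it."""
    if tool not in AGENT_TOOL_ACCESS.get(agent, set()):
        raise ToolNotPermitted(f"{agent} may not call {tool}")
    return TOOLS[tool](*args)


print(call_tool("reporting-agent", "crm_lookup", "c-1"))
try:
    call_tool("reporting-agent", "create_ticket", "refund request")
except ToolNotPermitted as err:
    print("blocked:", err)
```

Unknown agents get an empty allowlist, so the default is deny.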

Routing Logic That Decides Which Agent Handles What

AI orchestration in enterprises manages how agents talk to each other. One agent can't just call another. A coordinator reads intent, checks who's available, and routes based on capability. This matters when agents specialize: one writes SQL, another pulls documents, and a third validates compliance. Without orchestration, they duplicate work or get stuck waiting on each other. The layer also handles retries. If the first agent times out, the system reroutes or escalates to a person. When an agent hands off mid-task, the next one inherits context: what's been tried, what broke, what constraints still apply.
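A minimal sketch of such a coordinator, in Python, might look like the following. It routes by capability, falls through to the next capable agent on a timeout, records each attempt in a shared context, and escalates to a person when no agent succeeds. The class, the agent names, and the `AgentTimeout` exception are hypothetical, and the timeout is simulated rather than measured.

```python
from typing import Callable


class AgentTimeout(Exception):
    """Raised by an agent that cannot finish in time (simulated here)."""


class Coordinator:
    def __init__(self) -> None:
        # capability name -> ordered list of (agent_name, handler)
        self._registry = {}

    def register(self, capability: str, name: str,
                 handler: Callable[[dict], str]) -> None:
        self._registry.setdefault(capability, []).append((name, handler))

    def dispatch(self, capability: str, context: dict) -> str:
        """Try each capable agent in turn; escalate to a person if all fail."""
        for name, handler in self._registry.get(capability, []):
            try:
                result = handler(context)
                context["history"].append((name, "ok"))
                return result
            except AgentTimeout:
                # Record the failure so the next agent inherits what was tried.
                context["history"].append((name, "timeout"))
        context["history"].append(("human", "escalated"))
        return "escalated to human"


def slow_sql_agent(context: dict) -> str:
    raise AgentTimeout()


def backup_sql_agent(context: dict) -> str:
    tried = [name for name, _ in context["history"]]
    return f"query drafted after failed attempts by {tried}"


coordinator = Coordinator()
coordinator.register("write_sql", "sql-primary", slow_sql_agent)
coordinator.register("write_sql", "sql-backup", backup_sql_agent)

context = {"task": "monthly revenue by region", "history": []}
result = coordinator.dispatch("write_sql", context)
print(result)
print(context["history"])

# No agent is registered for this capability, so it goes to a person.
unrouted = coordinator.dispatch("validate_compliance", {"task": "x", "history": []})
print(unrouted)
```

The `history` list is the inherited context: the backup agent can see that the primary already timed out before it starts.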

Data Systems That Handle Agent Load Without Falling Over

Agents query differently from dashboards. They repeatedly hit the same tables, write intermediate state, and read from a potentially stale cache. This creates lock contention and race conditions that human-driven queries rarely cause. To isolate agent traffic from production, use separate replicas, staged writes, and event streams that agents consume rather than direct database hits. Lineage matters more here than anywhere else. When an agent bases a decision on stale data, you need to trace which pipeline fed it and whether that pipeline is still running correctly. Most teams bolt agent workloads onto their existing warehouse, only to find they can't figure out why latency spikes. Treat agent-based systems as a separate workload class with dedicated compute, rate limits, and full observability. Log every query, track every dataset touched, and alert when an agent starts reading tables it shouldn't see.
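The rate limiting, query logging, and table alerting recommended above can be combined in one gate that sits in front of the warehouse. The Python sketch below assumes one gate per agent and a sliding-window limit; the class name, table names, and limits are hypothetical, and the clock is injectable so the behavior can be checked without waiting.

```python
import time
from collections import deque


class AgentQueryGate:
    """Admits or rejects one agent's queries before they reach the warehouse."""

    def __init__(self, allowed_tables, max_queries, window_seconds,
                 clock=time.monotonic):
        self.allowed_tables = set(allowed_tables)
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self.clock = clock
        self.recent = deque()  # timestamps of admitted queries
        self.audit_log = []    # every attempt, admitted or not
        self.alerts = []

    def check(self, agent: str, table: str) -> bool:
        now = self.clock()
        # Drop timestamps that have fallen out of the sliding window.
        while self.recent and now - self.recent[0] >= self.window_seconds:
            self.recent.popleft()

        if table not in self.allowed_tables:
            decision = "denied"
            self.alerts.append(f"{agent} tried to read {table}")
        elif len(self.recent) >= self.max_queries:
            decision = "rate_limited"
        else:
            decision = "allowed"
            self.recent.append(now)

        self.audit_log.append(
            {"agent": agent, "table": table, "decision": decision, "at": now}
        )
        return decision == "allowed"


fake_now = [0.0]  # controllable clock for the demo
gate = AgentQueryGate({"orders", "inventory"}, max_queries=2,
                      window_seconds=60, clock=lambda: fake_now[0])

results = [
    gate.check("pricing-agent", "orders"),
    gate.check("pricing-agent", "orders"),
    gate.check("pricing-agent", "orders"),   # third query inside the window
    gate.check("pricing-agent", "payroll"),  # table outside the allowlist
]
print(results)      # [True, True, False, False]
print(gate.alerts)  # ['pricing-agent tried to read payroll']
```

Every attempt lands in `audit_log`, including the rejected ones, which is what makes later lineage questions answerable.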

Why Do Teams Stumble with AI Projects?

Engineers continue to hit the same wall with AI coordination. Not because the math is hard—most teams just can't feed their models clean data. Your developers are already aware of this, but management needs to hear the raw truth.

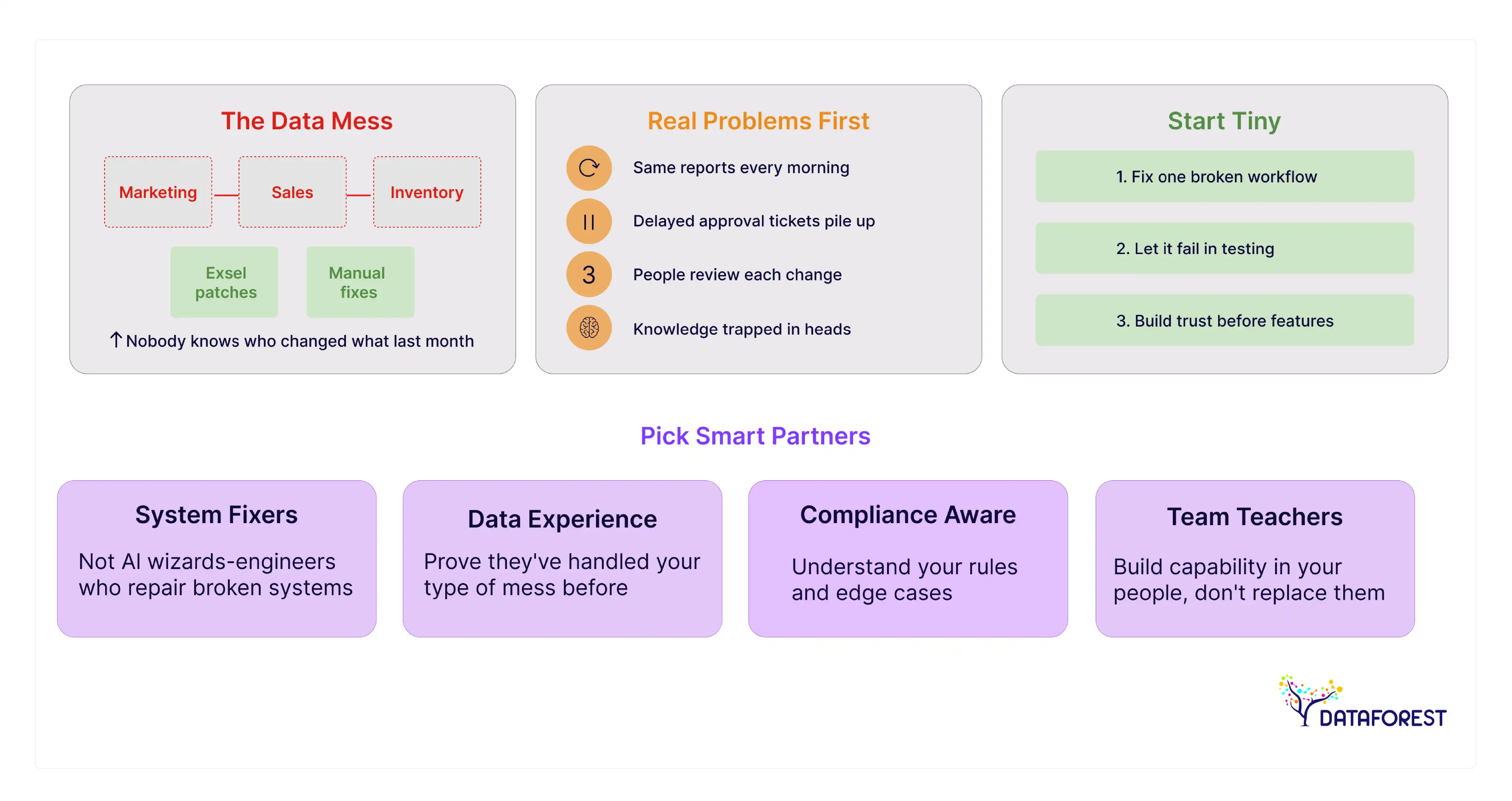

The Data Mess

Look at your dashboards. Different teams track inventory their own way. Marketing data doesn't match sales numbers. Someone changed a database field last month—nobody knows who. Excel sheets fill the gaps between systems. AI agent collaboration cannot fix this without structured inputs.

Real Problems First

Watch where work actually breaks down. Is that analyst running the same reports every morning? Pure waste. Support continues to receive tickets about delayed approvals. Three people must review each configuration change. Knowledge remains confined to the senior staff's minds. AI agent collaboration will only succeed if these root problems are addressed first.

Start Tiny

Fix one broken workflow. Get it stable. Let it fail in testing—you'll learn more. Your team needs time to trust the AI agent collaboration system. Skip the fancy features until the basics work.

Pick Smart Partners

Don't hire AI wizards; find engineers who fix broken systems. Make them prove they've handled your type of data before. Check if they understand your compliance rules. Watch how they react to edge cases. Good partners teach your team instead of replacing them.

What Will Human–AI Hybrid Teams Achieve?

Digital transformation with AI stalls on tooling, not intent. Teams fragment when ownership, feedback, and failure modes aren’t clear. Define roles early, and the AI agent collaboration system stops being a curiosity and becomes predictable work.

From Coexistence to Co-Creation

Humans keep judgment and context. AI agent collaboration handles repetitive inference and draft work.

- Make explicit handoffs: who curates prompts, who validates output, and who owns final decisions.

- Expect model drift when usage changes; instrument drift detection and automate alerts.

- Keep a simple feedback loop that converts corrections into labeled examples.

- Optimize for latency and throughput separately. Low-latency tasks require different architectures than bulk reprocessing does.

- Lock down data lineage so auditors can trace outputs to inputs and model versions.

Outcome: repeatable cycles where AI agent collaboration increases throughput without degrading accountability.
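As one way to instrument the drift detection mentioned in the list above, the Python sketch below compares a recent window of a monitored metric against a baseline and raises a flag when the recent mean sits too many standard errors away. This is a deliberately simple stand-in for tests such as the population stability index or Kolmogorov-Smirnov; the metric values and the threshold of 3 are illustrative assumptions.

```python
from statistics import mean, stdev


def drift_alert(baseline, recent, z_threshold=3.0):
    """Return (drifted, z) for a recent window compared with a baseline.

    z is the distance between the two means, measured in standard errors
    estimated from the baseline's spread. The baseline must vary, otherwise
    its standard deviation is zero and the ratio is undefined.
    """
    standard_error = stdev(baseline) / len(recent) ** 0.5
    z = abs(mean(recent) - mean(baseline)) / standard_error
    return z > z_threshold, z


# Hypothetical daily acceptance rates for an agent's drafted responses.
baseline = [0.80, 0.82, 0.79, 0.81, 0.78, 0.80, 0.83, 0.79]
steady_week = [0.80, 0.81, 0.79, 0.80]
shifted_week = [0.60, 0.62, 0.58, 0.61]

steady_drifted, _ = drift_alert(baseline, steady_week)
shifted_drifted, _ = drift_alert(baseline, shifted_week)
print(steady_drifted, shifted_drifted)  # False True
```

In practice the `True` result would feed the automated alert, and the corrections people make afterwards become the labeled examples the feedback loop needs.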

Towards Collective Intelligence

Collective intelligence with AI treats each AI agent as another team member with defined capabilities.

- Map skills to artefacts: what the model can propose, what humans must approve, and what requires escalation.

- Run controlled experiments to measure contribution value per role and per task.

- Use scorecards that combine human judgment and model confidence to route work.

- Invest in AI collaboration tools for teams: common context stores, conversation logs, and state checkpoints.

- Address failure modes like feedback loops, reward hacking, and over-reliance on stale models.

Outcome: aligned teams that improve decision speed while preserving traceability and recoverability.
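A scorecard that combines human judgment and model confidence to route work, as suggested in the list above, might be sketched like this in Python. The equal weighting, the 0.6 and 0.85 cut-offs, and the three lanes are assumptions chosen for illustration; a real scorecard would be tuned from the controlled experiments.

```python
def route_work(model_confidence: float, reviewer_agreement: float, impact: str) -> str:
    """Pick a lane: 'auto', 'approve' (human sign-off), or 'escalate'.

    reviewer_agreement is the share of recent outputs for this task type
    that human reviewers accepted unchanged, in [0, 1]. Weights and
    cut-offs are hypothetical.
    """
    score = 0.5 * model_confidence + 0.5 * reviewer_agreement
    if score < 0.6:
        return "escalate"  # neither the model nor its track record is trusted
    if impact == "high" or score < 0.85:
        return "approve"   # a human must sign off before anything ships
    return "auto"


print(route_work(0.95, 0.90, "low"))   # auto
print(route_work(0.95, 0.90, "high"))  # approve
print(route_work(0.80, 0.70, "low"))   # approve
print(route_work(0.50, 0.60, "low"))   # escalate
```

High-impact work never reaches the `auto` lane regardless of score, which keeps the approval rule explicit rather than buried in a weight.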

DATAFOREST as a Fix for AI Agent Collaboration Headaches

DATAFOREST can anchor AI agent collaboration in clean, well-governed data pipelines, eliminating noisy inputs, schema drift, and trigger inconsistencies. We build interpretability layers (logging, feature provenance, alert triggers) so humans can audit agent outputs before commitment. We modularize agents by domain and compose them with orchestrators, avoiding monolithic agents that overreach. We integrate human feedback loops (correction, rewards, overrides) to let agents learn and adapt under control. The result is human-agent systems that remain robust at scale, with predictable latency, traceable lineage, and safe drift control. Please complete the form to discuss your AI agent collaboration project.

Questions About AI Team Collaboration

What is collaborative AI?

Collaborative AI shares control over decisions instead of just following orders. The AI agent collaboration system interprets incomplete input, flags conflicts, and proposes alternatives when your request doesn't match the data. It maintains context across sessions and tells you when it's operating outside reliable bounds.

Which industries are currently leading in multi-agent AI team collaboration?

Finance, AdTech, and e-commerce lead because they have high transaction volumes and clear KPIs. These sectors already run automated workflows that collaborating agents can plug into. Regulated environments within them push for strong observability and controls.

How do AI team collaboration initiatives integrate with existing digital transformation initiatives?

Start by mapping current workflows and control points. Integrate agents incrementally where they reduce toil or speed decisions. Keep the transformation roadmap current and align agent SLAs with business SLAs.

What is the role of data infrastructure in enabling effective AI agent collaboration?

Data infrastructure supplies the single source of truth agents need. It enforces lineage, schema contracts, and access controls that prevent silent drift. Without it, agents amplify inconsistencies and create audit gaps.
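A schema contract can be enforced with a small check that runs before an agent reads a record. The Python sketch below uses a hypothetical order contract; the field names and types are assumptions, and a production system would more likely rely on a schema registry or a validation library.

```python
# Hypothetical contract: field name -> required Python type.
CONTRACT = {"order_id": str, "quantity": int, "unit_price": float}


def contract_violations(record: dict, contract: dict = CONTRACT) -> list[str]:
    """List every way a record breaks the contract; empty means it conforms."""
    problems = []
    for field, expected in contract.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            actual = type(record[field]).__name__
            problems.append(f"{field}: expected {expected.__name__}, got {actual}")
    # Extra fields often signal that someone changed the upstream schema.
    for field in sorted(record.keys() - contract.keys()):
        problems.append(f"unexpected field: {field}")
    return problems


good = {"order_id": "A1", "quantity": 3, "unit_price": 9.5}
drifted = {"order_id": "A1", "quantity": "3", "unit_price": 9.5, "qty_v2": 3}

print(contract_violations(good))     # []
print(contract_violations(drifted))
```

Rejecting or quarantining the drifted record at this point is what keeps the change from propagating silently into agent decisions.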

What governance models work best for managing autonomous collaborative AI systems in enterprises?

A risk-tiered governance model is pragmatic: stricter controls for high-impact agents. Combine automated guardrails with human-in-the-loop escalation for ambiguous outcomes. Embed continuous monitoring and clear ownership for each agent.

What are the security implications of allowing collaborative AI agents to share data autonomously?

Autonomous sharing increases the blast radius for breaches and data leakage. You must enforce least privilege, encryption, and signed provenance on every exchange. Also monitor transfer patterns for anomalous or out-of-policy flows.
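Least privilege and signed provenance can both be applied at the moment one agent shares data with another. The Python sketch below filters a payload down to the fields the receiver is granted, then signs it with HMAC-SHA256 from the standard library. The grant table, agent names, and hard-coded demo key are assumptions; a real deployment would load keys from a secrets manager and add encryption in transit.

```python
import hashlib
import hmac
import json

# Hypothetical grants: receiver -> fields it is allowed to see.
GRANTS = {"billing-agent": {"invoice_id", "amount"}}


def _canonical(sender: str, payload: dict) -> bytes:
    # Sorted keys make the serialized bytes stable across processes.
    return json.dumps({"sender": sender, "payload": payload}, sort_keys=True).encode()


def share(payload: dict, sender: str, receiver: str, key: bytes) -> dict:
    """Strip fields the receiver is not granted, then sign what remains."""
    allowed = GRANTS.get(receiver, set())
    visible = {k: v for k, v in payload.items() if k in allowed}
    signature = hmac.new(key, _canonical(sender, visible), hashlib.sha256).hexdigest()
    return {"sender": sender, "payload": visible, "signature": signature}


def verify(message: dict, key: bytes) -> bool:
    """Recompute the signature and compare in constant time."""
    expected = hmac.new(
        key, _canonical(message["sender"], message["payload"]), hashlib.sha256
    ).hexdigest()
    return hmac.compare_digest(expected, message["signature"])


key = b"demo-key-not-for-production"
record = {"invoice_id": "INV-7", "amount": 120.0, "customer_email": "a@example.com"}

message = share(record, "crm-agent", "billing-agent", key)
print(sorted(message["payload"]))  # ['amount', 'invoice_id']
print(verify(message, key))        # True

tampered = {**message, "payload": {**message["payload"], "amount": 1.0}}
print(verify(tampered, key))       # False
```

The signature covers the sender as well as the payload, so a receiver can tell both who sent the data and that it was not altered on the way.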

Can collaborative AI reduce operational costs without significant infrastructure changes?

Yes, when you target high-toil tasks and use lightweight integration points like APIs and event streams. Gains stem from reduced human editing and faster throughput, rather than from wholesale replatforming. Still, expect modest investments in observability and access controls.
