Hire Expert Databricks Developers for Uninterrupted Data Processing

Suppose you hire Databricks developers, and the data processing that used to take eight hours finishes in forty minutes. It means analysts can answer questions the same day instead of the same week. Cloud bills drop because queries stop scanning entire datasets when they only need recent records. DATAFOREST has spent 18 years building data systems, so our engineers already know what breaks.

01

Dedicated Databricks Engineer (Full-Time)

- Works exclusively on your pipelines, Delta tables, lineage, and compliance.

- Job rewriting, CDC data processing, management, and monitoring.

- Monthly subscription with flexible scaling when you hire Databricks developers this way.

02

Team Extension / Squad Model (2–5 Engineers + TL/PM)

- Comprehensive migration from SQL to the Databricks analytics platform.

- Rewriting legacy scripts into automated tasks.

- Large-scale Databricks lakehouse architecture: a multi-layered approach to creating a single source of truth.

- Implementation of dashboards, CDC data ingestion, and Databricks cost optimization.

- Ideal for complex, undocumented environments with multiple data sources when companies hire Databricks developers as a complete squad.

03

Fixed-Scope Databricks Project Delivery

- Predictable timelines and budgets for completion.

- Guaranteed deployment of the Medallion architecture.

- Production-ready tasks, Databricks pipeline development, monitoring, and dashboards.

- Suitable for clearly scoped data migration or transformation stages when you hire Databricks developers under fixed terms.

04

POC Development (2–4 Weeks)

- Databricks feasibility check before migration.

- Creating the first bronze → silver → gold data flow.

- Demonstrating computing resource savings and lineage transparency.

- A low-risk starting point for enterprise migration when you hire Databricks developers for proof-of-concept work.

01

100+ software engineers

A deep bench means you don’t have to wait three months to staff a project when you hire Databricks developers from us. When one person leaves or gets sick, someone else takes over without having to start from scratch.

02

18+ years of data engineering experience

Problems recur across industries. This long experience means the Databricks developers you hire have seen most failure modes and know which shortcuts create technical debt and which save weeks.

03

92% customer retention rate

Service providers lose half of their customers every year. This number speaks to fewer surprises, realistic deadlines, and code that doesn’t break two weeks after handover when clients hire Databricks developers here.

04

37+ AI solutions implemented

AI projects fail more often than they succeed. Implementing so many solutions means understanding where models add value and where simpler logic works better, and how to deploy them without constant babysitting once you hire Databricks developers with this track record.

Databricks Engineering Solutions

Your data architecture should be a single, high-performance Lakehouse platform. We offer comprehensive Databricks consulting services to modernize your data platform, automate complex processes, and unlock real-time analytics and machine learning capabilities when you hire Databricks developers from DATAFOREST.

What a Databricks Engineer Fixes in Real Projects

Data operations slow down when legacy systems break under real-world workloads. A Databricks engineer steps in, stabilizes the stack, and takes control of the platform.

Legacy data systems that don’t scale

- Move workloads to the Lakehouse with a clear separation of batch and streaming jobs.

- Use autoscaling clusters that match real-world workloads.

- Break long tasks into smaller chunks that complete on time.

Manual SQL scripts and a lack of automation

- Move logic into notebooks or workflows with version control.

- Add tests for each step.

- Set up scheduled tasks with alerts on failed runs.

Lack of data governance, lineage, and tracking

- Enable Unity Catalog for cataloging and access rules.

- Track every table and task with built-in lineage.

- Add quality checks on reads and writes.

Compliance, security, and access risks

- Lock down sensitive tables with table-level rules.

- Mask fields containing personal or business data.

- Log every read and write for audits.

Multiple unrelated data sources without a single platform

- Establish connectors for SaaS tools, applications, and repositories.

- Place all sources in a single Lakehouse zone.

- Standardize schemas so teams can read data in the same way.

Unreliable pipelines and undocumented logic

- Refactor tasks with clear steps and up-to-date libraries.

- Add edge-case tests.

- Track task execution with alerts tied to real errors.

Inefficient architecture increases compute costs

- Choose small cluster types for light workloads and autoscale for heavy workloads.

- Cache only what teams need.

- Remove obsolete tables and unused storage.

Business intelligence, AI, and advanced analytics don’t scale

- Create clean tables for dashboards.

- Create curated sets for machine learning and mastering.

- Add checks that block bad records before they spread.

↓ Reduce pipeline failures and data downtime by 50-70%

Engineers rebuild legacy SQL jobs into reliable Databricks ETL development pipelines. These pipelines retry on errors, send alerts, and never fail silently.
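The retry-and-alert behavior described above can be sketched in a few lines of plain Python. This is an illustration only, not DATAFOREST's implementation: `run_with_retries`, `flaky_step`, and the `alert` callback are hypothetical names, and in a real workspace the job scheduler's own retry and notification settings would normally do this work.

```python
import time
from typing import Callable, List


def run_with_retries(task: Callable[[], str], max_attempts: int = 3,
                     base_delay_s: float = 0.0,
                     alert: Callable[[str], None] = print) -> str:
    """Run task, retrying on failure; alert and re-raise if every attempt fails."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as exc:
            if attempt == max_attempts:
                # Final failure: notify someone, then surface the error.
                alert(f"task failed after {attempt} attempts: {exc}")
                raise
            # Exponential backoff between attempts (0 s by default for the demo).
            time.sleep(base_delay_s * 2 ** (attempt - 1))
    raise ValueError("max_attempts must be at least 1")


# Hypothetical flaky step that succeeds on its third call.
calls = {"n": 0}


def flaky_step() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("transient error")
    return "loaded"


alerts: List[str] = []
result = run_with_retries(flaky_step, alert=alerts.append)
```

The key design choice is that the last failure both triggers an alert and re-raises the exception, so a broken run is visible to the scheduler instead of being swallowed.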

.svg)

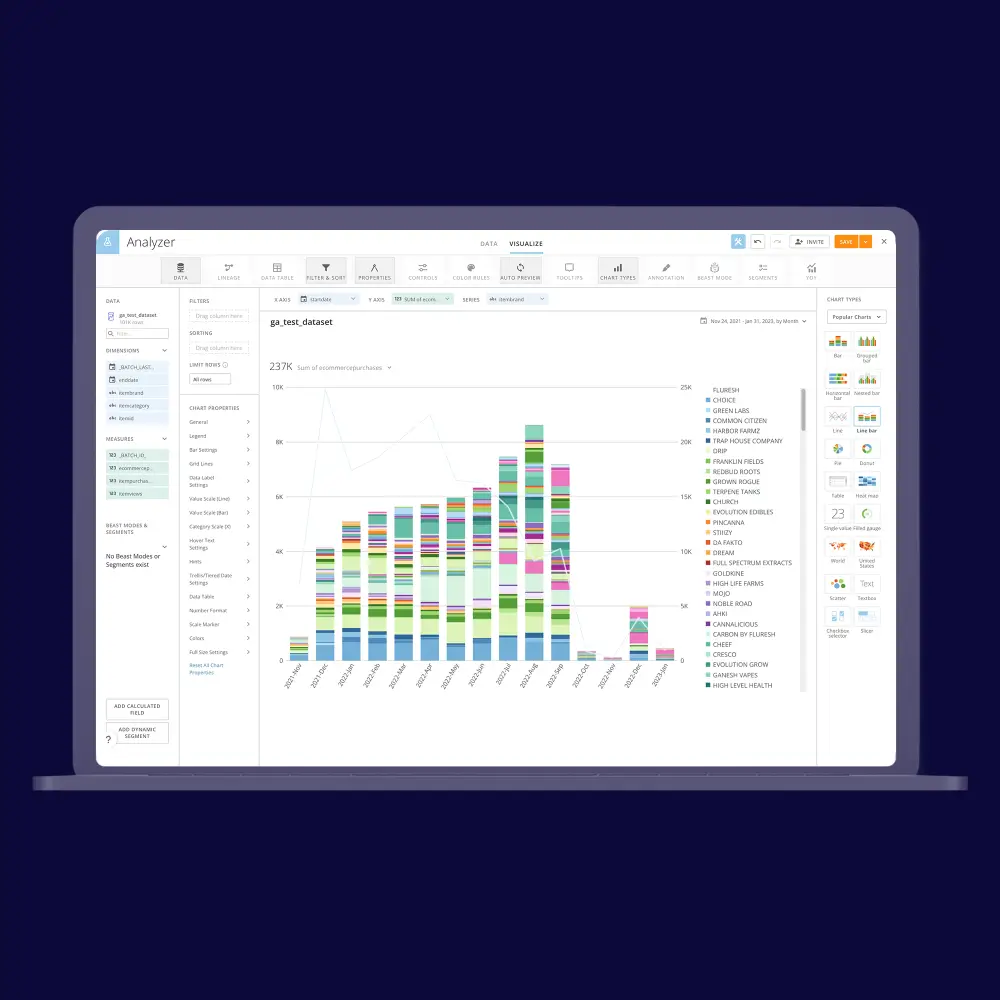

10-20x faster analytics and dashboard refreshes

Teams optimize Delta tables and add intelligent caching. Dashboards that once took hours now refresh in minutes or seconds.

Unified data from 10+ disparate systems in a single Lakehouse

Engineers collect data from SaaS tools, databases, and APIs in one place. They organize it into bronze, silver, and gold tiers for clean, scalable operations.
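As a rough illustration of the bronze, silver, and gold tiers, the sketch below uses plain Python lists in place of Delta tables. The sample rows, field names, and the `to_silver` and `to_gold` helpers are all assumptions made for the example; a real pipeline would run the same steps as Spark jobs over tables.

```python
from typing import Dict, List, Tuple

# Bronze: raw rows exactly as ingested (hypothetical sample data).
bronze: List[dict] = [
    {"order_id": "1", "region": "eu", "amount": "10.50"},
    {"order_id": "2", "region": "EU", "amount": "4.50"},
    {"order_id": None, "region": "us", "amount": "7.00"},   # missing key
    {"order_id": "3", "region": "us", "amount": "oops"},    # unparseable amount
    {"order_id": "4", "region": "us", "amount": "2.00"},
]


def to_silver(rows: List[dict]) -> Tuple[List[dict], List[dict]]:
    """Validate and standardize rows; send bad rows to a quarantine list."""
    clean, rejected = [], []
    for row in rows:
        try:
            if not row["order_id"]:
                raise ValueError("missing order_id")
            clean.append({
                "order_id": row["order_id"],
                "region": (row["region"] or "").lower(),
                "amount": float(row["amount"]),
            })
        except (ValueError, TypeError):
            rejected.append(row)
    return clean, rejected


def to_gold(rows: List[dict]) -> Dict[str, float]:
    """Aggregate clean rows into a reporting-ready total per region."""
    totals: Dict[str, float] = {}
    for row in rows:
        totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
    return totals


silver, quarantine = to_silver(bronze)
gold = to_gold(silver)
print(gold)  # {'eu': 15.0, 'us': 2.0}
```

Keeping rejected rows in a quarantine list rather than dropping them means bad records never reach the gold layer while remaining available for inspection.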

Trusted lineage, governance, and full visibility into data quality

Automated auditing records every change and validates data quality. Teams know exactly where data is coming from and trust what they’re using.

Faster insights with self-service SQL and BI connectivity

Business users get fast SQL warehouses and easy-to-understand data models. They create their own reports without waiting for engineers to build them.

Steps To Hire Expert Databricks Developers

We follow a strict path to find the right data experts for your team.

01

Define Project Needs

We review your data setup to understand your needs. We then list the exact skills required for the job.

02

Verify Credentials

We pick candidates with proven Databricks certifications. We check their past work to confirm they have real experience.

03

Test Technical Skills

Candidates solve coding problems during a live session. They explain their choices to demonstrate their knowledge of the tools.

04

Begin Work

We set up accounts and permissions for the new hire. The engineer joins your team and starts the first task.

05

Track Progress

You receive a report every week on the work completed. We also check cloud usage to keep your budget low.

Questions to Ask Before You Hire a Databricks Engineer

How do we know whether Databricks is the right fit for our data goals?

We audit your data goals and current systems. This shows whether Databricks meets your scalability requirements. We only recommend the platform if it solves real problems for you.

Can your engineers work with our existing Databricks configuration, or only with new projects?

Our engineers often join existing environments. We audit your current setup and data structure. Then we increase speed and add features to your active projects.

Do you handle migrations from legacy systems (Hadoop, Azure SQL, Redshift, Snowflake, on-premises systems)?

We manage migrations from many systems. We move data from Hadoop, Redshift, and on-premises servers. We plan the migrations to prevent downtime and protect your data.

Can you integrate Databricks with our existing tools, SaaS systems, and cloud services?

We build custom connectors for your current tools. We connect Databricks to your SaaS applications and cloud services. Your data flows between all the systems you need.

Do you provide end-to-end data and machine learning/LLM support on Databricks?

We manage the entire process from data ingestion to deployment. We build pipelines, configure feature stores, and train models. We manage all engineering and machine learning tasks.

Can your team build and automate pipelines, dashboards, and business logic workflows in Databricks?

We design and automate data pipelines. We build dashboards using Power BI or Tableau. We turn complex business rules into automated workflows on the platform.

Do you offer ongoing support for monitoring, cost optimization, and incremental improvements?

We provide operational support after the project is complete. You get monitoring, troubleshooting, and cost reduction. We also deliver updates and features when you need them.

How experienced are your engineers with Databricks best practices (Delta Lake, Unity Catalog, Medallion Architecture, MLflow)?

Our engineers follow the official Databricks best practices in their work. We build all projects using the Delta Lake format and the Medallion Architecture pattern. We rely on Unity Catalog for data governance and use MLflow for model tracking.

How do you manage documentation, adoption, and knowledge transfer to our team?

We clearly document all code, architecture, and deployment procedures. Our team conducts hands-on workshops for your internal staff. This process transfers the full breadth of operational knowledge to your team members.

Can you help us improve governance, security, and compliance (HIPAA, SOC2, GDPR)?

When you hire Databricks developers, we set strict data governance standards with Unity Catalog. We configure security settings according to specific regulations such as HIPAA or GDPR. This improves your compliance posture and protects sensitive information.

How quickly can your Databricks engineer get started, and how long does onboarding take?

We aim to have an engineer assigned within one to two weeks of signing the contract. The initial onboarding process typically takes one day after granting access. The engineer can start delivering value very quickly after that first day.

Can you estimate the timeline and cost of our migration or modernization project?

We provide a clear estimate after completing a short research phase. During this phase, we analyze your data volume, complexity, and specific requirements to determine the best approach. Following this analysis, clients who hire Databricks developers receive a detailed quote with a fixed timeline and cost.

How do you control costs and prevent overspending on Databricks compute resources?

We manage cluster settings to avoid unnecessary resource usage. We implement autoscaling policies to use compute power only when needed. This approach controls your Databricks costs and prevents overspending.
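For readers who want to see what such settings look like, here is a minimal sketch of an all-purpose cluster definition with autoscaling and auto-termination, built as a Python dictionary. The `autoscale`, `min_workers`, `max_workers`, and `autotermination_minutes` fields follow the Databricks Clusters API; the `build_cluster_spec` helper, its validation rules, and the cluster name, runtime version, and node type are placeholders assumed for the example.

```python
import json
from typing import Any, Dict


def build_cluster_spec(name: str, min_workers: int, max_workers: int,
                       idle_minutes: int) -> Dict[str, Any]:
    """Return a cluster definition that scales with load and shuts down when idle."""
    # These bounds are this sketch's own guardrails, not API requirements.
    if not 1 <= min_workers <= max_workers:
        raise ValueError("expected 1 <= min_workers <= max_workers")
    if idle_minutes <= 0:
        raise ValueError("idle_minutes must be positive")
    return {
        "cluster_name": name,
        "spark_version": "15.4.x-scala2.12",  # placeholder runtime version
        "node_type_id": "i3.xlarge",          # placeholder node type
        # Workers are added under load and released when demand drops.
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        # An idle cluster terminates itself after this many minutes.
        "autotermination_minutes": idle_minutes,
    }


spec = build_cluster_spec("nightly-etl", min_workers=1, max_workers=4,
                          idle_minutes=20)
print(json.dumps(spec, indent=2))
```

Capping `max_workers` bounds the worst-case spend per cluster, while the idle timeout stops forgotten clusters from billing overnight.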

How do you ensure data quality, reliability, and pipeline observability?

If you hire Databricks developers, we implement data quality checks at every stage of pipeline development. We design the architecture for high reliability and fault tolerance. We integrate logging and monitoring tools to maintain high pipeline observability.

Let’s discuss your project

Share project details, like scope or challenges. We'll review and follow up with next steps.
