How a U.S. Data Intelligence Firm Automated Google Maps Data Collection

We built a custom Google Maps scraping solution that allows the client, a U.S.-based data intelligence and marketing advisory firm, to independently collect publicly available business data across the U.S. The system performs targeted company searches, identifies relevant listings and URLs, and processes the data through a structured pipeline for cleaning, normalization, and delivery. This approach gave the client full control over data freshness, structure, and scalability.

60–70% business coverage achieved across targeted U.S. regions and categories


IDM is a U.S.-based data intelligence and marketing advisory firm with over 20 years of experience in developing account-based marketing strategies. The company works with large enterprises, including Fortune 500 organizations, delivering custom data solutions that support advanced analytics, precise market segmentation, and data-driven marketing decision-making.

Tech stack: Aiohttp, Lxml & Regex, Geopy, PostgreSQL, Loguru

THE CHALLENGE

Building a Scalable and Cost-Efficient Way to Enrich U.S. Business Data with Reliable, Up-to-Date Attributes

The client relied on third-party data providers but received incomplete business records with only a limited set of attributes. To gain more control and data depth, they decided to collect and manage the data themselves. Manual enrichment proved slow and expensive, while the business needed regularly updated, detailed datasets at scale to support marketing insights and consulting recommendations for U.S. companies.

THE SOLUTION

Automated Google Maps Data Collection and Enrichment Pipeline

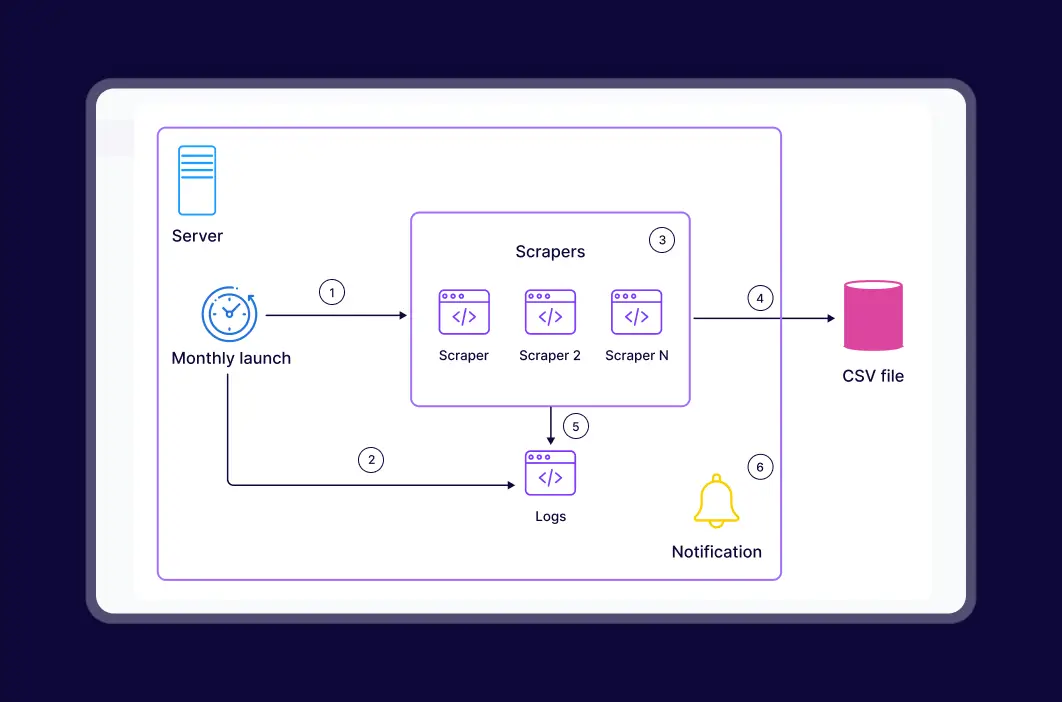

We built an automated Google Maps–based data collection pipeline designed to operate at national scale.

The solution performs targeted company name searches via Google Maps, identifying and collecting relevant business listings and URLs across the United States. This stage focuses on accurate discovery and coverage, deliberately excluding the parsing or storage of extended Google attributes to ensure speed, stability, and controlled data scope.
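To make the discovery stage more concrete, here is a minimal sketch of the two pieces it implies: building a search URL for one company name in one location, and pulling listing URLs out of a fetched page. The URL follows Google's documented Maps URLs search format (`api=1&query=...`). The assumption that listing links appear in the page as absolute `https://www.google.com/maps/place/...` strings, and the helper names `build_search_url` and `extract_place_urls`, are illustrative and not taken from the client's system. Fetching (for example with aiohttp) is deliberately left out.

```python
import re
from urllib.parse import urlencode

# Google's documented "Maps URLs" search endpoint; `query` is free text.
MAPS_SEARCH_BASE = "https://www.google.com/maps/search/"

# Assumption: listing links in the fetched page appear as absolute
# https://www.google.com/maps/place/... strings. Real markup may differ.
PLACE_URL_RE = re.compile(r"https://www\.google\.com/maps/place/[^\s\"'<>]+")


def build_search_url(company: str, city: str, state: str) -> str:
    """Build a Maps search URL for one company name in one U.S. location."""
    query = f"{company} {city}, {state}"
    return MAPS_SEARCH_BASE + "?" + urlencode({"api": "1", "query": query})


def extract_place_urls(html: str) -> list[str]:
    """Return listing URLs found in the page, deduplicated in first-seen order."""
    return list(dict.fromkeys(PLACE_URL_RE.findall(html)))


if __name__ == "__main__":
    print(build_search_url("Acme Plumbing", "Austin", "TX"))
```

In a national run, the same two calls would be repeated over every company-name and location pair, with only the collected URLs passed on to the processing stage, which matches the stage's deliberately narrow scope.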


Scalable Scraping and Data Processing Architecture

The system uses an automated scraper with geo-based search, category targeting, and anti-ban mechanisms. Raw data is cleaned, deduplicated, and standardized before being stored in PostgreSQL, ensuring high data quality and consistent business attributes at scale.
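The cleaning, standardization, and deduplication step can be illustrated with a small sketch. It assumes a hypothetical record shape (`name`, `address`, `phone`) and one plausible matching rule: two records describe the same business when their case-insensitive names match and their phone numbers match, or their addresses match when no phone was scraped. The client's actual normalization rules are not described in this case study.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Business:
    """Hypothetical cleaned record; the real schema is not given in the case study."""
    name: str
    address: str
    phone: str


def normalize_text(raw: str) -> str:
    """Collapse runs of whitespace and trim the ends."""
    return re.sub(r"\s+", " ", raw).strip()


def normalize_phone(raw: str) -> str:
    """Keep digits only and drop a leading U.S. country code."""
    digits = re.sub(r"\D", "", raw)
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]
    return digits


def clean(raw: dict) -> Business:
    """Standardize one raw scraped record; missing fields become empty strings."""
    return Business(
        name=normalize_text(raw.get("name") or ""),
        address=normalize_text(raw.get("address") or ""),
        phone=normalize_phone(raw.get("phone") or ""),
    )


def deduplicate(records: list[dict]) -> list[Business]:
    """Clean records and keep the first occurrence of each business."""
    seen: dict[tuple[str, str], Business] = {}
    for raw in records:
        business = clean(raw)
        if not business.name:
            continue  # a listing without a name cannot be matched reliably
        # Assumed matching rule: same name (case-insensitive) plus same
        # phone, or same address when no phone was scraped.
        key = (business.name.casefold(), business.phone or business.address.casefold())
        seen.setdefault(key, business)
    return list(seen.values())
```

Normalizing before building the key is what lets differently formatted copies of one listing collapse into a single row. In PostgreSQL the same rule could also be enforced at write time with a unique index on the key columns and `INSERT ... ON CONFLICT DO NOTHING`.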


Automated Delivery, Updates, and Client-Ready Data Exports

The pipeline includes automated CSV exports and scheduled monthly updates, eliminating manual effort. End-to-end testing, monitoring, and documentation keep operation reliable and results transparent, making the pipeline a cost-efficient alternative to traditional data providers.
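A minimal sketch of the export side, using Python's standard `csv` module. The column list, the monthly file-naming scheme, and the function names are hypothetical; in the real pipeline the rows would come from a PostgreSQL query, while here any iterable of dicts stands in for them.

```python
import csv
import io
from datetime import date
from pathlib import Path
from typing import Iterable, Mapping, TextIO

# Hypothetical export schema; the client's real columns are not listed in the case study.
EXPORT_COLUMNS = ["name", "address", "phone", "category", "updated_at"]


def monthly_export_path(directory: Path, run_date: date) -> Path:
    """One file per monthly run, e.g. exports/businesses_2024-05.csv."""
    return directory / f"businesses_{run_date:%Y-%m}.csv"


def export_csv(rows: Iterable[Mapping[str, object]], out: TextIO) -> int:
    """Write rows as CSV with a fixed header; return the number of data rows."""
    # Missing keys are written as empty cells; keys not in the schema are ignored.
    writer = csv.DictWriter(out, fieldnames=EXPORT_COLUMNS, extrasaction="ignore")
    writer.writeheader()
    count = 0
    for row in rows:
        writer.writerow(row)
        count += 1
    return count


if __name__ == "__main__":
    # Write to an in-memory buffer here; a real run would open
    # monthly_export_path(...) with newline="" as the csv module expects.
    buf = io.StringIO()
    export_csv([{"name": "Acme Plumbing", "phone": "5125550100"}], buf)
    print(monthly_export_path(Path("exports"), date(2024, 5, 1)))
    print(buf.getvalue())
```

A scheduler would then run the export once a month; for example, the cron expression `0 3 1 * *` fires at 03:00 on the first day of every month. Using `extrasaction="ignore"` lets internal columns in a query result be dropped silently instead of raising an error.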

THE RESULT

From Costly Data Providers to 60–70% Market Coverage with a Self-Updating Business Intelligence Pipeline

The automated pipeline enabled the client to independently collect and refresh U.S. business data at scale, achieving 60–70% coverage of targeted businesses via Google Maps. Compared to third-party providers, the solution significantly increased data depth and freshness while eliminating manual verification costs. Monthly automated updates replaced slow, expensive enrichment workflows, allowing IDM to deliver more accurate segmentation, faster marketing insights, and higher-quality consulting recommendations from consistently up-to-date datasets.

