A mid-size fintech firm had fraud detection locked in batch processing—12-hour latency made it useless. They integrated GPT-4 via the OpenAI real-time API into their transaction pipeline, cutting detection lag to milliseconds without hiring ML engineers. Within six weeks, they reduced false positives by 40% and shipped to production; the alternative path (building in-house) would have taken eighteen months. Book a call to stay ahead in AI technology.

How Does Enterprise AI Move from Prototype to Measurable Revenue?

McKinsey states that employees are already prepared to use enterprise AI, but organizational leadership and integration of AI strategy lag behind, making executive readiness and operational adoption the real bottlenecks.

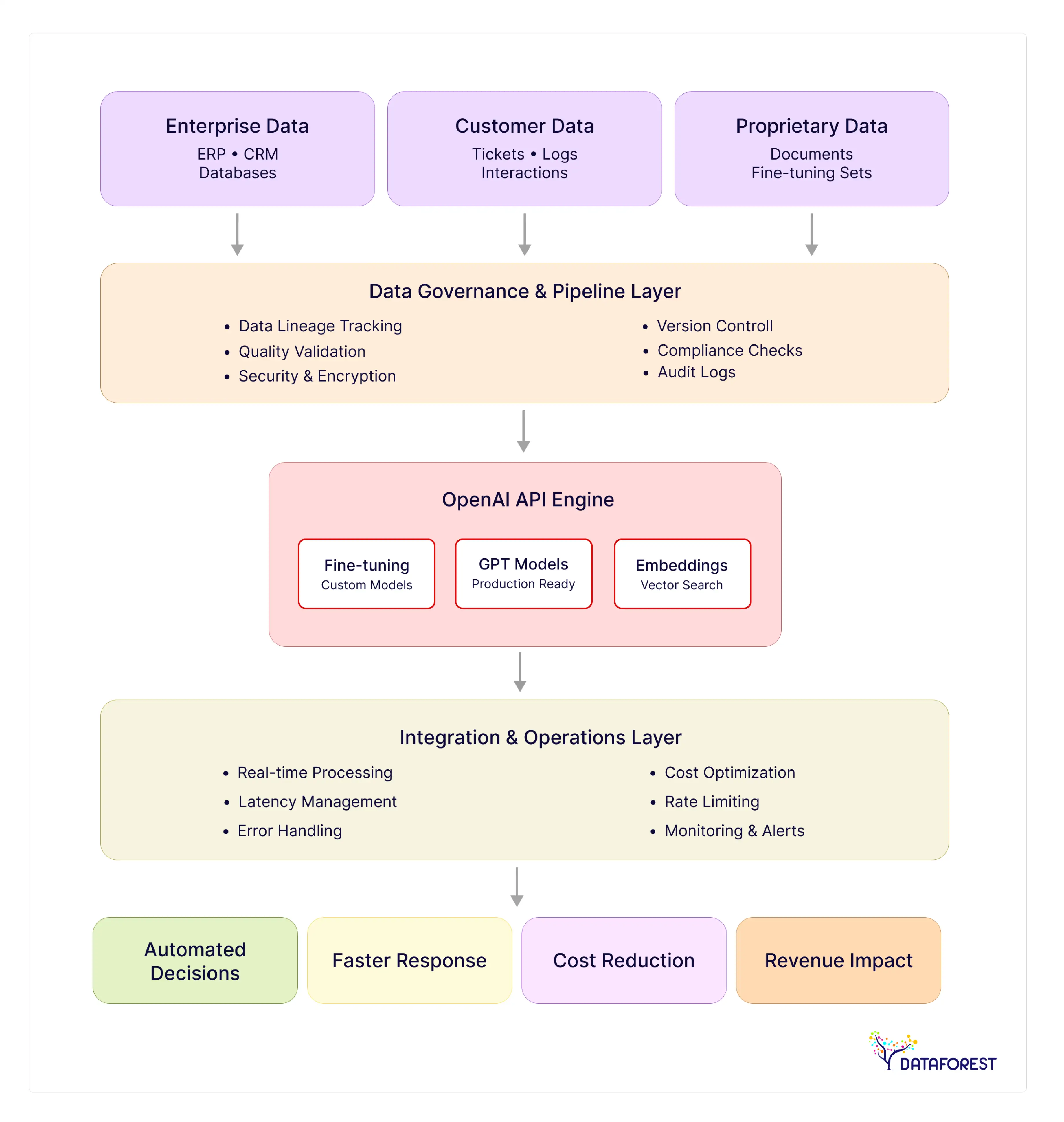

Enterprises start with a pilot. A team spins up a GPT integration, gets decent results in a sandbox, then hits a wall: production demands latency guarantees, audit trails, and cost controls. The OpenAI integration becomes the bridge because it doesn't require cloud infrastructure you can't yet justify. You can measure business impact and ROI before committing to custom model training or MLOps investments, which is a fundamental shift. Proof-of-concept stops being a dead-end phase and becomes a pathway to revenue. You deploy incrementally, track metrics that matter to finance, and scale only when the numbers justify it. The friction between lab and production drops dramatically.

Before API access to OpenAI models, enterprises either built models in-house (slow, expensive, risky) or licensed heavyweight SaaS platforms (locked into vendor logic). The API split the difference. You get production-grade language model capabilities without owning the infrastructure or hiring a hundred machine learning PhDs. OpenAI integration happens in weeks, not quarters. Your data engineers own the data pipeline and data architecture logic, which is where reliability actually lives. OpenAI handles model hosting, fine-tuning infrastructure, and model updates; you focus on data quality and business logic. That's the game shift: control stays local, complexity moves upstream.

What Separates Enterprise AI from Hobby Projects?

The OpenAI API integration is more than just a model endpoint. It enables enterprise automation and intelligent automation at production scale without melting your cloud infrastructure. It must withstand audits, regulations, and your security team's skepticism. It needs to work on your data, not generic datasets.

Handling Scale Without Breaking

Your traffic spikes. Black Friday hits. Fraud detection runs in real time across millions of transactions. The API integration scales because OpenAI manages the underlying compute, so you don't manage capacity planning for the model itself. Rate limits depend on your usage tier, so confirm they cover an enterprise workload before launch. Latency should stay predictable under load; get SLAs in writing. Your pipeline doesn't degrade when demand doubles. You measure token usage per request, per team, per application. Cost becomes a predictable input to your business intelligence model.
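Per-team and per-application cost tracking can be sketched in a few lines. This is a minimal illustration, not a billing system: the prices in `PRICE_PER_1K`, the `Usage` record, and the team names are hypothetical, and in practice the token counts would come from the usage figures the API returns with each response.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; replace with your contract rates.
PRICE_PER_1K = {"input": 0.01, "output": 0.03}


@dataclass(frozen=True)
class Usage:
    """One API request, tagged with the team and application that made it."""
    team: str
    application: str
    input_tokens: int
    output_tokens: int


def request_cost(usage: Usage, prices: dict = PRICE_PER_1K) -> float:
    """Cost of a single request in USD."""
    return (usage.input_tokens * prices["input"]
            + usage.output_tokens * prices["output"]) / 1000


def cost_by(records: list, key: str) -> dict:
    """Sum request costs grouped by an attribute such as 'team' or 'application'."""
    totals = defaultdict(float)
    for record in records:
        totals[getattr(record, key)] += request_cost(record)
    return dict(totals)


records = [
    Usage("fraud", "scoring", input_tokens=1200, output_tokens=200),
    Usage("fraud", "scoring", input_tokens=800, output_tokens=100),
    Usage("support", "routing", input_tokens=500, output_tokens=50),
]
team_totals = cost_by(records, "team")
print(team_totals)
```

Grouping by `"application"` instead of `"team"` gives the per-application view from the same records.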

Staying Inside the Guardrails

Enterprise buyers have compliance officers. HIPAA, SOC 2, GDPR: these aren't optional. OpenAI maintains certifications that your legal team actually recognizes. Documented data governance and retention policies give you practices you can audit. OpenAI API calls can be logged cleanly for forensic review. Fine-tuning keeps your proprietary data inside your tenancy, not pooled with competitors. Your security team gets specific answers:

- Where does data live?

- How long does it stay?

- Who can access it?

The OpenAI API answers all of them.

Making It Work on Your Specifics

Generic models drift on your domain. Fine-tuning models lets you inject your data patterns, terminology, and edge cases. You control what trains on what. Output improves because the model learns your actual constraints. Prompt engineering gets you 70% of the way; fine-tuning closes the remaining gap. You don't need to retrain from scratch. You don't need a research team. Your data engineers build the training pipeline—the model adapts. This is the heart of enterprise innovation.

Where Does Enterprise AI Solve Real Problems?

AI pilots fail because they solve the wrong thing. You need to know where the OpenAI API integration actually moves the needle: where it cuts labor costs, where it stops customers from leaving, where it unlocks decisions stuck in manual review. The OpenAI API use cases below aren't aspirational. They're proven, scalable AI solutions that are already working.

Automating Knowledge Work

Your team spends four hours daily digging through documents, extracting data, and flagging exceptions. The OpenAI integration supports document automation: it reads contracts, pulls structured data, and flags risk patterns. Your humans move from data extraction to judgment calls. Contract review accelerates by 10x. Your legal team focuses on negotiation, not pattern matching. Cost per review drops measurably.

Fixing Customer Friction

Support tickets pile up. Response time kills retention. The OpenAI API integration handles tier-1 routing instantly—classifies intent, pulls relevant docs, and suggests responses. Combined with chatbot development and workflow automation, your support team handles actual problems, not FAQ repetition. First-response satisfaction climbs. Escalation time compresses. You measure this in retention curves and revenue per customer.

Turning Data into Action

Your data warehouse has answers nobody accesses. SQL queries sit unwritten because half your team doesn't speak SQL. The OpenAI API integration translates English questions into working queries powered by natural language processing and data analytics. Your ops team stops waiting on analytics bottlenecks. Decision cycles shrink from weeks to hours. Someone asks a question, receives an answer, and then acts on it using real-time insights.
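Model-generated SQL should be checked before it touches a warehouse. The sketch below is one conservative way to do that, assuming a SQLite database for illustration: `is_safe_select` is a hypothetical text check (not a SQL parser), the `orders` table is made up, and the `generated` string stands in for whatever the model returned.

```python
import sqlite3


def is_safe_select(sql: str) -> bool:
    """Accept a single SELECT statement only; reject anything else the model produced."""
    statement = sql.strip().rstrip(";").strip()
    return statement.lower().startswith("select") and ";" not in statement


def run_generated_sql(conn: sqlite3.Connection, sql: str) -> list:
    """Run model-generated SQL only after it passes the read-only check."""
    if not is_safe_select(sql):
        raise ValueError(f"refusing to run non-SELECT statement: {sql!r}")
    return conn.execute(sql).fetchall()


# Illustrative in-memory data standing in for the warehouse.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)",
                 [("EU", 120.0), ("EU", 80.0), ("US", 50.0)])
conn.commit()
conn.execute("PRAGMA query_only = ON")  # defence in depth: block writes at the engine

# Pretend the model returned this for "What is total revenue per region?"
generated = "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region;"
rows = run_generated_sql(conn, generated)
print(rows)
```

The check is deliberately strict: it also rejects valid read-only queries such as those starting with `WITH`, which is usually an acceptable trade-off for a first version.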

Building Content at Scale

Your marketing team writes three product descriptions daily. The OpenAI API integration generates fifty variations using generative AI. Personalization scales without hiring copywriters—your team edits and ships, not creates from zero. A/B test cycles accelerate. Content goes live in days. Attribution shows which approaches convert.

What Changes When Enterprises Deploy AI at Scale?

Speed matters, but only if you don't break things. Cost drops, but mostly because your team stops doing repetitive work—not because some algorithm got clever. Better decisions come from having answers faster, not from AI making choices for you.

Shipping Faster Means Fewer Project Delays

- Six weeks to production instead of eighteen months eliminates the "waiting for infrastructure" tax.

- Your data team deploys OpenAI API integration logic, not model training pipelines.

- You measure real business impact before committing capital.

- Iteration cycles compress. You fix problems in production, not in planning meetings.

- Competitors that are still building in-house are still hiring ML engineers.

Labor Hours Move from Repetitive Work to Judgment

- Contract review shifts from extraction to negotiation.

- Your support team handles actual problems.

- Analysts write strategy, not SQL boilerplate.

- Payroll doesn't spike—existing headcount does different work.

- You measure this in throughput per person, not in headcount reduction.

Decisions Happen When Data Matters

- OpenAI real-time API integration for fraud detection catches patterns your batch process missed yesterday.

- Customer support answers questions in seconds instead of escalation queues.

- Operations teams ask questions and get answers, rather than waiting for analytics bottlenecks.

- You compete on reaction speed, not on who has the biggest data warehouse.

- Stale data stops killing your margin.

What Stops Enterprises from Using OpenAI API Integration?

You deploy the OpenAI API integration and immediately hit friction nobody mentions in demos. Your compliance team wants audit trails you don't have. Your engineers can't explain why the model behaved the way it did on a specific input. Your data pipeline works until it doesn't, and nobody knows what broke first.

Data Governance and Model Transparency

Challenge: Your compliance team asks a simple question: Where does our data live after fine-tuning? You can't answer it cleanly. The OpenAI API integration logs requests, but the logs don't connect to your audit trails. When the model behaves unexpectedly in production, you see the metric change but can't trace it back to what input caused it. Model outputs shift over time—drift happens invisibly. Your legal team wants proof that proprietary data doesn't leak into shared infrastructure. Without lineage tracking, you're operating blind.

Solution: Build explicit data pipelines that capture every step. Log each OpenAI API integration call with input, output, latency, and cost. Version your prompts like code. Store fine-tuning datasets in your own infrastructure, not someone else's cloud. Track model performance metrics per use case so you detect drift before it breaks your business logic. When something fails, reconstruct what happened instead of guessing. Make lineage visible: this input came from source X, passed through transformation Y, and returned result Z.
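The logging and lineage idea can be sketched as a thin wrapper around the model call. This is an illustration under assumptions: `PROMPT_TEMPLATE`, `audited_call`, the `payments.kafka` source name, and `fake_model` are all hypothetical, and the model is passed in as a plain function so the example runs offline. Cost is omitted here; it could be added from the token counts of each response.

```python
import hashlib
import json
import time
import uuid
from typing import Callable

# Hypothetical prompt; in practice this lives in version control next to the code.
PROMPT_TEMPLATE = "Classify this transaction as FRAUD or OK: {payload}"


def prompt_version(template: str) -> str:
    """Short content hash so every log line names the exact prompt text used."""
    return hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]


def audited_call(call_model: Callable[[str], str], payload: str,
                 source: str, sink: list) -> str:
    """Call the model and append one audit record (input, output, latency, lineage)."""
    prompt = PROMPT_TEMPLATE.format(payload=payload)
    started = time.perf_counter()
    output = call_model(prompt)
    record = {
        "request_id": str(uuid.uuid4()),
        "timestamp": time.time(),
        "source": source,  # where the input came from
        "prompt_version": prompt_version(PROMPT_TEMPLATE),
        "input": prompt,
        "output": output,
        "latency_ms": round((time.perf_counter() - started) * 1000, 3),
    }
    sink.append(json.dumps(record))  # swap the list for your log pipeline
    return output


def fake_model(prompt: str) -> str:
    """Stand-in for the real API client so the sketch runs offline."""
    return "OK"


audit_log = []
result = audited_call(fake_model, "card=****1234 amount=42.00",
                      "payments.kafka", audit_log)
print(audit_log[0])
```

Because the prompt version is a hash of the template text, any edit to the prompt shows up in the logs as a new version without anyone remembering to bump a number.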

Tools:

- Data observability platforms track the whole pipeline.

- Logging infrastructure captures request-response cycles with context.

- Version control systems store prompt iterations.

- Cost allocation tools map tokens back to business units.

- Monitoring dashboards surface OpenAI API integration response times and failure patterns.

Result: Your compliance team gets audit trails they can defend. Engineers debug production issues instead of guessing. Model behavior becomes explicable—not mysterious. You stop operating in darkness.

Integration Complexity

Challenge: The OpenAI API works in isolation. Drop it into your production pipeline, and latency expectations break. Your ETL jobs time out while waiting for responses. Rate limits don't match your batch windows. Error handling cascades: an OpenAI API failure floods your retry logic, which triggers another rate limit hit and breaks downstream processes.

Solution: Design retry logic and circuit breakers before you ship. Decouple your systems from OpenAI API integration availability using message queues. Separate batch workflows from real-time flows—they need different timeout windows and error strategies. Cache aggressively. Test latency under load, not demo conditions.
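Retry logic and a circuit breaker can be combined in a small amount of code. The sketch below is one possible shape, not a production library: `CircuitBreaker`, `call_with_retry`, the thresholds, and the `flaky` function are illustrative names and values, and the upstream call is simulated so the example runs without network access.

```python
import random
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker refuses to call the upstream API."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after_s=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                raise CircuitOpenError("upstream marked unhealthy; failing fast")
            self.opened_at = None  # half-open: allow one trial call
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # a success closes the circuit again
        return result


def call_with_retry(breaker, fn, max_attempts=4, base_delay_s=0.5, sleep=time.sleep):
    """Retry with exponential backoff and jitter; stop at once if the breaker is open."""
    for attempt in range(max_attempts):
        try:
            return breaker.call(fn)
        except CircuitOpenError:
            raise
        except Exception:
            if attempt == max_attempts - 1:
                raise
            sleep(base_delay_s * (2 ** attempt) + random.uniform(0, 0.1))


attempts = {"n": 0}


def flaky():
    """Simulated upstream call that times out twice, then succeeds."""
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise TimeoutError("simulated upstream timeout")
    return "ok"


breaker = CircuitBreaker(failure_threshold=5)
outcome = call_with_retry(breaker, flaky, sleep=lambda s: None)
print(outcome, attempts["n"])
```

The breaker is what keeps retries from piling onto a rate limit: once it opens, callers fail fast instead of sending more requests to an API that is already rejecting them.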

Tools:

- Message queues buffer requests.

- Caching layers absorb repeated queries.

- Load testing frameworks expose breaking points before they are encountered in production.

- Monitoring tracks API response time and failure patterns per endpoint.

Result: Your pipeline stays stable when the OpenAI API hiccups. Integration doesn't require heroic engineering. Cost remains predictable because you're not blindly retrying against rate limits.

Talent and Change Management

Challenge: Your data team knows SQL and Spark, not prompt engineering. ML engineers built models in TensorFlow—now they're debugging why a prompt returns inconsistent output. Nobody owns the OpenAI API integration. It's too simple for ML, too strange for data teams. Skills atrophy fast without sustained use.

Solution: Assign clear ownership. Data engineers own the pipeline for OpenAI API integration. Someone dedicated handles prompt versioning and evaluation. Run workshops that make prompt engineering concrete. Start small—document what actually works. Measure output quality so the team sees impact, not just shipping.
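Prompt evaluation can start as a small regression check that runs before a prompt change ships. The sketch below assumes a hand-labelled golden set; `GOLDEN_CASES`, `evaluate`, `check_regression`, and `stub_classifier` are hypothetical names, and the stub replaces the real prompt-plus-model call so the example runs offline.

```python
from typing import Callable

# Hypothetical golden set: inputs with the label a reviewer signed off on.
GOLDEN_CASES = [
    ("Where is my refund?", "billing"),
    ("The app crashes on login", "technical"),
    ("Cancel my subscription", "billing"),
]


def evaluate(classify: Callable[[str], str], cases=GOLDEN_CASES) -> float:
    """Fraction of golden cases where the output matches the expected label."""
    hits = sum(1 for text, expected in cases
               if classify(text).strip().lower() == expected)
    return hits / len(cases)


def check_regression(candidate_score: float, baseline_score: float,
                     tolerance: float = 0.0) -> bool:
    """True when the candidate prompt scores at least baseline minus tolerance."""
    return candidate_score >= baseline_score - tolerance


def stub_classifier(text: str) -> str:
    """Offline stand-in for 'prompt + model'; replace with a real call."""
    return "technical" if "crash" in text.lower() else "billing"


score = evaluate(stub_classifier)
print(score, check_regression(score, baseline_score=1.0))
```

Storing the baseline score alongside the prompt in version control lets a failed check block a prompt change the same way a failed unit test blocks a code change.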

Tools:

- Prompt evaluation frameworks catch regressions.

- Internal documentation captures learned patterns.

- Testing harnesses detect prompt drift over time.

- Version control stores prompt iterations alongside code.

Result: Your team owns the system now. Skills stick because people ship real work. Onboarding becomes faster. You stop treating AI integration like a one-time project.

How Do You Scale AI Without Breaking Your Pipeline?

Teams pilot successfully, then hit a wall. The prototype worked because the data was clean and the load was light. Production is different—schema drifts, volume spikes, your assumptions crack. You need a foundation that survives those pressures.

If you are interested in OpenAI API integration, please arrange a call.

Where Does Enterprise AI Go from Here?

Models keep getting better, but that's no longer the bottleneck. The fundamental constraint is operational: how you run these systems reliably at scale. Enterprises still treat AI as a project, not infrastructure. That ends when retraining becomes routine. Cost optimization happens next. Vendors will compete on latency and per-token efficiency, not raw capability. Your competitive edge shifts from "we have an AI model" to "our pipeline stays stable under load." The next three years belong to whoever builds observability and risk mitigation first, to the teams that know what their model learned and why it failed.

Scalable AI with OpenAI + DATAFOREST

DATAFOREST sits at the crossroads of data engineering, generative AI, and software development. We develop custom enterprise automation modules (such as copilots, knowledge-base agents, and RAG systems) that leverage OpenAI integration for reasoning, summarization, and knowledge management. With this setup, companies can focus solely on domain logic and workflows, trusting DATAFOREST to orchestrate OpenAI API integration, model selection, and AI strategy optimization. The team further wraps critical production features (monitoring, logging, error handling, fallback logic, rate limiting, and cost controls) around OpenAI integration calls to ensure reliability and compliance management. Beyond technical integration, DATAFOREST consults on use-case prioritization and digital transformation strategy, guiding clients to pick high-leverage domains and deploy incrementally. In doing so, enterprises get scalable AI solutions built on Azure OpenAI models, without overburdening their internal engineering teams.

Please complete the form to explore more OpenAI API integration use cases.

Questions on OpenAI API Integration for Enterprise

What are the hidden costs enterprises often overlook when integrating AI APIs into existing systems?

Token costs explode when you retry failed requests blindly. Most teams forget to budget observability—logging, monitoring, tracing each OpenAI API integration call adds infrastructure cost they didn't anticipate. Latency SLAs drive architecture decisions; building queues and caches to meet them costs more than the OpenAI API integration itself.

How can companies ensure their proprietary data remains secure when interacting with external AI APIs?

Keep fine-tuning datasets in your own infrastructure, not the vendor's cloud. Log every request, encrypted in transit and at rest. Your compliance team needs to audit data retention policies: how long does the OpenAI API integration store your inputs? Get specifics in writing.

What governance policies should be established before rolling out AI tools across departments?

Define who is responsible for cost allocation before departments start burning tokens. Establish precise lineage requirements—what data goes in, what outputs matter, who validates them. Set approval gates for new OpenAI API integration use cases. Without policies, drift happens fast.

What are the best metrics for tracking the business impact of AI-driven automation?

Measure throughput per person, not just cost savings. Track error rates and false positives that escape to customers. Monitor latency improvements against baseline manual workflows. Revenue per transaction matters more than raw OpenAI API integration usage.

How can OpenAI-based automation tools integrate with ERP or CRM systems in large organizations?

Build OpenAI API integration at the data pipeline layer, not the UI layer. Queue OpenAI API requests to prevent pipeline failures from cascading through your ERP. Version your prompts like schema migrations—test changes before deploying to production. Your middleware owns retry logic and error handling.
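Queueing requests between the ERP and the model can be sketched with the standard library. This is a single-process illustration under assumptions: `drain`, `stub_model`, and the invoice IDs are hypothetical, and a real deployment would use a durable message broker instead of an in-memory `queue.Queue`.

```python
import queue


def drain(requests: queue.Queue, call_model, dead_letters: list, max_attempts=3):
    """Process queued requests; park repeated failures instead of blocking the queue."""
    results = {}
    while True:
        try:
            request_id, prompt, attempt = requests.get_nowait()
        except queue.Empty:
            return results
        try:
            results[request_id] = call_model(prompt)
        except Exception as exc:
            if attempt + 1 < max_attempts:
                # Put the request back at the end of the queue to try again later.
                requests.put((request_id, prompt, attempt + 1))
            else:
                # Out of attempts: record it for human review and move on.
                dead_letters.append((request_id, repr(exc)))


def stub_model(prompt: str) -> str:
    """Offline stand-in for the API call; fails on one specific input."""
    if "malformed" in prompt:
        raise ValueError("simulated API error")
    return prompt.upper()


pending = queue.Queue()
pending.put(("inv-1", "summarize invoice 1", 0))
pending.put(("inv-2", "malformed payload", 0))
dead = []
done = drain(pending, stub_model, dead)
print(done, dead)
```

The ERP only ever writes to the queue, so an API outage shows up as a growing backlog and a dead-letter list, not as failed ERP transactions.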

What steps should an enterprise take to prepare its data infrastructure for scalable AI adoption?

Audit your schema first. Fix broken data sources before fine-tuning—garbage in, garbage out still applies. Build a logging infrastructure that captures OpenAI API integration calls and correlates them to business outcomes. Clean lineage matters more than raw data volume.

How can enterprises leverage OpenAI API to drive both industrial automation and IT modernization simultaneously?

Industrial automation gains speed when AI handles predictive maintenance: the API processes sensor data and flags equipment failures before they happen, reducing downtime and manual inspection cycles. IT modernization accelerates when the same API infrastructure automates legacy system documentation, code analysis, and infrastructure decisions, allowing your team to stop maintaining brittle manual processes. The real win: one API investment unlocks both manufacturing efficiency and infrastructure agility, thereby compressing timelines that typically operate on parallel tracks.
