A SaaS company spent two years building custom large language models for enterprises before realizing they were competing in one tier with another tier's resources—burning capital on training infrastructure while their actual advantage lived in domain-specific workflows. They pivoted to fine-tuning open models and rebuilt around what they could control: customer data pipelines, integration logic, and deployment speed. Revenue recovered because they stopped fighting a war they couldn't win and started winning the one that mattered. Book a call, get advice from DATAFOREST, and move in the right direction with a clear understanding of the three types of AI and AI market segmentation.

Where Does Your AI Investment Belong?

Companies don't fail at artificial intelligence adoption because the technology is bad. They fail because they're playing the wrong game at the wrong tier. Understanding where the real leverage sits, and where it doesn't, determines whether AI becomes a driver of business transformation or a budget sinkhole.

AI Will Either Scale Your Business or Drain It

Revenue growth tied to AI implementation in business isn't guaranteed just because the technology exists. Companies that scaled successfully treated AI as infrastructure for AI-powered decision making, not as a product feature to advertise. They automated high-volume processes, reduced cycle times, and freed up human capacity for judgment calls that machines can't handle. The ones that failed bought tools without changing how work actually gets done. Enterprise AI strategy doesn't fix broken operations; it amplifies them. Recognizing which of the three business AI tiers aligns with your operations is key.

When AI Spending Becomes a Strategic Mistake

Misaligned investment occurs when companies confuse their own capabilities with those of a higher tier among the three AI markets. A mid-sized firm investing in custom LLM training is competing with organizations that have 100 times the budget and specialized talent. The risk isn't just wasted money—it's opportunity cost. While capital burns on infrastructure that won't deliver, competitors using off-the-shelf models move faster. Strategic mistakes come from confusing ambition with capability.

Key misalignments include:

- Building foundational models without the talent or data moat to sustain them.

- Buying compute infrastructure instead of renting it when scale is uncertain.

- Chasing differentiation in layers where commoditization has already happened.

- Ignoring integration complexity in favor of shiny new tools.

Cutting Through the Noise to Find What Works

Hype creates urgency. Strategy requires patience. Filtering what matters means ignoring what venture capital celebrates and focusing on what makes operational sense. Does the tool reduce decision latency? Does it remove a bottleneck that humans can't scale past? Does it integrate with existing workflows without requiring a platform overhaul? If the answer is no, it doesn't matter how impressive the demo looks. Real strategy starts with admitting which problems actually need solving, and which of the three types of AI holds the solution.

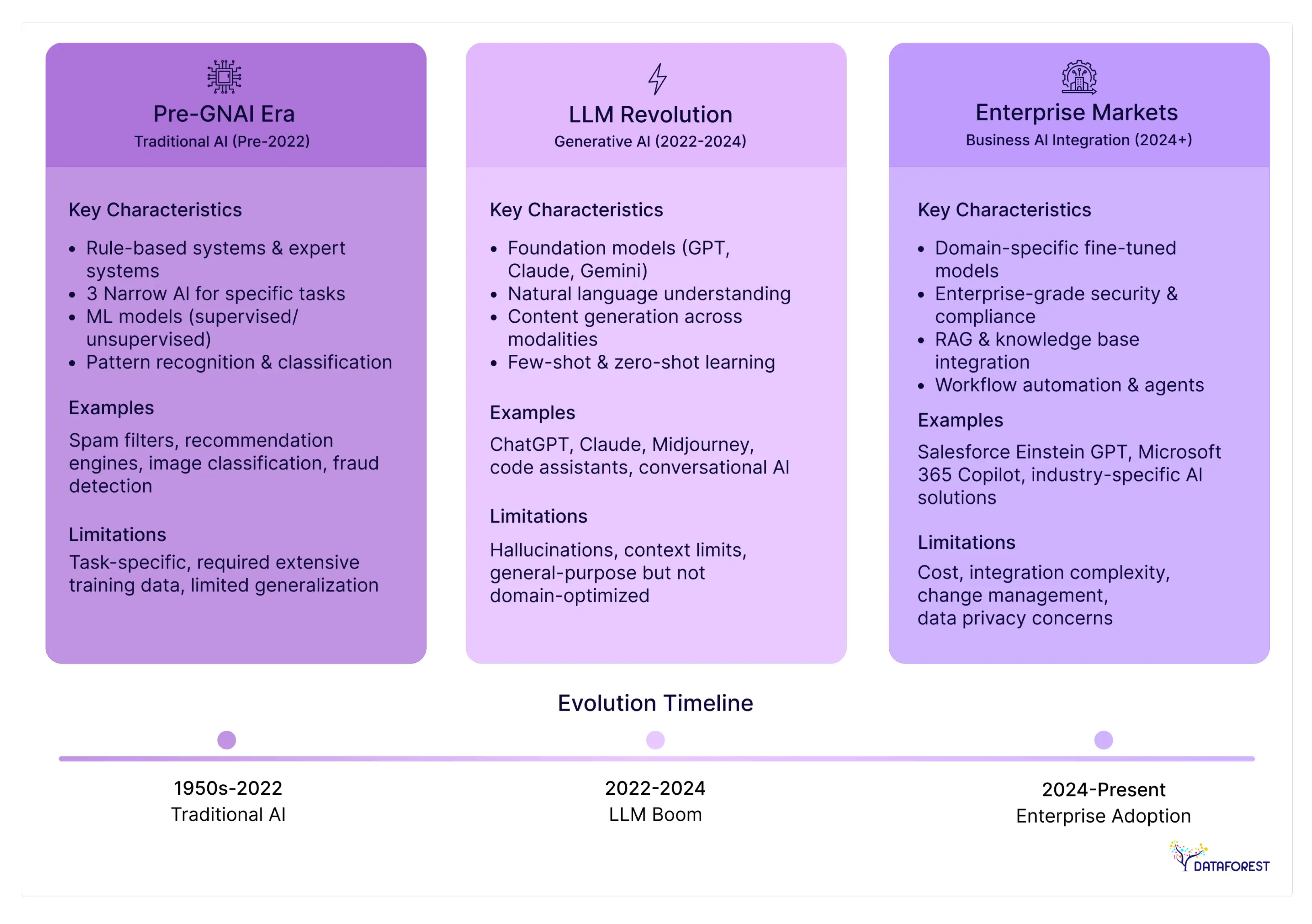

Tier 1: Pre-Generalized Generative AI

Pre-generalized generative AI focuses on the physical and computational foundations that enable machine learning for enterprises, forming the first layer among the three types of AI.

The Foundation Layer Most Companies Never See

Pre-generalized AI encompasses everything that occurs before generative models are introduced, including data pipelines, compute infrastructure, storage systems, and narrow pre-GNAI applications such as recommendation engines or fraud detection. These tools don't create new content—they classify, predict, or optimize based on historical patterns. Enterprises rarely build this layer from scratch; they rent it from cloud providers or use vendor solutions designed for specific tasks within the three types of AI hierarchy.

Where Pre-GNAI Delivers Measurable Results

Fraud detection systems flag suspicious transactions faster than human review teams ever could. Demand forecasting models reduce inventory waste by predicting stockouts before they happen. Recommendation engines drive conversion by surfacing products customers didn't know they wanted. These applications work because the problems are narrow, the data is structured, and the stakes are well-defined. Success here doesn't require breakthrough technology, just consistent execution in the first of the three types of AI.

Why Enterprises Can't Rely on This Tier Alone

Pre-GNAI solves problems within boundaries it already knows. It can't handle ambiguity, adapt to unstructured inputs, or generate novel outputs. When markets shift or edge cases arise, these systems break silently: they continue running but cease to be useful. Relying entirely on this tier means trading flexibility for stability. That works until the environment changes faster than the models can retrain—one of the core challenges of AI adoption highlighted across the three types of AI.
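One way to catch a model that keeps running after its inputs have changed is to compare recent input data against the data it was trained on. The sketch below is a minimal illustration, not a prescribed method: it computes a population stability index (PSI) for a single numeric feature using only the standard library. The function name, the bin count, and the sample data are assumptions made for the example.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
) -> float:
    """Compare two samples of one feature; larger values mean more drift."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 when every baseline value is identical.
    width = (hi - lo) / bins or 1.0

    def shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range values into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    base, cur = shares(baseline), shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


# Hypothetical data: the same feature at training time and after a market shift.
training_sample = [i / 100 for i in range(100)]
recent_sample = [0.5 + i / 100 for i in range(100)]
drift_score = population_stability_index(training_sample, recent_sample)
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a sign the input distribution has shifted enough to warrant review or retraining, though the right threshold depends on the feature and the business risk.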

Tier 2: Training Large Language Models

Training LLMs requires capital, talent, and infrastructure that most enterprises don't have. The question isn't whether the technology works—it's whether the investment makes sense for what you're trying to control.

What LLMs Change About How Work Gets Done

LLMs handle unstructured inputs that older systems can't touch. They draft reports, synthesize customer feedback, and answer questions without needing pre-programmed rules. This shifts work from execution to review—humans check output instead of creating it from scratch. That compression matters when volume exceeds human capacity, but quality still needs oversight.

Why Some Companies Bet on Training Their Own

Proprietary models promise control over differentiation and data privacy. If competitors use the same off-the-shelf model, training your own creates separation—at least in theory. Some industries have compliance constraints that make third-party APIs unusable. But the bet only pays off if the model delivers something that generic options can't, and if the organization can sustain ongoing retraining costs. Understanding this second tier within the three types of AI helps avoid costly missteps.

Key reasons enterprises pursue custom training:

- Specialized domain knowledge is not present in public models.

- Regulatory requirements that block external API usage.

- Belief that proprietary data creates a defensible moat.

- Need for uptime without vendor dependency.

Where the Model Breaks Down

LLMs sometimes hallucinate, producing confident answers to questions they don't understand. Many LLM adoption efforts in business fail to scale because feedback loops are missing. Costs scale unpredictably: compute bills spike during retraining, and talent with deep ML expertise is scarce and expensive. The model might work in demos, but it fails when edge cases multiply faster than the team can patch them.

Tier 3: Enterprise AI Markets

Tier three is where enterprise AI solutions live. Here, models stop being impressive demos and start breaking against workflows that weren't designed for them.

When Off-the-Shelf Becomes Enterprise Infrastructure

Point tools evolve into enterprise-grade AI platforms when scaled across thousands of users. A chatbot used by three people is a toy. The same tool, serving 10,000 employees, becomes infrastructure: it needs permissions, audit trails, and rollback capabilities. Vendors who understand this gap build for governance from the start, which is critical in the third tier of the three types of AI. Those vendors do well in the enterprise AI market, while those that don't get dropped during procurement.

AI Meets the Work That Pays the Bills

- Healthcare providers utilize AI to convert physician dictations into structured clinical notes, reducing documentation time without compromising accuracy.

- Legal teams deploy contract analysis tools that scan M&A documents for liability clauses faster than associates can read them.

- Financial institutions operate compliance monitoring systems that flag suspicious transaction patterns before regulators become aware of them.

- Logistics companies optimize delivery routes in real-time, adjusting for weather and traffic without requiring dispatcher intervention.

- Retailers utilize demand forecasting models to minimize overstocking and prevent stockouts, thereby protecting margins without relying on guesswork.

Recognizing where each of the three types of AI applies ensures investments align with real-world enterprise digital transformation.

Why Enterprise-Ready AI Is Different

Enterprise AI must function reliably, because a single mistake can trigger lawsuits or regulatory fines. Consumer tools can fail without consequences, but companies need audit trails, security guarantees, and predictable costs. The real difference lies in integration: most businesses run on decades of accumulated legacy systems that don't communicate with each other. Reliability and support become contractual obligations with financial penalties, not nice-to-haves. Getting thousands of people to adopt new tools requires thorough documentation, extensive training, and months of committee approvals before anyone can touch the software.

Which AI Tier Fits Your Business?

Some companies cannot distinguish between a language model and a production system. That confusion costs time, budget, and credibility when projects fail to meet expectations. Understanding the three types of AI provides clarity on capabilities and limitations.

What Each Tier Can and Cannot Do

How Ready Each Tier Is for Real Deployment

Pre-Generalized Systems have been in production for years. Banks use fraud detection models. Retailers run recommendation engines. The technology is stable because the scope is limited. Implementation risk is low if the problem aligns with the system's intended purpose. Maintenance is straightforward until business needs evolve beyond the model's capabilities.

Large Language Models are stable at the foundation layer but unstable at the application layer. The base technology works: GPT, Claude, and similar models reliably process language. The problem is connecting them to company data, workflows, and compliance requirements without creating new risks. Many organizations lack the internal expertise to deploy these safely at scale. Pilot projects succeed, but moving to production exposes gaps in security, monitoring, and cost control.

Enterprise Platforms trade cutting-edge performance for predictability. Vendors smooth out the rough edges, add guardrails, and provide support contracts. Deployment timelines stretch longer because procurement, legal, and security teams all need sign-off. However, once implemented, the system comes with someone to call when things go wrong. Maturity varies by vendor and industry; financial services platforms are further along than those in healthcare or manufacturing.

What Returns Look Like at Each Level

Pre-Generalized AI (Narrow / Task-Specific AI)

- Fast ROI, usually within a few months.

- Gains show up in specific, measurable areas:

  - Customer service deflection (chatbots, FAQ bots).

  - Reduced processing time for standard documents.

  - Fewer manual data entry errors.

- Payback is driven by:

  - Headcount avoidance.

  - Process speed improvements.

- Low ceiling on returns:

  - Once the task is automated, performance plateaus.

  - To expand ROI, you need separate models for every new use case.

Foundation Model Development

- ROI is unclear unless operating at a considerable scale.

- Upfront investment can reach millions before any return is realized.

- Justification relies on:

  - Flexibility: one model serving many downstream tasks.

  - Owning core IP instead of licensing models.

- Only practical for:

  - Tech giants.

  - Government programs.

  - Research institutions.

- Payback timeline:

  - Measured in years, not quarters.

- Most companies lack what custom LLM training requires:

  - Data volume.

  - Infrastructure.

  - Talent depth.

Integrated Business AI Ecosystems

- Target ROI: 20–40% efficiency gains across knowledge work.

- Value proposition:

  - Replace multiple siloed tools with one AI layer or platform.

- Reality check:

  - Integration costs and timelines are almost always underestimated.

  - Adoption and organizational behavior are bottlenecks.

- Payback window:

  - 12–18 months post-deployment.

- Success depends on:

  - Process redesign.

  - Actual changes in how employees work.

- Failed deployments happen when:

  - Companies purchase the platform but retain their existing workflows.
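The payback windows above come down to simple arithmetic: upfront cost divided by net monthly benefit. The sketch below shows that calculation under stated assumptions. The function name and every figure in it are hypothetical, chosen only to illustrate how a payback estimate is reached, not taken from any real deployment.

```python
import math


def payback_months(
    upfront_cost: float,
    monthly_benefit: float,
    monthly_run_cost: float,
) -> float:
    """Months until cumulative net benefit covers the upfront investment."""
    net = monthly_benefit - monthly_run_cost
    if net <= 0:
        # Running costs eat the whole benefit, so the investment never pays back.
        return math.inf
    return upfront_cost / net


# Hypothetical platform rollout: all three figures are illustrative assumptions.
months = payback_months(
    upfront_cost=600_000,
    monthly_benefit=60_000,
    monthly_run_cost=20_000,
)
```

Running the same calculation with pessimistic integration costs and slower adoption is a quick way to see how sensitive the payback window is to the assumptions that tend to be underestimated.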

What Should You Do Before Buying AI?

Companies skip the hard questions until after the contract is signed—by then, misaligned goals and missing infrastructure turn promising pilots into expensive failures.

Start with Problems, Not Technology

Map business objectives to specific pain points before evaluating any AI tool. A roadmap built around "adopting AI" produces shelfware—systems nobody uses because they don't solve real work. Define success metrics that matter to operations, such as cycle time reductions, error rates, and cost per transaction. Technology choices follow from these constraints.
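Defining those metrics before any purchase can be as simple as computing them from existing transaction logs. The following is a minimal sketch assuming a hypothetical `Transaction` record with three fields; real logs will have different shapes, and the field names here are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    cycle_hours: float  # time from request to completion
    cost: float         # fully loaded cost of handling the transaction
    had_error: bool     # whether rework was needed


def operational_metrics(transactions: list[Transaction]) -> dict[str, float]:
    """Summarize the three metrics named in the text for a batch of work."""
    n = len(transactions)
    if n == 0:
        raise ValueError("need at least one transaction")
    return {
        "avg_cycle_hours": sum(t.cycle_hours for t in transactions) / n,
        "error_rate": sum(t.had_error for t in transactions) / n,
        "cost_per_transaction": sum(t.cost for t in transactions) / n,
    }


def relative_change(before: float, after: float) -> float:
    """Fractional change in a metric; a negative result means it went down."""
    return (after - before) / before
```

Capturing a baseline with `operational_metrics` before a pilot, then again afterward, gives a like-for-like comparison that `relative_change` can express as a percentage.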

Choose Vendors Who Understand Your Constraints

The right partner admits what their system can't do and where integration will hurt. Beware of demos that show perfect scenarios—ask what breaks, how often, and what happens when it does. Look for vendors serving companies at a similar scale and maturity, facing similar regulatory requirements. References matter more than feature lists; talk to customers six months after deployment, when the novelty has faded.

Fix Data Foundations Before Deployment

AI systems fail when data quality, access controls, or lineage documentation are not in place. Organizations often discover these gaps mid-implementation, when connecting systems surfaces inconsistent formats, missing permissions, or compliance violations. Building governance frameworks after acquiring the technology results in delays, scope creep, and frustrated teams. Set retention policies, classification standards, and access rules first.
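A lightweight audit can surface some of these gaps before any system is connected. The sketch below counts missing required fields and non-ISO date formats in a batch of records. It is an illustration under stated assumptions: the function name, the field names, and the choice of `YYYY-MM-DD` as the expected date format are all hypothetical.

```python
import re
from collections import Counter

# Assumed target format for dates: YYYY-MM-DD.
ISO_DATE = re.compile(r"^\d{4}-\d{2}-\d{2}$")


def audit_records(
    records: list[dict],
    required: list[str],
    date_field: str,
) -> Counter:
    """Count basic quality problems across a batch of records."""
    issues: Counter = Counter()
    for rec in records:
        for field in required:
            # Treat absent keys, None, and empty strings as missing.
            if rec.get(field) in (None, ""):
                issues[f"missing:{field}"] += 1
        value = rec.get(date_field)
        if value and not ISO_DATE.match(str(value)):
            issues[f"bad_format:{date_field}"] += 1
    return issues


# Hypothetical sample records with a mix of problems.
sample = [
    {"id": 1, "owner": "ops", "created": "2024-01-05"},
    {"id": 2, "owner": "", "created": "05/01/2024"},
    {"id": 3, "created": None},
]
report = audit_records(sample, required=["id", "owner", "created"], date_field="created")
```

Checks like this cover only format and completeness; access controls and lineage still need their own review.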

Navigating the Three Tiers of AI with DATAFOREST

DATAFOREST helps enterprises start at the pre-generalized systems tier by cleaning, structuring, and integrating their data for AI data analytics.

At the large language model training tier, we handle data curation, labeling, and pipeline setup, making sure the datasets are clean, traceable, and ready for model fine-tuning or custom training. We also help deploy LLMs into real-world workflows—such as chatbots, copilots, or AI agents—where models actually solve business problems instead of merely sitting in experiments.

At the enterprise platform tier, DATAFOREST builds scalable, secure AI platforms that connect to existing systems, manage costs, and stay maintainable over time.

Throughout all tiers, the team focuses on governance, privacy, and compliance—so innovation doesn’t turn into a legal headache. We also run proofs-of-concept and MVPs to test ROI and reduce risk before full rollout. DATAFOREST guides companies through every stage of AI maturity—from raw data to trained intelligence to enterprise-ready AI platforms, effectively mastering the three types of AI.

Please complete the form to determine the exact tiers of AI your business truly needs.

The Future of AI Belongs to Those Who Orchestrate All Three Markets

Forbes reports that AI is no longer one big market but three tightly linked ones:

- Inference, where models actually run and make decisions in real time.

- Training and infrastructure, which powers the heavy lifting of building and refining those models.

- Enterprise platforms, where AI gets packaged into tools people can actually use.

With new trends like agentic AI and sovereign AI, the pressure is on. The next competitive edge will come from orchestrating the whole AI stack across all three types of AI.

FAQ About Types Of AI

How can enterprises determine which AI tier is best suited for their current business maturity?

Start by assessing readiness across the three types of AI. If basic data infrastructure doesn't exist or leadership can't articulate the problem being solved, no tier will work.

What budget considerations should executives account for when adopting pre-GNAI solutions?

The first of the three types of AI may appear cost-effective initially, but hidden costs include customization, retraining, and integration as business needs evolve. Allocate a budget for maintenance engineers and for retraining cycles whenever your data or operations change.

How do regulatory and compliance requirements differ across AI tiers?

Among the three types of AI, Tier 1 systems are easiest to audit due to deterministic behavior. Tier 2 (LLMs) introduces uncertainty and requires robust monitoring to ensure outputs meet compliance standards. Tier 3 platforms bundle governance tools but still need legal validation across jurisdictions.

What are the hidden costs of implementing large language models in enterprise workflows?

The second of the three types of AI brings unpredictability: compute spikes, integration delays, and the need for specialized staff to supervise models. Building guardrails and feedback loops often triples projected implementation timelines.

How do AI market trends influence strategic planning for digital transformation?

Trends may highlight all three types of AI, but not every business should adopt each tier simultaneously. Align adoption with operational bottlenecks and measurable KPIs. Success depends less on trend-following and more on matching the right tier to your business maturity.

What types of AI models exist beyond the three main tiers?

Beyond the three main tiers, some specialized models handle vision, speech, forecasting, and optimization—each built for different data types and problem structures. Most companies need combinations, not single models, which is where integration costs and complexity multiply fast.
