A data pipeline is a whole ecosystem: ingestion services, ELT platforms, cloud integrations, microservices, and stream processing.

That’s why cloud data pipelines and enterprise pipeline solutions have become a priority for companies that want to not just store large amounts of data, but also work with it quickly, accurately, and at scale—often by partnering with a data pipeline company that can deliver reliable infrastructure.

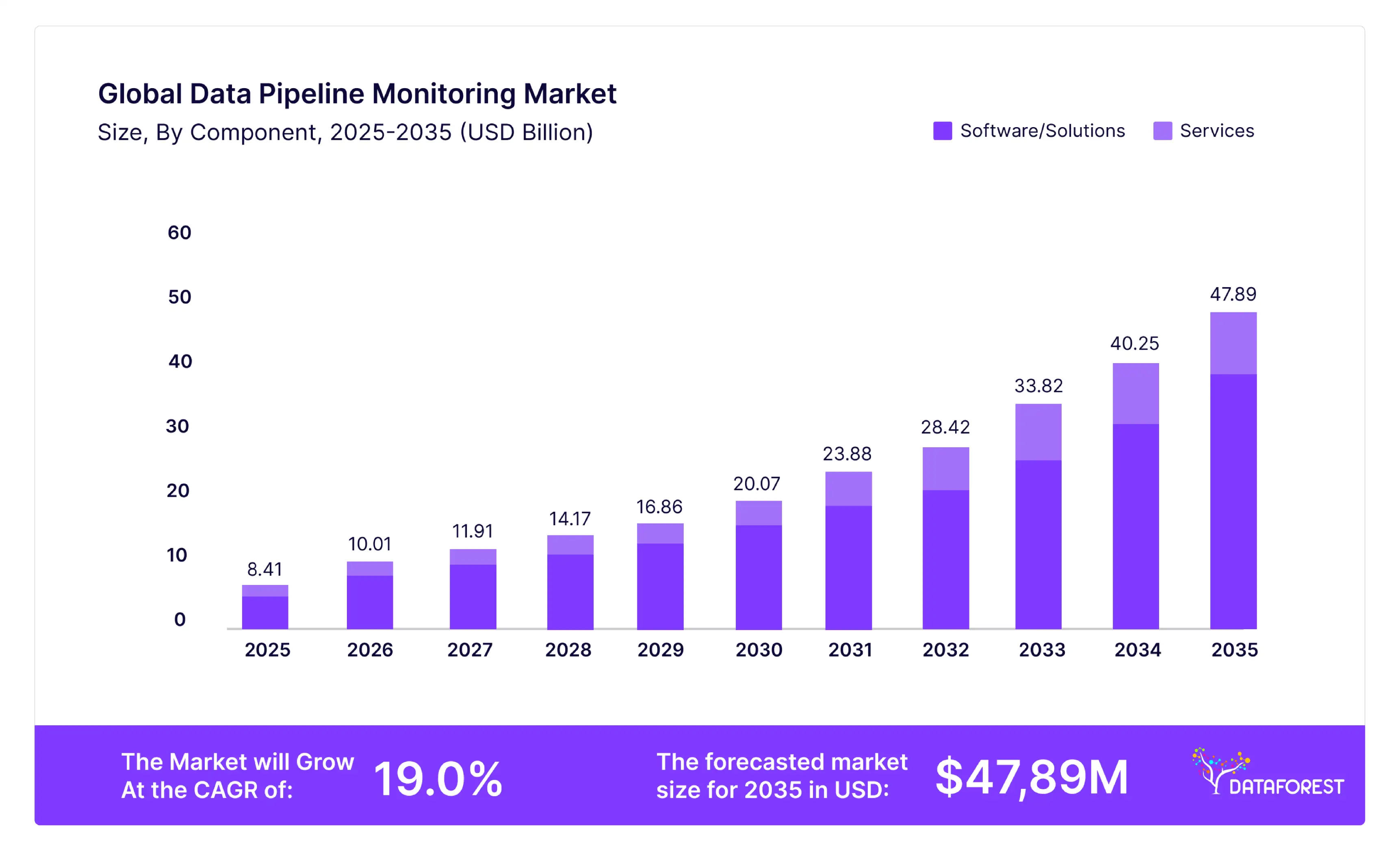

The market is flooded with offers, from data engineering services to data pipeline consulting services. In this article by DATAFOREST, we review 10 leading pipeline vendors that have already proven their effectiveness on large platforms and offer reliable solutions for complex infrastructures.

If you need an individual approach to a solution, book a call.

Why Data Pipelines Are Critical for Modern Enterprises

The world has become data-centric. In everything from e-commerce to medical research, data no longer just “helps” decision-making. It drives everything from customer behavior to growth strategy, and the right data pipeline company ensures that information flows smoothly across systems.

Business Impact of a Robust Data Pipeline

This is where automation from a data pipeline company comes into play, allowing users to:

- instantly identify anomalies in user behavior

- personalize offers based on behavioral patterns

- reduce reporting time from days to minutes

- reduce manual work and the human factor in workflows

The result is a more flexible strategy, faster response to changes, and increased customer LTV, which is exactly what a modern data pipeline company helps enterprises achieve.

Common Enterprise Use Cases

Pipelines are needed not only for analytics. In large companies, they become the central hub that connects CRM, ERP, BI, logistics, payment systems, and dozens of other internal and external sources, typically designed by a data pipeline company specializing in enterprise integration.

The most common use cases:

- integration platforms for combining legacy systems with cloud solutions

- real-time data streaming supported by a data pipeline company for monitoring IoT devices or behavior in mobile applications

- ingestion pipelines for collecting events from websites, APIs, databases, third-party tools

- automated ETL/ELT processes engineered by a data pipeline company to prepare data for machine learning or reporting

ROI of Well-Built Data Infrastructure

Companies that invest in enterprise-grade solutions from a data pipeline company get:

- higher forecasting accuracy (thanks to cleansed and consistent data)

- faster delivery of valuable information to teams (instead of manual exports from 5 systems)

- lower support costs (because automation replaces manual crutches)

- greater confidence in data (when there is monitoring, alerts, SLAs, quality control)

In some cases, companies were able to reduce the cost of analytical processes by up to 40% simply by implementing automated cloud data pipelines through a data pipeline company.

Key Criteria for Evaluating Data Pipeline Companies

Scalability & Performance

Question number one: What will happen to your pipelines when data volumes grow 10x?

A professional data pipeline company must ensure that pipelines not only “hold the load”, but also dynamically scale—horizontally, automatically, without drops or bottlenecks.

This matters most for real-time data streaming, where a delay of a few seconds can cost money or customers, something every enterprise-grade data pipeline company must address.

Cloud & Hybrid Compatibility (AWS, Azure, GCP)

Cloud data pipelines should work everywhere: in the cloud, on-prem, and in hybrid setups. The best data pipeline company solutions provide flexibility regardless of the client's infrastructure.

Here, it is important to evaluate:

- which cloud connectors the data pipeline company supports

- whether there are native integrations with GCP, Snowflake, and Redshift

- whether it is possible to work in a multi-cloud architecture with your chosen data pipeline company

Real-Time vs Batch Capabilities

Sometimes you need streaming: events from a mobile application land in Kafka and are processed in Spark in real time. Other times you need nightly batches from SAP. The best data pipeline company platforms can work both ways.

Check if the data pipeline company supports:

- Streaming systems: Kafka, Flink, Pulsar

- Flexible frequency settings: from once a day to sub-second

- Queue control, error handling, idempotency
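To make the last item concrete, here is a minimal Python sketch of how error handling and idempotency work together. It is an illustration only, not any vendor's implementation: `IdempotentSink`, `with_retry`, `deliver_with_lost_ack`, and the `event_id` field are all hypothetical names chosen for this example. The scenario assumes a write that succeeds but whose acknowledgement is lost, so the retry delivers the same event twice.

```python
import time
from typing import Callable, Dict


class IdempotentSink:
    """In-memory stand-in for a target table keyed by event ID (hypothetical)."""

    def __init__(self) -> None:
        self.rows: Dict[str, dict] = {}

    def write(self, event: dict) -> bool:
        """Apply the event once; return False if it was already applied."""
        event_id = event["event_id"]  # assumed unique per event
        if event_id in self.rows:
            return False
        self.rows[event_id] = event
        return True


def with_retry(action: Callable[[], bool], attempts: int = 3, base_delay: float = 0.0) -> bool:
    """Retry on ConnectionError with exponential backoff; re-raise after the last attempt."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return action()
        except ConnectionError:
            if attempt == attempts - 1:
                raise
            time.sleep(base_delay * (2 ** attempt))
    raise AssertionError("unreachable")


def deliver_with_lost_ack(sink: IdempotentSink, event: dict) -> int:
    """Simulate a write whose first acknowledgement is lost; return the number of attempts."""
    calls = 0

    def flaky_write() -> bool:
        nonlocal calls
        calls += 1
        applied = sink.write(event)
        if calls == 1:
            raise ConnectionError("acknowledgement lost after write")
        return applied

    with_retry(flaky_write)
    return calls
```

Calling `deliver_with_lost_ack(IdempotentSink(), {"event_id": "evt-1", "amount": 10})` makes two write attempts, yet the sink ends up holding a single row. Without the ID check, the retry would have produced a duplicate, which is why retries and idempotent writes are usually evaluated together.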

Security, Compliance & SLAs

A serious enterprise data pipeline company has:

- SOC2, GDPR, HIPAA support

- Column/table level access control

- SLA with 99.9%+ uptime

- Audit and versioning capabilities for pipelines
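When comparing SLAs, it can help to translate an uptime percentage into a downtime budget. The short Python sketch below does that arithmetic; the function name and the 30-day default period are assumptions for illustration, and real SLAs define their own measurement windows and exclusions.

```python
def allowed_downtime_minutes(uptime_percent: float, period_days: float = 30.0) -> float:
    """Return the downtime (in minutes) permitted by an uptime SLA over a period."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - uptime_percent / 100)


if __name__ == "__main__":
    # 99.9% over 30 days allows roughly 43.2 minutes of downtime;
    # 99.99% over the same period allows roughly 4.3 minutes.
    for sla in (99.9, 99.99):
        print(f"{sla}% uptime -> {allowed_downtime_minutes(sla):.1f} min per 30 days")
```

The gap between 99.9% and 99.99% looks small on paper but is a tenfold difference in tolerated downtime, which is worth checking against the latency requirements of any real-time workloads.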

Pricing & Enterprise Support

Not every data pipeline company is suitable for enterprises. Many startups attract customers with a low price but lack customization.

Enterprise customers need a data pipeline company that offers:

- a transparent pricing model (not always per volume; sometimes per connector, execution, or user)

- premium technical support

- the ability to order data pipeline consulting services for integration or optimization

- a product roadmap taking into account the needs of large organizations

Forbes highlights McKinsey & Company, BCG, and Bain & Company as top management consultancies across categories. While this Forbes ranking is about broad consulting excellence, these firms also advise on digital/data strategy and transformation that often includes pipeline modernization. Data and analytics strategy is increasingly part of how top consultancies differentiate in advising enterprise clients.

Top 10 Data Pipeline Companies for Enterprises

DATAFOREST

DATAFOREST is a product and data engineering company with over 15 years of expertise in business automation, large-scale data analysis, and advanced software engineering. We specialize in building custom cloud data pipelines, ETL/ELT platforms, and end-to-end architecture as a trusted data pipeline company for enterprises.

Key Solutions & Technologies

Advanced data ingestion services: API connectors, scraping, integrations with ERP/CRM, IoT.

Hybrid approach: cloud + on-prem, using Spark and Hadoop for large-scale data processing, plus serverless services (AWS Glue, Azure Data Factory).

Real-time data streaming: Kafka, RabbitMQ, with event-driven architecture and retry/monitoring systems.

Business Impact

- Automation of ETL/ELT processes and reduction of manual work, allowing the business to focus on analytical value.

- Performance optimization: PostgreSQL tuning and removal of IOPS bottlenecks, in projects that delivered 40-65% faster queries and cost savings.

Target Industries

DATAFOREST actively works with companies in e-commerce, retail, traveltech, finance, healthcare, and insurance. For retailers, they can create personalized data pipeline solutions that allow real-time updating of recommendation models, forecasting demand, and optimizing inventory. In healthcare, they can implement pipelines for medical record processing, integration with clinical systems, and patient analytics.

Why Choose Them

A full package: data pipeline development, infrastructure, analytics, ML, monitoring, and data pipeline consulting services, which allows businesses to delegate the entire cycle, from initial assessment to full implementation.

High customer satisfaction: 5.0/5 rating on Clutch and GoodFirms. Clients mentioned proactivity, efficiency, and great communication.

Transparent cooperation model with understandable budgets. You can book a free consultation with their team to discuss data pipeline solutions tailored to your specific needs.

Octolis

Octolis is a data pipeline company whose all-in-one customer data platform combines tools for connecting, processing, and synchronizing data in your own storage, as part of a modern data stack.

Key Solutions & Technologies

On the Octolis platform users can:

Centralize: automatically collect data from CRM, Ads, POS, API, GSheet, or webhooks, both in batch and real-time.

Unify: build master datasets using no-code or SQL tools, with real-time deduplication and identity resolution.

Prepare: clean, transform, and evaluate data (e.g., RFM segmentation, scoring recipes) into already structured business datasets.

Share: synchronize processed data into marketing tools, CRM, BI, Google Sheets, or via real-time API and webhooks.

Business Impact

Octolis is designed to accelerate the launch of marketing and operational initiatives: data collection, preparation, and activation in a matter of minutes.

Target Industries

Octolis is focused on customer-centric brands in e-commerce, marketing, CRM, SaaS, and branded segments.

Why Choose Them

A complete “out of the box” platform: from ingestion to activation, without the need to build a complex data engineering team.

Competitive pricing: starter plan at 700€ per month.

Imply

Imply is a data pipeline company focused on a high-performance database, built on Apache Druid, that is optimized for real-time data streaming. Its main product today is Imply Polaris, a fully managed cloud platform that allows users to run analytics applications out of the box without having to understand Druid in depth.

Key Solutions & Technologies

Stream and batch ingestion: Imply Polaris supports ingestion via both Kafka and Events API for real-time and batch channels.

Millisecond analytics: Thanks to columnar storage and indexing, queries return in under a second, even on terabytes of data.

Business Impact

Speed of Response: Teams use Imply to instantly analyze millions of events, for example, to detect anomalies or monitor user behavior, with millisecond latency, almost in real-time.

Cost Optimization: Customers report a 50% reduction in operational costs and savings in engineering time.

Target Industries

Finance, e-commerce, IoT.

Why Choose Them

- Interactive analytical applications;

- Cloud management without complex configurations.

Hevo Data

Hevo Data is a data pipeline company offering end-to-end ELT with built-in transformations and a focus on code-free integrations and scalability for enterprise workloads.

Key Solutions & Technologies

150+ pre-built connectors to databases, SaaS, cloud storage, and streaming services allow users to set up cloud data pipelines in 5 minutes without writing any code.

No-code and low-code transformations. Users can use the GUI, Python scripting, and dbt support for data automation and pre-load transformations, delivering data in an analytics-ready format.

Business Impact

With Hevo Data, customers save over $60,000 per year and reduce TCO by 50%, processing over 1PB of data per month. The platform also frees up 40 hours of engineering time per week, increasing team efficiency and reducing ETL costs by up to 85%.

Target Industries

E-commerce, fintech, healthcare, software, logistics, and digital products.

Why Choose Them

- Transparent pricing model.

- Enterprise-level security: end-to-end encryption, thorough role-based access model, VPN/SSH, private VPC connections—SOC2, HIPAA, GDPR-compliant.

Rivery

Rivery is a data pipeline company offering a cloud-native SaaS platform for ELT and data pipeline services. It is a classic representative of cloud data pipelines and enterprise data integration platforms, balancing ease of use, integrations, and DataOps functionality.

Key Solutions & Technologies

150-200+ pre-configured connectors.

Reverse ETL support: transfer data back to CRM, marketing tools, Slack, BI systems using API/webhooks.

Business Impact

Rivery claims to help accelerate pipeline launch time by 7-8 times, while reducing DataOps costs by 33%.

Target Industries

Rivery is suitable for data-intensive organizations: tech companies, e-commerce, fintech, marketing, IoT, and BI-dependent cases.

Why Choose Them

- A complete DataOps platform;

- AI Assistant;

- Pay-per-use pricing model.

DataKitchen

DataKitchen is a data pipeline company specializing in data quality, data observability, and DataOps. It allows organizations to monitor, test, and run pipelines with minimal errors.

Key Solutions & Technologies

DataOps Observability & Automation: covers the entire data path—from ingestion to analytical representation—with automated tests, alert setup, and monitoring at every stage of the pipeline.

Environment Creation (Kitchens): the ability to create separate environments (dev/test/prod) automatically.

Business Impact

DataKitchen helps to almost completely eliminate errors in production. Customers report a significant reduction in DataOps cycle time and increased pipeline reliability.

Target Industries

The platform is ideal for organizations where data quality and continuity are critical: pharmaceuticals, fintech, e-commerce.

Why Choose Them

Zero-error DataOps platform: automation, monitoring, testing, avoiding critical Data Quality errors in production.

Open-source + enterprise: TestGen and Observability are available as open-source, as well as in enterprise versions with professional support and consultations.

Airbyte

Airbyte is a data pipeline company providing an open data integration and synchronization solution. Today, it has been installed over 200,000 times and is used daily in 7,000+ companies thanks to convenient mechanisms for building data pipelines in the cloud and on-premises.

Key Solutions & Technologies

600+ ready-made connectors (both open and certified) covering databases, APIs, SaaS, and storage allow users to launch ELT quickly.

CDC (Change Data Capture) support: log-based replication via Debezium, which ensures the receipt of data changes in a mode close to real-time.
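To show why log-based CDC avoids reloading whole tables, here is a minimal Python sketch that applies a stream of change events to a target. The event shape is loosely modeled on the `op`/`before`/`after` envelope that Debezium emits, heavily simplified; the `id` key, the sample rows, and the function names are assumptions for illustration and do not reflect how Airbyte applies changes internally.

```python
from typing import Dict, Iterable


def apply_change(table: Dict[int, dict], event: dict, key: str = "id") -> None:
    """Apply one change event to an in-memory table keyed by primary key."""
    op = event["op"]
    if op in ("c", "r", "u"):  # create, snapshot read, update: upsert the new row
        row = event["after"]
        table[row[key]] = row
    elif op == "d":  # delete: remove the row described by the old image
        table.pop(event["before"][key], None)
    else:
        raise ValueError(f"unknown op: {op!r}")


def replicate(events: Iterable[dict]) -> Dict[int, dict]:
    """Build the target state by replaying change events in order."""
    table: Dict[int, dict] = {}
    for event in events:
        apply_change(table, event)
    return table


SAMPLE_EVENTS = [
    {"op": "c", "before": None, "after": {"id": 1, "email": "old@example.com"}},
    {"op": "c", "before": None, "after": {"id": 2, "email": "two@example.com"}},
    {"op": "u", "before": {"id": 1, "email": "old@example.com"},
     "after": {"id": 1, "email": "new@example.com"}},
    {"op": "d", "before": {"id": 2, "email": "two@example.com"}, "after": None},
]
```

Replaying `SAMPLE_EVENTS` with `replicate` leaves one row, the updated record with `id` 1. Only four small events crossed the wire, regardless of how large the source table is.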

Business Impact

Airbyte minimizes the need for large ETL teams. The open-source model allows users to host their own solutions without licensing costs, and ready-made connectors accelerate integrations.

Target Industries

- E-commerce to unify customer data, transactions, marketing channels;

- Fintech & SaaS for secure and regular data transfer between systems;

- Healthcare to ensure GDPR/HIPAA compliance when synchronizing medical data.

Why Choose Them

- Extensive connector catalog: over 600 supported, both open and certified;

- CDC via Debezium: efficient change synchronization, no complete rewrite;

- Easy to extend: CDK allows you to quickly add new integrations.

StreamSets

StreamSets is a data pipeline company whose DataOps-oriented platform enables users to create and manage smart streaming data pipelines through an intuitive graphical interface, facilitating seamless data integration across hybrid and multicloud environments.

Key Solutions & Technologies

Streams, CDC and batch: Data Collector Engine allows users to collect data from Kafka, JDBC, file systems, etc., and deliver it to Snowflake, ADLS, HDFS, S3 with flexible schema drift processing.

DataOps and monitoring: A single platform for launching, monitoring and managing pipelines with visualization and alerts for unreliable data.
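Schema drift, mentioned above, means that incoming records stop matching the structure a pipeline expects. The Python sketch below shows the general idea of detecting it by comparing a record against an expected schema. It is a simplified illustration, not StreamSets' mechanism; the function name, the schema representation, and the sample fields are assumptions.

```python
from typing import Dict, List


def detect_schema_drift(expected: Dict[str, type], record: dict) -> Dict[str, List[str]]:
    """Compare a record with the expected schema and report the differences."""
    expected_fields = set(expected)
    record_fields = set(record)
    type_changed = sorted(
        name
        for name in expected_fields & record_fields
        # None is treated as "no value" rather than a type change
        if record[name] is not None and not isinstance(record[name], expected[name])
    )
    return {
        "added": sorted(record_fields - expected_fields),
        "missing": sorted(expected_fields - record_fields),
        "type_changed": type_changed,
    }


def has_drift(report: Dict[str, List[str]]) -> bool:
    """Return True if any category of drift was found."""
    return any(report.values())
```

For an expected schema of `{"id": int, "amount": float, "currency": str}` and a record `{"id": 7, "amount": "12.50", "country": "DE"}`, the report lists `country` as added, `currency` as missing, and `amount` as a type change. A pipeline can then decide whether to adapt, alert, or quarantine the record.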

Business Impact

StreamSets reduces pipeline development and maintenance time by 90% with ready-made connectors, auto-detection of schema drift and low-code approach.

Target Industries

The service is focused on fintech (fraud detection), e-commerce, IoT/telemetry, SaaS and analytical cases.

Why Choose Them

- Ready-to-use solutions from ingestion to delivery;

- Resilience to change: the system automatically adapts to schema changes, warns about drift, and mitigates risks;

- Full deployment flexibility: supports multi-cloud and hybrid infrastructures.

Accenture

Accenture operates as a global data pipeline company delivering cloud-scale pipelines to move information into cloud warehouses.

Key Solutions & Technologies

- Cloud First provides migration, infrastructure management, and sovereignty solutions across multi-cloud environments.

- Data and AI focuses on scaling generative and agentic AI models to automate business processes and improve decision-making.

- Industry X uses digital twins, IoT, and robotics to modernize engineering and manufacturing operations.

Business Impact

Accenture builds artificial intelligence and cloud systems for 9,000 global clients to increase revenue and speed. These projects produce measurable gains like 15% lower costs in retail and 30% better retention in healthcare.

Target Industries

Global firms in banking, healthcare, manufacturing, retail, and energy.

Why Choose Them

- The firm employs 784,000 experts to manage global data estates through deep partnerships with AWS, Azure, and Google Cloud.

- They build custom AI models that help 90% of Fortune Global 100 companies cut costs and find new revenue.

Infosys

Infosys is a data pipeline company delivering automation via Topaz and Cobalt software to move and process large amounts of information for cloud systems.

Key Solutions & Technologies

- Topaz uses agentic AI to automate business tasks and process complex data sets.

- Cobalt provides tools for moving large workloads to hybrid and multi-cloud systems.

- Aster applies AI to marketing data to track customer behavior and improve sales.

Business Impact

Infosys enables 1,861 global clients to reduce costs and accelerate digital transformation through its large-scale automation and AI platforms. These initiatives deliver tangible results like a 35% lower cost of ownership for major retailers and over $100 million in annual value for consumer goods manufacturers.

Target Industries

Global enterprises in financial services, retail, manufacturing, energy, and communication services.

Why Choose Them

- The company uses the Topaz platform to deploy agentic AI that automates complex tasks and cuts costs for global firms.

- The firm maintains over 12,000 AI assets to help leaders move legacy data to multi-cloud systems using Cobalt tools.

Data Pipeline Companies Comparison Table

Select what you need and schedule a call.

Final Thoughts

There is no universal solution for building a pipeline. It all depends on how your business works and what data you rely on. Some teams value flexibility and the ability to write their own connectors, while others value a quick start and minimal configuration. What all these platforms have in common is an attempt to relieve the burden on teams that work with data every day. Choose not the “best” system, but the one that suits you.

If you’re looking for a data pipeline company, fill out this form, and our team at DATAFOREST will reach out to you shortly for a consultation to discuss your specific use cases.

FAQ

What’s the difference between ETL and pipeline tools?

ETL is a distinct approach to processing, with an emphasis on the sequence of "extract - transform - load". Pipeline platforms used by leading data pipeline vendors cover a wider range of tasks: transmission, monitoring, quality control, orchestration, and work in both batch and stream modes. ETL is just one possible scenario within the framework of a pipeline supported by big data platforms.

Can these tools handle real-time streaming?

Yes, most modern platforms support stream processing (CDC, Kafka, streaming APIs).

Are open-source solutions viable for big business?

Yes. If there is a technical team, open-source platforms provide more control and flexibility without licensing restrictions.

Which industries most commonly use pipelines and why?

Fintech, e-commerce, SaaS, media, and healthcare. Their data changes constantly and they need real-time analytics, which is why enterprise data pipeline solutions are widely adopted in these sectors.

What types of cloud integrations are offered by leading pipeline companies?

AWS, Azure, Google Cloud, Snowflake, BigQuery, and Redshift are the most common integrations supported by data pipeline providers.

What pricing models do top pipeline companies usually offer?

Common options include subscription (monthly/annual) or a contract model for enterprises.
