An e-commerce company struggled with customer support. They incorrectly routed 40% of their tickets. Customers waited three days, so they became frustrated. The company started using multimodal conversational AI. This system understood customer intent across chat, email, and voice. It routed tickets automatically. It passed issues to a person when necessary.

Two months later, the results were clear. The company resolved 78% of issues on the first try. Support costs fell by 35%. The time spent on each contact dropped from 12 minutes to four minutes. We can set up a call to help you achieve the same goal.

How to Manage AI That Sees and Hears

Your business produces text, images, video, and voice every day. Older AI systems handle only text. Multimodal conversational AI processes all these types of data at the same time. This ability creates new chances for business growth. You must know what these chances are.

What Multimodal Means

Single-modal AI has limits. It works if you give it text. It fails if you give it a video.

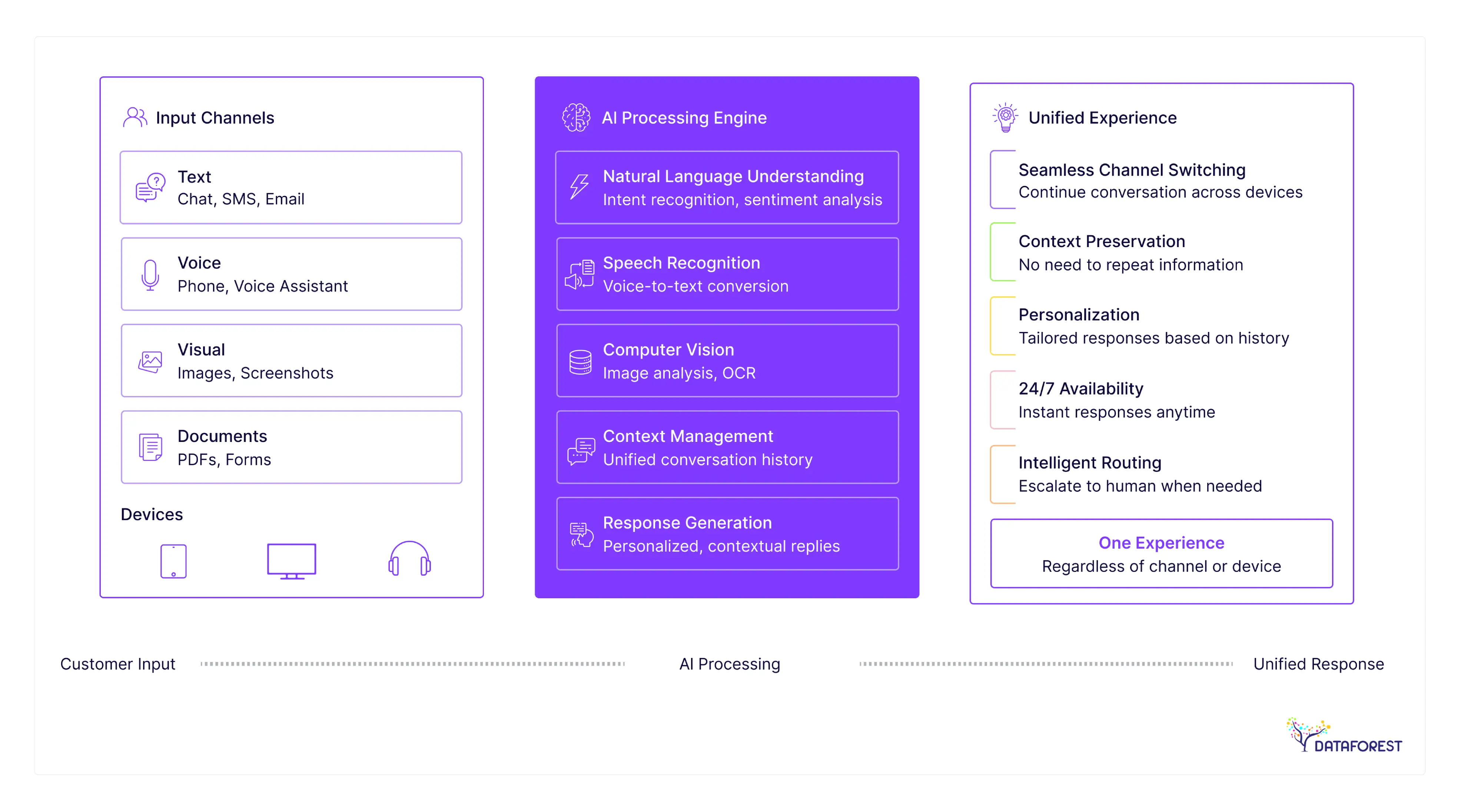

Multimodal conversational AI systems process many data types together. The system breaks video into image frames, audio, and their order in time. This gives the AI context. The system understands the relationship between what it sees and what it hears. This matters because customers do not use only text to communicate. They send screenshots. They leave voice notes. They explain problems through video. Single-modal systems miss all this information.

Multimodal conversational AI uses more computing power. It runs slower than a text-only system. It costs more money to run.

Take customer support, for example. Text-only chatbots do not hear tone of voice, so they do not detect anger. Multimodal conversational AI does. It processes the voice along with the words. Anger does not get ignored.

Core Parts of Conversational AI

Multimodal conversational AI uses parts that cannot work alone. Input encoders turn raw data into numbers. They convert images into arrays of data. They change audio into spectrograms. Each encoder handles one type of data. The system then lines them up. It puts the data into a single computational area so the data can work together.
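
The encode-then-combine idea can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: `encode_text` and `encode_audio` are toy stand-ins invented here, not real models, and the shared space is just a short list of numbers. The point is that each data type ends up as a vector of the same kind, so the vectors can be combined.

```python
from math import sqrt

DIM = 4  # assumed size of each per-modality vector


def normalize(vec):
    """Scale a vector to unit length so modalities are comparable."""
    norm = sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def encode_text(text):
    """Toy text encoder: add character codes into DIM slots."""
    vec = [0.0] * DIM
    for i, ch in enumerate(text.lower()):
        vec[i % DIM] += ord(ch)
    return normalize(vec)


def encode_audio(samples):
    """Toy audio encoder: average energy of DIM consecutive chunks."""
    chunk = max(1, len(samples) // DIM)
    vec = []
    for i in range(DIM):
        part = samples[i * chunk:(i + 1) * chunk]
        vec.append(sum(s * s for s in part) / len(part) if part else 0.0)
    return normalize(vec)


def fuse(*vectors):
    """Combine per-modality vectors by concatenation (one simple choice)."""
    return [v for vec in vectors for v in vec]


text_vec = encode_text("my order arrived broken")
audio_vec = encode_audio([0.1, 0.4, -0.3, 0.9, 0.2, -0.7, 0.5, 0.0])
fused = fuse(text_vec, audio_vec)  # one vector the later stages can read
```

Production systems use learned encoders and learned alignment instead of these hand-written functions. Concatenation is shown only because it is the simplest way to put two vectors side by side.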

The reasoning layer does the real work. Here, the AI finds patterns. It weighs relationships between the data. Does the tone of voice match the image? Are the signals contradicting each other? The reasoning layer decides what information is most important. It throws out the rest.
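
A rough way to picture this weighing step is shown here. It assumes each encoder reports a sentiment score and a confidence value. The `Signal` type, the thresholds, and the scores are hypothetical, and a real reasoning layer is a trained model rather than a weighted average.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    modality: str      # e.g. "text", "voice", "image"
    sentiment: float   # -1.0 (negative) to 1.0 (positive)
    confidence: float  # 0.0 to 1.0, how much the encoder trusts its reading


def weigh(signals, min_confidence=0.3, conflict_gap=1.0):
    """Drop weak signals, average the rest by confidence, flag contradictions."""
    kept = [s for s in signals if s.confidence >= min_confidence]
    if not kept:
        return {"sentiment": 0.0, "conflict": False, "used": []}
    total = sum(s.confidence for s in kept)
    sentiment = sum(s.sentiment * s.confidence for s in kept) / total
    values = [s.sentiment for s in kept]
    conflict = max(values) - min(values) >= conflict_gap
    return {
        "sentiment": sentiment,
        "conflict": conflict,
        "used": [s.modality for s in kept],
    }


result = weigh([
    Signal("text", 0.4, 0.9),    # polite wording
    Signal("voice", -0.8, 0.7),  # angry tone
    Signal("image", 0.0, 0.1),   # blurry photo, low confidence: discarded
])
```

In this example the polite text and the angry voice disagree, so the result carries a conflict flag. The low-confidence image reading is thrown out.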

Output generation creates the final response. This might be text. It might be a video clip or an audio response. The system must turn its understanding into the format people use.

This system structure creates three operational problems for multimodal conversational AI:

- Data problems. Video frames, audio parts, and text do not arrive at the same time. This lack of timing breaks the context. The system fails.

- Cost problems. Processing many data streams at once needs expensive hardware. Most projects do not need this much cost. You must solve the right business problem first.

- Quality problems. Multimodal conversational AI only works as well as its weakest encoder. Blurry images reduce the quality of everything. Bad audio ruins the result. One weak part breaks the whole system.
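
The timing problem in the first bullet can be made concrete with a small sketch. It assumes every event carries a timestamp in seconds and that one-second windows are a reasonable grouping. The event tuples and the `required` modalities are invented for illustration.

```python
from collections import defaultdict


def align(events, window=1.0):
    """Group (timestamp, modality, payload) events into fixed time windows."""
    buckets = defaultdict(dict)
    for ts, modality, payload in events:
        buckets[int(ts // window)][modality] = payload
    return [buckets[k] for k in sorted(buckets)]


def incomplete(windows, required=("video", "audio")):
    """Return indexes of windows that are missing a required modality."""
    return [i for i, w in enumerate(windows) if any(m not in w for m in required)]


events = [
    (0.10, "video", "frame-1"),
    (0.15, "audio", "chunk-1"),
    (1.20, "video", "frame-2"),
    (2.05, "audio", "chunk-2"),  # arrived late: lands in the next window
]
windows = align(events)
gaps = incomplete(windows)  # windows where picture and sound do not line up
```

Here the second audio chunk arrives late, so two windows each hold only half of the context. A real pipeline would buffer or resynchronize the streams instead of only reporting the gap.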

McKinsey & Company wrote about this on June 10, 2025.

This change moves customer experience past the "chatbot answers text" stage. The new system is an assistant that understands context across all media through multimodal conversational AI.

Why Businesses Adopt Conversational AI

Your customers stopped using one channel years ago. Businesses still manage email, chat, voice, and video in separate groups. Conversational AI forces them to unify.

Building One System That Works

Retailers built omnichannel experiences by connecting separate parts. The website, store, and app each had their own system and data. Shoppers switch between moments: scrolling on a phone, visiting a store, checking email. They expect one single experience.

The shift to unified tools means one customer file. It means one view of data. It means one brand voice everywhere. This requires getting rid of separate systems. Inventory, order processing, and history must all match up. Purchase data must follow this plan. Prices and promotions must be the same everywhere.

Most companies treat channels as separate processes. The companies that win are not those with more places to reach customers. The winners are the ones where channels stop existing entirely. With multimodal conversational AI, the customer no longer sees the boundaries between channels.

The Value of Conversational AI

Multimodal conversational AI cuts support costs. It handles text, voice, and video through one unified system. This removes the need for separate teams. Cost savings happen right away. Customer resolution speeds up because of fewer handoffs and instant context.

Companies often miss the main point. They chase cost cuts instead of more revenue. The real return comes from personalizing service for many people. Multimodal conversational AI learns what customers like. It anticipates what they need. It sells extra products intelligently. Doing this across millions of interactions completely changes profit margins.

Your AI Sounds Human, Competitors Sound Like Robots

Multimodal conversational AI gives you a market edge. It delivers experiences competitors cannot copy. It offers instant, clear responses across text, voice, and video in one place. People start seeing the brand as intimate instead of just transactional. Customers feel truly understood.

The system remembers past context. It learns what people prefer. It predicts needs before customers ask. Speed becomes something the customer does not even notice. Execution matters more than just having features. Brands that use multimodal conversational AI look smarter and more attentive. Competitors stuck in separate channels look old-fashioned.

Adopting this creates a growing advantage. Early users build better data sets. They train their AI on customer patterns. They grow their lead with every interaction. The perception solidifies quickly. One brand feels modern and human. Others feel fragmented and slow. Within eighteen months, the gap is almost impossible to close.

How Does Multimodal Conversational AI Change Industries?

AI is now more than just chatbots. Multimodal conversational AI mixes text, voice, images, and video. This feels natural. It is like talking to a person who can see what you point at.

Retail and E-commerce

Shoppers take photos of items they want. The multimodal conversational AI quickly finds the item. This ends the need to scroll through endless pages. The system sees small details in milliseconds. It recognizes fabric texture or the shape of a shoe heel. The old keyword search is gone.

You show the picture, and you tell the system what you need. The multimodal conversational AI compares the visual data to the product inventory. It keeps the conversation flowing naturally. It does not ask robotic questions. It performs searches instantly by price and category. The system finds the vintage dress you saw on Instagram. It adds the item to your cart before you finish your coffee. It also works with voice commands: say it, photograph it, buy it.

ASOS used multimodal conversational AI for visual search. People uploaded images inspired by street style or TV shows. Over three months, shopping cart values went up by 27%. People buy more when they find exactly what they want. Returns dropped by 22%. Customer satisfaction rose sharply.

Banking and Finance

Banks have always faced a conflict between security and ease of use. Multimodal conversational AI solves this problem. These systems combine voice patterns, face recognition, and user behavior. This creates a secure login that is easy for the real user. The system processes documents during the conversation. You do not have to wait while a person checks your statement. The AI reads your uploaded mortgage statement, processes the numbers, and continues the conversation without a pause.

JPMorgan Chase set up a multimodal conversational AI assistant. This assistant handles 4.7 million interactions each month in retail banking. Wait times fell dramatically by 85%. The time needed to sign up a new customer dropped from seven days to 36 hours. Fraud attempts decreased by 41%. The multimodal check system recognizes fake identities faster than people do.

Manufacturing and Utilities

Multimodal conversational AI systems work well here. A technician points a phone at a broken machine part. He describes the problem briefly. He immediately receives the diagnosis and repair steps shown on the screen. The system uses visual checks and vibration analysis. It also uses thermal patterns. It finds problems the human eye cannot see. Technicians do not need to type with dirty hands.

Siemens used multimodal conversational AI for maintenance across its factories last year. This was a practical fix, not just a fancy technology. Unplanned machine downtime fell by 43%. The average repair time decreased from 4.2 hours to 1.8 hours. An unexpected benefit was fewer safety incidents. These incidents dropped by 28%. Technicians spent less time in dangerous areas troubleshooting. The value gained was higher than the initial cost calculations.

Boston Consulting Group (BCG) – AI Agents Open the Golden Era of Customer Experience (Jan 13, 2025)

For unified CX: The agent must cross channels and touchpoints (hardware, mobile, in-store, voice) rather than being isolated—a hallmark of multimodal conversational AI.

How to Build Conversational AI That Works

Teams often keep data in separate systems that do not share information. Getting AI to use images, text, and voice means fixing this first. If you do not fix the data, everything else fails. Multimodal conversational AI depends on it.

Data Integration Stops You from Guessing

Support systems have one customer record. Sales has another. Finance keeps a third. If you feed this contradictory data to a system, it learns the contradictions. Nobody wants to integrate data. It is slow, and it costs money. Company leaders do not get excited about this work.

If you skip integration, your multimodal conversational AI confidently makes wrong predictions. It works fast, but it works wrong. Integration forces you to fix these contradictions. Which system holds the truth about a customer? You must decide. Then you must enforce that decision. This is the true challenge, not the technology itself. If you skip this step, everything else fails. Models trained on false data produce false results faster.
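
One way to enforce a source-of-truth decision is a per-field priority list. This sketch assumes three systems named `crm`, `support`, and `finance`. The field names and the priority order are hypothetical and would come from your own decision about which system holds the truth.

```python
# Assumed rule: for each field, systems listed first win (hypothetical names).
SOURCE_OF_TRUTH = {
    "email": ["crm", "support", "finance"],
    "billing_address": ["finance", "crm", "support"],
}


def resolve(records):
    """Build one customer record and list the fields where systems disagreed."""
    merged, conflicts = {}, []
    for field, priority in SOURCE_OF_TRUTH.items():
        values = {
            src: records[src][field]
            for src in priority
            if records.get(src, {}).get(field) is not None
        }
        if len(set(values.values())) > 1:
            conflicts.append(field)
        # The first system in the priority list that has a value wins.
        merged[field] = next((values[s] for s in priority if s in values), None)
    return merged, conflicts


records = {
    "crm": {"email": "a@example.com", "billing_address": "1 Old St"},
    "support": {"email": "a.old@example.com"},
    "finance": {"billing_address": "2 New Rd"},
}
merged, conflicts = resolve(records)
```

The `conflicts` list is as useful as the merged record. It shows where the systems disagree, which is the cleanup work that has to happen before training.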

Machine Learning and Analytics Need Each Other

Analytics show trends. Machine learning takes action. Neither tool works alone in a live system. You must measure the right things. Do not measure internal tasks. Measure wait times, customer loss, and how often issues need an expert. Pick one thing. Measure it. Connect it to what customers do. Train models on real evidence. You will get better results.
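
As a small illustration of measuring outcomes instead of activity, the sketch here computes average wait time and escalation rate from contact records. The record fields and the sample values are made up for the example.

```python
from statistics import mean

# Hypothetical contact log: one row per customer contact.
contacts = [
    {"wait_min": 2.0, "escalated": False},
    {"wait_min": 6.0, "escalated": True},
    {"wait_min": 4.0, "escalated": False},
    {"wait_min": 8.0, "escalated": True},
]


def outcome_metrics(rows):
    """Summarize customer-facing outcomes rather than internal activity."""
    return {
        "avg_wait_min": mean(r["wait_min"] for r in rows),
        "escalation_rate": sum(r["escalated"] for r in rows) / len(rows),
    }


metrics = outcome_metrics(contacts)
```

Tracking one of these numbers before and after a model change ties the training work to something the customer actually experiences.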

Teams often measure activity instead of real results. Their multimodal conversational AI then fails. They blame the computer program. The actual problem was with their data long before the training started.

Do Not Let Your Data Be the Villain

Your customer experience system is only as smart as the data it uses. Wrong information leads to bad outcomes for multimodal conversational AI. The following steps are important:

- Check the inputs. Block bad data before it enters the system. Check for the right format, completeness, and duplicates right away.

- Encrypt the data. Customer data must be encrypted in multimodal conversational AI pipelines.

- Manage access well. Use role-based permissions. Not everyone needs access to all data. Use audit logs to track who sees what.

- Include legal rules. Use rules for privacy laws like GDPR and CCPA when needed. Manage customer consent automatically. Apply data deletion rules automatically.

- Clean the data. Remove duplicate files regularly. Delete old records. Check that data matches across systems.

- Plan for breaches. Assume a breach will happen. Put detection systems and containment plans in place. Prepare procedures for notifying customers now.
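
The first step in the list, checking inputs, might look like the sketch here. The schema (`message_id`, `customer_id`, `email`) is an assumption, and the email pattern is deliberately loose. It illustrates the format, completeness, and duplicate checks, not a full validator.

```python
import re

REQUIRED = ("message_id", "customer_id", "email")  # assumed schema
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # loose format check


def validate(records):
    """Split records into accepted rows and (row, reason) rejections."""
    accepted, rejected, seen = [], [], set()
    for rec in records:
        missing = [f for f in REQUIRED if not rec.get(f)]
        if missing:
            rejected.append((rec, "missing: " + ", ".join(missing)))
        elif not EMAIL_RE.match(rec["email"]):
            rejected.append((rec, "bad email format"))
        elif rec["message_id"] in seen:
            rejected.append((rec, "duplicate"))
        else:
            seen.add(rec["message_id"])
            accepted.append(rec)
    return accepted, rejected


records = [
    {"message_id": "m1", "customer_id": "c1", "email": "a@example.com"},
    {"message_id": "m2", "customer_id": "c2", "email": ""},
    {"message_id": "m3", "customer_id": "c3", "email": "not-an-email"},
    {"message_id": "m1", "customer_id": "c1", "email": "a@example.com"},
]
accepted, rejected = validate(records)
reasons = [reason for _, reason in rejected]
```

Keeping the rejection reason next to each rejected row makes it possible to report bad data back to the system that produced it.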

Customers trust you with their data. One breach, one misuse, or one fine will destroy that trust. Unlike technology, trust cannot be rebuilt quickly.

Forbes – “The AI Customer Experience Revolution: A New Era of Human-Centered Intelligence” (Aug 6, 2025)

AI in CX is shifting from automation to emotionally aware interaction, with multimodal systems now able to detect sentiment and adjust response modality.

What Stops Your Conversational AI?

Building multimodal conversational AI seems perfect in theory. In practice, three problems appear fast. If you ignore them, they will weaken the whole system.

Your Data Lives Separately

Companies store chat records in one place. They keep call recordings in another. They store email talks somewhere else. Systems cannot learn patterns across different channels when the data is separate. Start by getting rid of these separate storage areas. Put input data into one central layer. The multimodal conversational AI models can then see the complete customer history.
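
A central layer can start as something very simple: every channel is converted to one common record shape and sorted by time per customer. The channel exports and field names in this sketch are hypothetical.

```python
from collections import defaultdict

# Hypothetical per-channel exports; field names are assumptions.
chat = [{"customer": "c1", "ts": 3, "text": "Where is my order?"}]
calls = [{"customer": "c1", "ts": 1, "transcript": "I need to change my address."}]
emails = [{"customer": "c1", "ts": 2, "body": "Please confirm the new address."}]

# Which field holds the message content in each channel.
CONTENT_FIELD = {"chat": "text", "voice": "transcript", "email": "body"}


def unify(**channels):
    """Merge per-channel records into one time-ordered history per customer."""
    history = defaultdict(list)
    for channel, rows in channels.items():
        for row in rows:
            history[row["customer"]].append({
                "ts": row["ts"],
                "channel": channel,
                "content": row[CONTENT_FIELD[channel]],
            })
    for events in history.values():
        events.sort(key=lambda e: e["ts"])
    return dict(history)


timeline = unify(chat=chat, voice=calls, email=emails)
```

With the three sources merged, the order question in chat can be read in the context of the address change that came earlier by phone and email.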

The Major Challenges

Multimodal conversational AI seems like the best possible tool for customer service. Voice, text, and visual data all work together. When you start building it, you find messy challenges. These challenges include disorganized data, models that favor certain groups, and people who do not know their new job role.

DATAFOREST can help you work through these challenges; arrange a call to get started.

Can AI Improve Customer Experience, or Just Fix Problems?

Multimodal conversational AI promises a service that is faster and costs less. This technology also shifts complexity to a different place.

AI That Sees, Speaks, and Understands

Voice recognition works very well now. Most customers will not notice they are speaking to a computer. Visual models now analyze documents. They verify customer identity. They read damaged labels without a person helping. Natural language processing handles unclear language better than old rule-based systems. This means fewer messages that say, "I did not understand you."

New Ways of Working

Multimodal conversational AI uses text, voice, and image processing together in one interaction. This reduces the number of times a customer switches channels. It shortens the time needed to fix the problem. Predictive routing checks the conversation context. Then it assigns the right employee to the customer. Tone detection finds frustration early in the call, but it often misreads sarcasm and cultural differences. Vendors rarely admit this fact.
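
Routing with a human handoff can be sketched as a pair of threshold checks. The queue names, the thresholds, and the `frustration` score in this example are assumptions. Because tone detection can be wrong, the sketch hands off to a person whenever the signals look risky rather than trusting the score.

```python
# Assumed mapping from detected intent to a handling queue (hypothetical names).
QUEUES = {
    "refund": "billing_bot",
    "tracking": "shipping_bot",
    "password": "account_bot",
}


def route(intent, intent_confidence, frustration,
          min_confidence=0.7, max_frustration=0.6):
    """Pick a queue, or hand off to a person when the signals are risky."""
    if frustration >= max_frustration:
        return "human_agent"  # upset customer: skip automation
    if intent not in QUEUES or intent_confidence < min_confidence:
        return "human_agent"  # unsure what the customer wants
    return QUEUES[intent]


calm_refund = route("refund", intent_confidence=0.9, frustration=0.2)
angry_refund = route("refund", intent_confidence=0.9, frustration=0.8)
```

The thresholds are the part worth tuning against real outcomes, since a cutoff set too loosely sends frustrated customers back to a bot.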

Creating a Unified Customer Experience

Connecting all the systems remains the hardest task. Multimodal conversational AI tools work well by themselves. They struggle when old systems do not share data correctly. A unified experience depends less on what the AI can do. It depends on whether companies rebuild their technical structure to support multimodal conversational AI. The technology exists. The desire of organizations to drop old systems and retrain staff is often missing.

DATAFOREST Improves Customer Service with AI

DATAFOREST builds chatbots and virtual assistants powered by multimodal conversational AI that work all day, every day. These tools lower the amount of work for your support team. They reduce how long customers must wait for a response. Our bots do not feel robotic because the connection to your systems is very deep.

We connect multimodal conversational AI to your current databases. This includes product lists, past chat records, and user details. The AI gets the context before it replies. This means fewer standard answers. The replies reflect past talks between the customer and your company. This is a correctly built data structure.

The team checks for patterns in the conversation records. They find common trends. You start seeing what customers will ask before they ask it. You can offer service before a problem starts. This results in more sales and fewer firefighting reactions.

Automating the common talks with multimodal conversational AI lets people focus on important issues and unusual cases. Machines handle a large number of requests. People handle the difficult problems.

Please fill out the form if this business change makes sense for your company.

Additional Questions on Conversational AI In Customer Experience

How can multimodal conversational AI improve cross-department collaboration within an enterprise?

It removes barriers between teams. It shows voice, text, and visuals in one system. Marketing sees the data that support collects. Product knows what sales promised. All departments use the same facts. They stop guessing.

What are the cost implications of adopting multimodal conversational AI compared to traditional automation tools?

The starting cost is higher. It returns its value quickly. Teams spend less time on manual work. Scaling becomes easy. This creates the true savings.

How can enterprises measure the success of multimodal conversational AI initiatives beyond customer satisfaction metrics?

Measure specific changes, not just feelings. Focus on accuracy and time to finish tasks. Check consistency across platforms. See how quickly teams work.

Can multimodal conversational AI connect to ERP or CRM systems? Does this require a full infrastructure change?

Yes, it connects. APIs move data between systems. Middleware links them. You do not rebuild your stack. You make it perform better.

How does multimodal conversational AI contribute to data-driven decision-making at the executive level?

It clarifies confusing information. Every customer action adds detail to the full picture. Leaders see trends early. They find opportunities first.

How can multimodal conversational AI be used to personalize B2B customer relationships, not just B2C interactions?

It remembers the full relationship history. This includes old projects and tone preferences. This knowledge lets the AI respond like someone who knows the client well.
