The Strategic Imperative for Scalability

In today's executive suites, the conversation around Artificial Intelligence has shifted from "if" to "how fast." The allure of AI—from hyper-personalized customer experiences in e-commerce to predictive maintenance in utilities—is potent. This is not just perception; the data confirms it. A 2022 McKinsey report highlighted a glaring gap: while AI adoption climbs, most companies can't break their models out of the lab to create enterprise-wide impact. The algorithms aren't the culprits. The real drag, the silent growth killer, is the very foundation of data and infrastructure these models depend on. True scalable AI for business isn't born from a clever model; it's engineered from a robust, efficient, and AI-ready data infrastructure.

Why Scalable AI is Crucial for Business Growth

Scalability is the bridge between a successful AI experiment and a transformative business capability. An AI solution that works for 1,000 data points but collapses under a million is a failed investment. For modern enterprises, scaling AI is synonymous with competing effectively. This capability is what separates market leaders from the pack, letting them pivot into new markets, launch products, and streamline operations at a pace competitors can't touch. Without that foundation, you're stuck in "pilot purgatory"—forever watching the future unfold instead of actively shaping it.

The Role of Efficient Data Management in AI Success

There's a simple, brutal truth in our field: AI is only as good as the data it's fed. The old 'garbage in, garbage out' rule isn't just a saying; it's a fundamental principle in machine learning. You can own the world's most sophisticated neural network, but it becomes a useless black box the moment it consumes a diet of inaccurate, siloed, or inaccessible information. Treating data management as a mere preliminary step is a critical error. It's the very bedrock of an AI strategy built to last and scale. When your data flows cleanly, models train faster, their predictions sharpen, and your leadership team gains the confidence to use those insights for high-stakes decisions.

Diagnosing the Problem: Data Optimization and Infrastructure Challenges

When AI investments don't yield expected returns, the issues almost always trace back to a flawed data architecture and foundational cracks in the data ecosystem. Pinpointing these symptoms is the first move toward building a truly scalable AI solution. If any of these sound familiar, your data management is likely holding back your AI ambitions:

- Data Silos: Information lies locked away in disconnected departments and legacy systems, making a unified business view impossible. It's a pervasive issue we regularly address for clients across sectors, from finance to healthcare.

- Poor Data Quality: When data is messy, inconsistent, or just plain wrong, it poisons your models and erodes any trust in the insights they produce. Gartner research has suggested the average financial hit from poor data quality is a staggering $9.7 million per year.

- Lack of Real-Time Capabilities: Relying on dated batch processing means missing immediate opportunities. Modern AI requires a flow of real-time data to power intelligent actions.

- Complex Data Integration: Manual and brittle integration processes starve AI development teams of the fuel they need. This is why services like our SaaS data integration are critical.
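To make the data quality symptom above more concrete, here is a minimal sketch of an automated check that counts missing values and duplicate keys before data reaches a model. The function name `quality_report`, the field names, and the sample records are all hypothetical, chosen only for illustration. A production check would typically run inside your pipeline tooling rather than as a standalone script.

```python
from collections import Counter


def quality_report(records, required_fields, key_field):
    """Summarize missing values and duplicate keys in a list of dict records."""
    missing = Counter()
    for row in records:
        for field in required_fields:
            value = row.get(field)
            # Treat absent, None, and empty-string values as missing.
            if value is None or value == "":
                missing[field] += 1

    # A key that appears more than once signals duplicated records.
    key_counts = Counter(row.get(key_field) for row in records)
    duplicates = sorted(k for k, n in key_counts.items() if k is not None and n > 1)

    return {
        "rows": len(records),
        "missing": dict(missing),
        "duplicate_keys": duplicates,
    }


# Hypothetical sample data, not taken from any real system.
customers = [
    {"customer_id": 1, "email": "a@example.com", "region": "EU"},
    {"customer_id": 2, "email": "", "region": "US"},
    {"customer_id": 2, "email": "b@example.com", "region": None},
]

report = quality_report(customers, ["email", "region"], "customer_id")
print(report)
```

A report like this can gate a training run: if missing or duplicate counts exceed an agreed threshold, the pipeline stops instead of feeding questionable data to the model.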

Challenges in Scaling AI Solutions

Even with clean data, the infrastructure itself can be a roadblock. "Pilot purgatory" is often a direct result of an infrastructure not designed for production-level demands.

- Compute Bottlenecks: Training complex models requires an immense and reliable infrastructure for machine learning. An on-premises setup may not have the elasticity for larger datasets or high-volume inference requests.

- Rigid Architecture: Monolithic systems make it incredibly difficult to integrate new AI services without risking system-wide failure.

- Security and Compliance at Scale: As AI systems touch more data, ensuring robust security and regulatory compliance becomes exponentially more complex.

- Inefficient MLOps: A lack of automated processes for deploying, monitoring, and retraining models (MLOps) turns a dynamic AI system into a static one.
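The MLOps point above, automated monitoring that triggers retraining, can be sketched as a simple rule: compare recent live accuracy with the accuracy measured at deployment and flag the model when it degrades. This is an illustrative assumption, not a prescribed method; the function name `should_retrain`, the tolerance, and the minimum sample size are hypothetical defaults.

```python
def should_retrain(baseline_accuracy, recent_outcomes, tolerance=0.05, min_samples=50):
    """Return True when recent accuracy falls more than `tolerance` below baseline.

    `recent_outcomes` is a sequence of booleans: True where the model's
    prediction turned out to be correct, False otherwise.
    """
    # Too few observations: do not act on noise.
    if len(recent_outcomes) < min_samples:
        return False
    recent_accuracy = sum(recent_outcomes) / len(recent_outcomes)
    return recent_accuracy < baseline_accuracy - tolerance


# Hypothetical monitoring windows for a model deployed at 90% accuracy.
degraded = [True] * 80 + [False] * 20   # 80% correct
healthy = [True] * 88 + [False] * 12    # 88% correct

print(should_retrain(0.90, degraded))
print(should_retrain(0.90, healthy))
```

In practice a check like this would run on a schedule and open a retraining job automatically, which is what keeps a deployed model from quietly going stale.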

The Cost of Inefficient Data Management

The fallout from a shaky data foundation extends far beyond frustrating your data science teams. It shows up in black and white on the balance sheet.

How Poor Data Management Impacts Business Efficiency

Think of an inefficient data infrastructure as a hidden tax on every single data-driven action in your company. Your analysts burn the clock hunting for and prepping data instead of finding insights. Model training that should take days drags on for weeks. Your entire organization is running on stale information. This operational friction leads to wasted resources, inflated project timelines, and an inability to operate with agility. Our work on infrastructure cost optimization often reveals millions in these hidden inefficiencies.

The Hidden Costs of Slow Decision-Making and Missed Opportunities

Think of it this way: while your competitor is making moves based on what's happening right now, you're steering the ship using last quarter's map. That's not a gap; it's a chasm. Slow decisions, born from a weak data backbone, are how market leaders become footnotes.

Solution Strategies: Building a Robust Data Infrastructure for AI

Getting past these hurdles demands a fundamental shift in thinking: you must treat your data infrastructure for AI as a core business product, not just an IT line item. That means embracing modern tools and architectures built for agility, velocity, and true big data and AI scalability.

Key Technologies for Scalable Data Infrastructure

Forget looking for a single silver-bullet tool to solve your AI data problems. A truly AI-ready data infrastructure is more like a well-drilled team than a solo superstar—it's an ecosystem of technologies working in concert. At the heart of that team, you'll almost always find:

- Cloud Platforms: The major hyperscalers like AWS, Google Cloud, and Azure are the default for a reason. They offer elastic compute, storage, and managed AI services that create the ideal cloud infrastructure for AI. We've explored the strengths of various top cloud providers for AI.

- Data Lakehouses: Platforms like Databricks and Snowflake combine the scale of data lakes with the performance of data warehouses, unifying BI and AI workloads.

- Stream Processing: Tools like Apache Kafka are the central nervous system for real-time data, enabling ingestion and analysis on the fly to power immediate, intelligent decisions.

- Containerization & Orchestration: With Docker and Kubernetes, AI applications get packaged into portable containers, which helps them run consistently everywhere, from a developer's laptop to a massive production cluster.

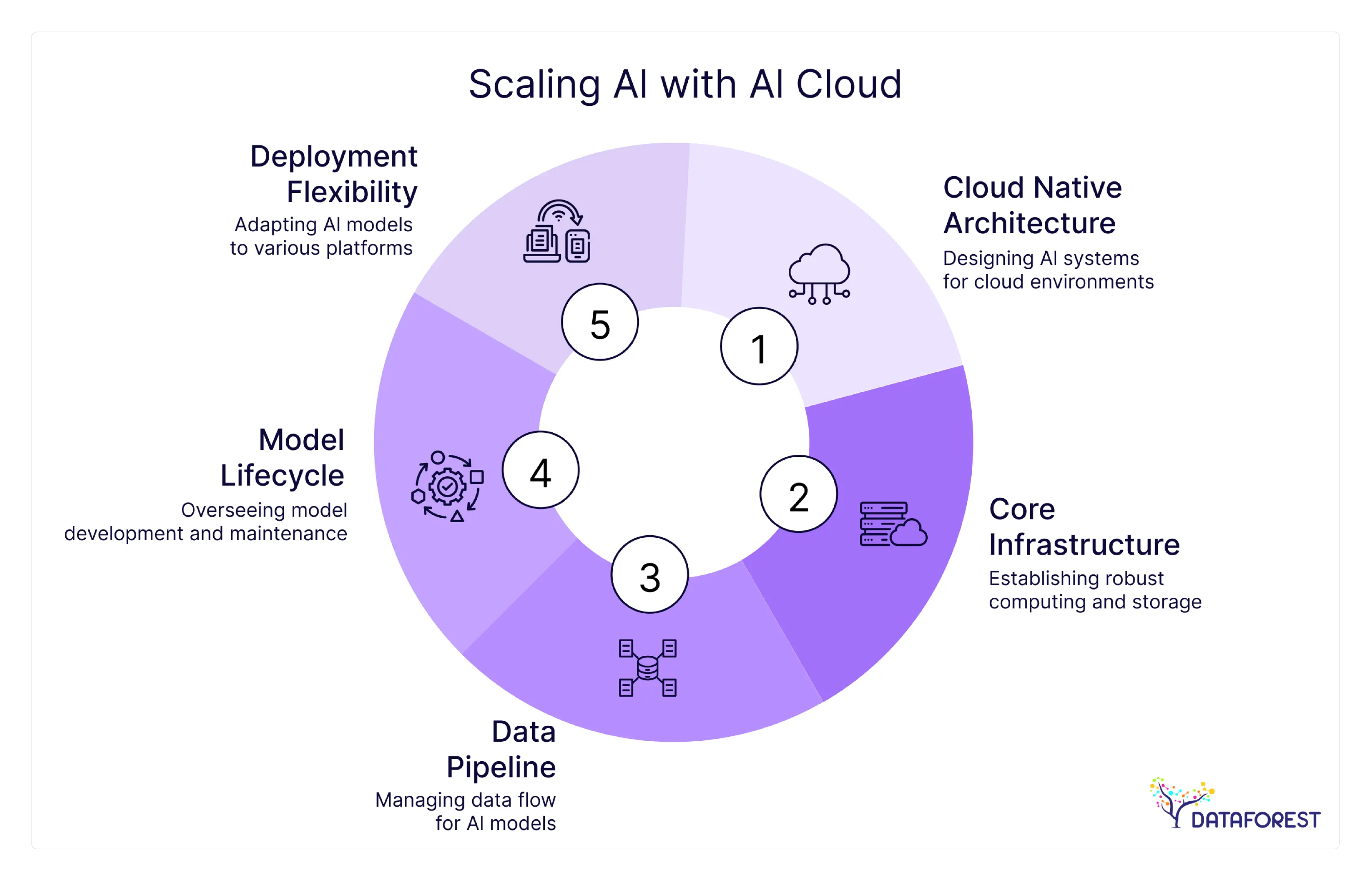

A well-designed cloud architecture integrates these components into a seamless engine for AI.
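As a rough illustration of the stream processing idea listed above, the sketch below counts events in fixed, non-overlapping time windows and emits each window as soon as it closes. It uses plain Python over an in-memory list standing in for a stream; it does not use Kafka or any streaming library, and the function name `tumbling_window_counts` and the sample events are hypothetical. It also assumes events arrive in timestamp order.

```python
def tumbling_window_counts(events, window_seconds):
    """Yield (window_start, counts) each time a fixed-size window closes.

    `events` is an iterable of (timestamp_seconds, event_type) pairs,
    assumed to arrive in timestamp order.
    """
    current_start = None
    counts = {}
    for timestamp, event_type in events:
        window_start = (timestamp // window_seconds) * window_seconds
        # A new window has begun: emit the finished one and start fresh.
        if current_start is not None and window_start != current_start:
            yield current_start, counts
            counts = {}
        current_start = window_start
        counts[event_type] = counts.get(event_type, 0) + 1
    # Emit the final, still-open window once the input ends.
    if current_start is not None:
        yield current_start, counts


# Hypothetical clickstream: (seconds since start, event type).
events = [(0, "view"), (12, "view"), (45, "purchase"), (61, "view"), (119, "purchase")]

for window_start, counts in tumbling_window_counts(events, 60):
    print(window_start, counts)
```

The contrast with batch processing is that each result is available as soon as its window ends, rather than after a nightly job.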

How Microservices Support Seamless AI Integration

A microservices architecture is a game-changer for scalable AI. Instead of one giant, tangled application, functions are broken into small, independent services. For an AI system, this means your recommendation engine can be retrained and redeployed without anyone in the billing department even noticing. That freedom to change one piece without breaking another is what unlocks real speed, allowing teams to ship new AI capabilities without getting bogged down in bureaucracy. This belief is at the core of our DevOps-as-a-Service offering.
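The recommendation-versus-billing example can be sketched in a few lines. The classes below are hypothetical stand-ins for separately deployed services (in reality they would communicate over a network API, not live in one process); the point is only that the storefront depends on a narrow interface, so the recommender can be replaced while billing stays untouched.

```python
from typing import List, Protocol


class Recommender(Protocol):
    """The narrow contract the storefront depends on."""

    def recommend(self, customer_id: int) -> List[str]: ...


class PopularityRecommender:
    """Hypothetical first model version: same items for everyone."""

    def recommend(self, customer_id: int) -> List[str]:
        return ["sku-1", "sku-2"]


class PersonalizedRecommender:
    """Hypothetical retrained version: output varies by customer."""

    def recommend(self, customer_id: int) -> List[str]:
        return [f"sku-{customer_id % 3}", "sku-1"]


class BillingService:
    """Independent service with no knowledge of recommendations."""

    def invoice_total(self, prices: List[float]) -> float:
        return round(sum(prices), 2)


class Storefront:
    def __init__(self, recommender: Recommender, billing: BillingService) -> None:
        self.recommender = recommender
        self.billing = billing

    def swap_recommender(self, new_recommender: Recommender) -> None:
        # Deploying a new model version touches only this dependency.
        self.recommender = new_recommender


store = Storefront(PopularityRecommender(), BillingService())
total_before = store.billing.invoice_total([19.99, 5.01])

store.swap_recommender(PersonalizedRecommender())
total_after = store.billing.invoice_total([19.99, 5.01])

print(store.recommender.recommend(7), total_before, total_after)
```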

Case Study: AI-Powered Solutions in Action

Let's move from theory to reality. We worked with a major e-commerce platform that was flying blind. Their data was a mess of disconnected silos, giving them no real insight into what their customers were actually doing. Our team at DATAFOREST partnered with them to build a scalable AI platform.

The initiative included a cloud-based data lakehouse solution to consolidate data streams, construction of machine learning models for forecasting and personalization, and a custom web platform to deliver insights. This led to a 40% increase in forecast accuracy and a 15% lift in marketing ROI. This case, detailed in our AI-Powered Web Platform for E-commerce Decisions study, proves how a solid data foundation sparks real, transformative business outcomes.

Key Benefits of Optimized Data Infrastructure for AI

Putting in the work to build a modern, efficient data infrastructure isn't just an IT project; it's an investment that pays for itself over and over.

Faster AI Model Training and Decision-Making

When data is clean and pipelines are automated, the cycle time from idea to deployed model shrinks dramatically. Data scientists can experiment more rapidly, and models can be retrained continuously. This speed translates directly into business agility, allowing the organization to respond faster to market changes. Our streamlined data analytics projects often cut insight delivery time by over 50%.

Cost Reduction and Improved Scalability

A modern, cloud-native AI infrastructure can be markedly more cost-effective than fixed capacity. The cloud's pay-as-you-go model, combined with auto-scaling resources, means you stop paying for idle capacity. Automating your data pipelines and MLOps also does more than save time: it frees your most talented people from the drudgery of system maintenance so they can do what they do best, which is innovate.
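The idle-capacity argument can be checked with simple arithmetic. The sketch below compares provisioning for peak demand around the clock with paying only for what each hour uses. All numbers are hypothetical, including the assumption that on-demand capacity costs 50% more per unit-hour than owned capacity; real pricing varies widely by provider and workload.

```python
def fixed_capacity_cost(hourly_demand, rate_per_unit_hour):
    """Cost of provisioning for the peak hour and paying for it every hour."""
    peak = max(hourly_demand)
    return peak * len(hourly_demand) * rate_per_unit_hour


def on_demand_cost(hourly_demand, rate_per_unit_hour):
    """Cost of paying only for the units actually used each hour."""
    return sum(hourly_demand) * rate_per_unit_hour


# Hypothetical day: 20 quiet hours at 2 units, 4 peak hours at 10 units.
spiky_day = [2] * 20 + [10] * 4
# Hypothetical day with constant load at 10 units.
steady_day = [10] * 24

FIXED_RATE = 1.0      # assumed cost per unit-hour of owned capacity
ON_DEMAND_RATE = 1.5  # assumed premium for elastic capacity

print(fixed_capacity_cost(spiky_day, FIXED_RATE), on_demand_cost(spiky_day, ON_DEMAND_RATE))
print(fixed_capacity_cost(steady_day, FIXED_RATE), on_demand_cost(steady_day, ON_DEMAND_RATE))
```

Under these assumptions the spiky workload costs half as much on demand, while the perfectly steady workload is cheaper on fixed capacity. The savings come from variability in demand, which is why AI workloads with bursty training and inference patterns tend to benefit most.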

How to Get Started: Implementing Scalable AI Solutions

Embarking on the journey to scalable AI is a serious strategic move that demands a clear, deliberate plan—not a disruptive, one-time overhaul, but a phased process that builds momentum.

A Phased Approach to AI Implementation

We walk our clients through a structured journey that de-risks the transformation:

- Assessment & Strategy: Get an honest look at your current data maturity. Pinpoint a high-value business problem that AI can solve and draw a clear roadmap to get there.

- Foundation & Pilot: Build the foundational data platform for a specific, high-impact use case to prove its value.

- Scale & Industrialize: Leverage the patterns from the pilot to scale the solution across the organization.

- Optimize & Innovate: Continuously monitor performance, optimize costs, and explore new AI capabilities.

Selecting the Right Technology for Seamless Integration

Picking the right tech—and more importantly, the right partner—is make-or-break. You need a partner who gets the full picture, with deep expertise across data engineering, cloud architecture, and machine learning. At DATAFOREST, our team blends high-level strategy with the hands-on engineering chops needed for building scalable AI systems that last.

Forging Your AI-Powered Future

The conversation around AI is no longer about parlor tricks or isolated experiments. The real competitive frontier is in the ability to industrialize and scale AI across the entire business. This is not a technical challenge for IT, but a C-level strategic imperative.

Building a robust, efficient data infrastructure is the single most important investment you can make in your company's future. It determines whether your AI initiatives will become a true engine of growth or a series of costly projects. The time to architect your future is now.

Ready to build the foundation for your scalable AI vision? Contact us to start the conversation.

Frequently Asked Questions

Why doesn't our current AI scale as expected despite successful pilots?

This is the classic "pilot purgatory" scenario. A pilot succeeds in a controlled environment. Scaling fails when the infrastructure cannot handle the production-level Volume, Velocity, and Variety of data. The architecture that supports a pilot is fundamentally different from the robust, elastic, and automated AI infrastructure required for enterprise production.

How can I evaluate whether my infrastructure is AI-ready?

An AI-ready infrastructure assessment evaluates several key areas: data accessibility and unification, scalability and elasticity of resources, automation of DataOps and MLOps pipelines, and robust governance and security. Our performance troubleshooting services can provide a deep analysis of your system's readiness.

How do DataOps and MLOps improve AI scalability in large organizations?

DataOps automates data pipelines, ensuring reliable and timely data for AI models. MLOps automates the machine learning lifecycle (training, deployment, monitoring). Together, they create a highly efficient "AI factory," allowing organizations to rapidly manage hundreds of models at scale, a topic we explore in our blog on scaling AI from the inside out.

Can I build scalable AI on-premises, or is cloud infrastructure a must?

While technically possible, building scalable AI on-premises is difficult and expensive due to the massive upfront hardware investment and the lack of cloud-style elasticity. Cloud infrastructure has become the standard for building scalable AI systems because it provides near-unlimited scale on demand and a consumption-based cost model. For most organizations, a cloud migration strategy is the most effective path.

What are the top infrastructure bottlenecks that kill AI speed and accuracy?

The top three bottlenecks are:

- Storage I/O: Traditional storage can't feed data to GPUs fast enough, wasting expensive compute cycles and slowing model training.

- Data Movement: Inefficiently moving large datasets between storage and compute clusters creates significant delays.

- Manual Processes: A lack of automation in data preparation and model deployment introduces human error and slows the entire AI lifecycle.

A streamlined big data cloud infrastructure eliminates these friction points.
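One common way to soften the storage I/O bottleneck is to load the next batches in the background while the current one is being processed, so compute is not left waiting on reads. The sketch below shows the idea with Python's standard library only; the `prefetch` helper is a hypothetical illustration, and ML frameworks ship their own, more capable data loaders.

```python
import queue
import threading


def prefetch(batches, buffer_size=2):
    """Yield items from `batches`, loading up to `buffer_size` ahead in a background thread."""
    buffer = queue.Queue(maxsize=buffer_size)
    sentinel = object()  # marks the end of the input

    def producer():
        try:
            for batch in batches:
                buffer.put(batch)  # blocks while the buffer is full
        finally:
            # Always signal completion so the consumer cannot wait forever.
            buffer.put(sentinel)

    # Daemon thread: it will not keep the program alive if the consumer stops early.
    threading.Thread(target=producer, daemon=True).start()

    while True:
        item = buffer.get()
        if item is sentinel:
            return
        yield item


# Hypothetical stand-in for batches read from slow storage.
loaded = list(prefetch(iter(range(5))))
print(loaded)
```

Order is preserved because a single producer feeds a first-in, first-out queue. The buffer size bounds memory use while still letting reads overlap with computation.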
