Demystifying the AI PoC: What it Actually Means on Day 1

In today’s corporate world, not using AI is no longer an option. Leaders are under pressure to ‘have’ AI, yet large multi-million dollar initiatives often fail before they get off the ground. The AI PoC is the bridge between an ambitious concept and an efficient AI solution that runs at scale.

But what is an AI PoC? It is not a technical test, but an instrument for business validation. It is essentially a targeted look at feasibility that answers just one question, as rapidly and as cost-effectively as possible: Does this particular AI project provide quantifiable value for what makes our business unique?

A good PoC is a foundation for an AI strategy. It replaces “what if” with “we have validated,” de-risking your investment and building a compelling business case for AI adoption.

Why AI PoC is important for enterprise innovation & ROI

Emergence of AI Business Models

AI can now be used for more than automating back-office work. It is the basis for new types of business models, from personalized retail customer experiences to predictive maintenance in manufacturing. An AI PoC is a low-stakes on-ramp to these models: you can test a new revenue model without betting the farm.

Why PoC Is a Risk-Reduction Tool, Not Just a Pilot

A pilot is a small-scale implementation of a vetted product. A PoC is a targeted experiment to find out whether the core hypothesis is feasible. The objective of an AI PoC is rapid, cost-effective validation: if the idea is going to fail, it should "fail fast." It works as a risk assessment that tests your core assumptions (technical, data, and business) before you make a budgetary commitment, giving you a chance to quantify the potential return on investment (ROI), or to lose far less if the idea doesn’t work out.

Typical Pain Points AI PoC Can Address (Over-spend, Slow Ops, Manual Decision Making)

An AI/ML PoC has a clear target and quantifies a solution to one or more critical business challenges:

- Budget Optimization: It prevents you from wasting money on “AI for AI’s sake.”

- Slow Ops: It can demonstrate how workflow automation (e.g., invoice processing) helps to cut process times from days to minutes.

- Subjective Decision-Making: It validates the move from reactive 'gut-feel' decisions to proactive, data-driven ones.

When Your Company Actually Needs an AI PoC – And When It Doesn’t

Signs Your Organization is Ready for AI Integration

You are ready for a PoC if:

- You have a specific, high-value business problem (e.g., “We leak X% of customers due to churn”).

- You have an executive sponsor in the business unit, not just in IT.

- You have a clear understanding of where your data resides, even if it’s not perfect.

When It Doesn’t Make Sense to Do a PoC

Don't launch a PoC if:

- The solution is a commoditized product. You do not need a PoC for testing a standard spam filter. Just buy one.

- There is no well-defined business question to answer. “We need to 'use generative AI'” is not a plan; it’s a vague objective.

- You have zero data. When the data does not exist, or is legally inaccessible, a PoC is dead on arrival.

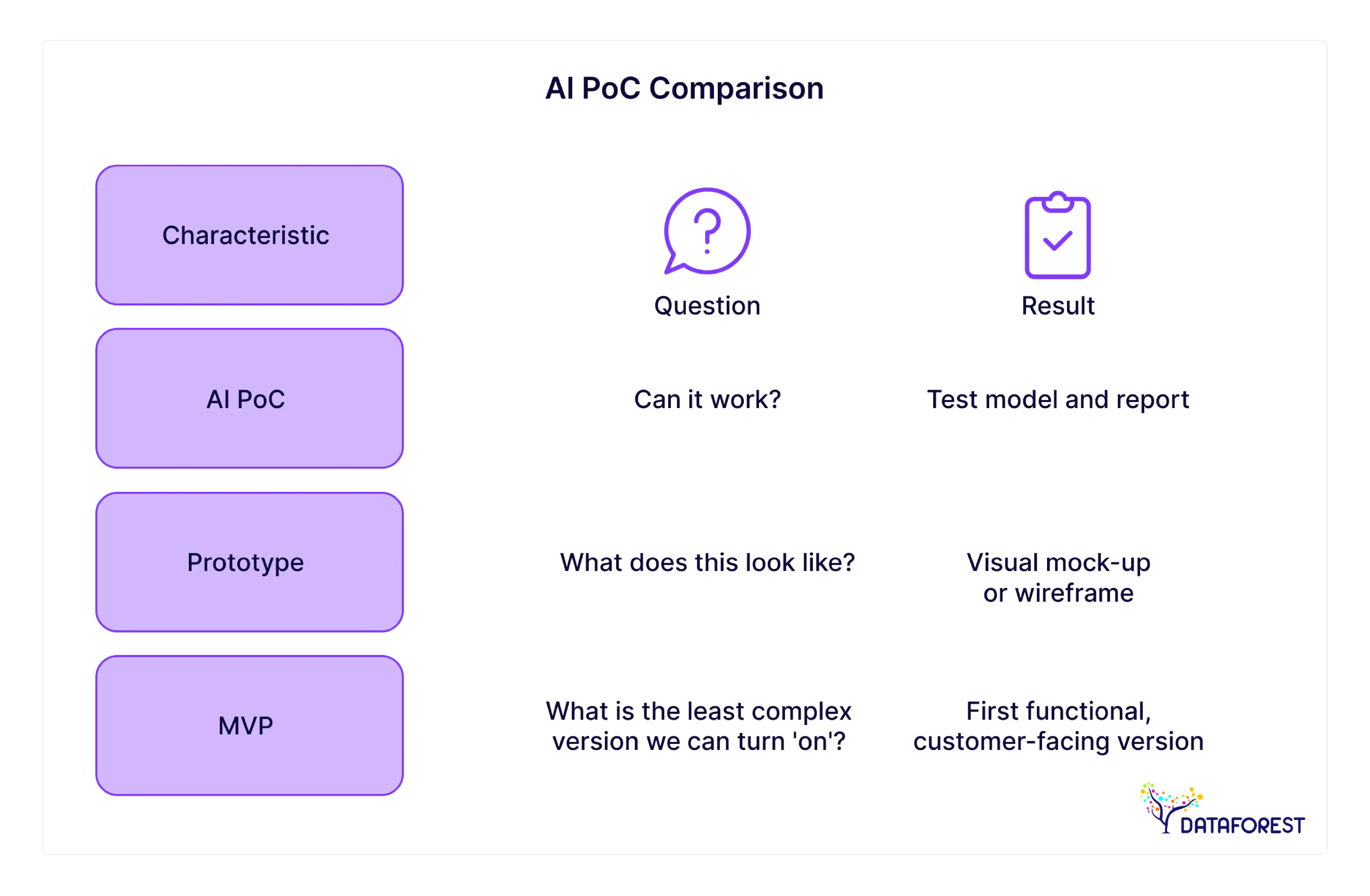

“Proof of Concept”, “Prototype” and “MVP” — Understanding the Terms for Stakeholders

Use this framework to ensure stakeholder alignment:

- PoC (Proof of Concept): “Can it work?” The result is a test model and a report.

- Prototype: "What does this look like?" The output is a visual mock-up or wireframe of the interface.

- MVP (Minimum Viable Product): “What is the least complex version we can turn ‘on’?” This is the first functional, customer-facing version. Always start with the PoC.

Key Players and Functions in a Successful AI PoC

Who Owns the Implementation: IT, Innovation Office, Business Unit?

It’s a collaborative effort, but it must be led by the business.

- Business Unit (Owner): Owns the problem and its metrics (for example, reduction in cost), works closely with domain experts, and will likely be a user of the solution.

- IT (Enabler): Controls infrastructure, data access and security. Makes sure the PoC can eventually be scaled.

- Innovation Office (Facilitator): Can act as a liaison between the business and IT and handle project management.

Why It Is Important to Align the CTO with Business Owners

Success hinges on this relationship. The business owner determines "what" (the goal) and "why" (the value). The CTO is responsible for “how” (the tech and data). If this alignment is missing from day 1, the project will either turn into an academic "science project" or a technical failure.

How to Work with a Strategic AI Partner Instead of Just Hiring Developers

You are not only hiring coders; you’re bringing in expertise. An experienced AI PoC service provider such as DATAFOREST brings a proven playbook. They have navigated the common pitfalls and can guide you from initial concept to a proven model in weeks, not months. This helps prevent common mistakes and speeds your AI roadmap.

Defining the Right Business Case and Success Metrics

Business Question, Not a Technology Experiment

Your PoC should be built to solve a business problem.

- Weak: "Can we use a large language model?"

- Strong: "Will a generative AI PoC reduce our customer support call-handling time by 30% by supplying agents with immediate summaries?"

Selecting KPIs that Show Real Value (Accuracy, Time to Decision, Cost Savings)

Your success validation metrics need to be tied to business value. Don't just measure "model accuracy." Favor those that are linked to cost efficiency or improved decision-making.

- Technical KPI: Model accuracy is 90%.

- Business KPI: At 90% accuracy, we can process 9 out of every 10 invoices automatically. This level of model performance reduces manual work by 200 hours monthly.
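The step from a technical KPI to a business KPI is simple arithmetic, and a rough sketch of it might look like the following. The function name and the inputs (`invoices_per_month`, `minutes_per_invoice`) are hypothetical placeholders, not figures from this article, and the sketch assumes the model's accuracy can be read directly as the share of invoices that need no manual handling.

```python
def monthly_hours_saved(invoices_per_month: int,
                        automation_rate: float,
                        minutes_per_invoice: float) -> float:
    """Estimate manual hours saved per month by automated invoice processing."""
    if not 0.0 <= automation_rate <= 1.0:
        raise ValueError("automation_rate must be between 0 and 1")
    # Invoices that no longer need a person, times the manual time each one took.
    automated_invoices = invoices_per_month * automation_rate
    return automated_invoices * minutes_per_invoice / 60.0


# Hypothetical inputs: replace with your own volumes and handling times.
hours = monthly_hours_saved(invoices_per_month=1500,
                            automation_rate=0.90,
                            minutes_per_invoice=8.0)
print(f"Estimated manual hours saved per month: {hours:.0f}")
```

Running the same calculation with your own invoice volume and handling time gives the number the business sponsor actually cares about.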

Example of a Weak vs. Strong PoC Hypothesis

Weak Hypothesis: “We want to use AI to improve demand forecasting.”

This is ambiguous and does not have a defined "done" state.

Strong Hypothesis: “We believe that by training an AI model on our internal sales data and external weather forecasts, we can predict demand for our top 50 SKUs with 15% greater accuracy than our current manual method. This will be confirmed by a 4-week test."

This is measurable and time-bound.
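One way to make a hypothesis like this testable is to agree in advance on how "15% greater accuracy" will be computed. The sketch below is an assumption, not a method described in this article: it reads the target as at least a 15% relative reduction in mean absolute percentage error (MAPE) compared with the manual forecast. The function names and the demand figures are made up for illustration.

```python
def mape(actual: list[float], forecast: list[float]) -> float:
    """Mean absolute percentage error; assumes no actual value is zero."""
    if len(actual) != len(forecast) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(a - f) / abs(a) for a, f in zip(actual, forecast)) / len(actual)


def meets_hypothesis(actual, manual_forecast, model_forecast,
                     required_improvement: float = 0.15) -> bool:
    """True if the model's MAPE is at least `required_improvement` lower,
    in relative terms, than the manual method's MAPE."""
    manual_error = mape(actual, manual_forecast)
    model_error = mape(actual, model_forecast)
    if manual_error == 0:
        return False  # the manual method is already perfect; nothing to beat
    improvement = (manual_error - model_error) / manual_error
    return improvement >= required_improvement


# Made-up weekly demand for one SKU over a 4-week test.
actual = [100.0, 120.0, 80.0, 110.0]
manual = [90.0, 132.0, 88.0, 99.0]    # each manual forecast is off by 10%
model = [95.0, 126.0, 84.0, 104.5]    # each model forecast is off by 5%
print(meets_hypothesis(actual, manual, model))
```

Whichever error metric you choose, fixing it before the test starts keeps the "done" state from shifting once results come in.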

Common Challenges and How to Overcome Them

Unrealistic Expectations from Business Teams

- Challenge: The business team wants a magical, 100% accurate solution.

- Mitigation: Set expectations early. Explain that AI is about probabilities and not certainties. We’re only trying to make a process better, not perfect.

Lack of Data Readiness or In-House Ownership

- Challenge: The project kicks off and you realize the data is unreliable, scattered in silos across disparate data sources, or no one knows who ‘owns’ it.

- Mitigation: Perform a 1-week Data Readiness Assessment prior to starting your PoC. This covers mapping the data collection process and an initial check of data quality. A good partner can assist with this. If you can't rely on the quality of your data, the PoC should probably start with a data engineering exercise.
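As an illustration of what an initial data quality check can look like, here is a minimal sketch that counts missing values per field and duplicate record keys in a small sample. The `readiness_report` function, the field names, and the sample rows are all hypothetical; a real assessment would cover far more (ownership, access rights, freshness, consistency across sources).

```python
from collections import Counter


def readiness_report(rows: list[dict], key_field: str) -> dict:
    """Summarize missing values per field and duplicate keys in a data sample."""
    total = len(rows)
    if total == 0:
        return {"rows": 0, "missing_share": {}, "duplicate_keys": []}
    fields = sorted({field for row in rows for field in row})
    # Share of rows where a field is absent, None, or an empty string.
    missing_share = {
        field: sum(1 for row in rows if row.get(field) in (None, "")) / total
        for field in fields
    }
    key_counts = Counter(row.get(key_field) for row in rows)
    duplicate_keys = sorted(
        key for key, count in key_counts.items() if count > 1 and key is not None
    )
    return {"rows": total, "missing_share": missing_share,
            "duplicate_keys": duplicate_keys}


# Made-up sample of invoice records.
sample = [
    {"invoice_id": "A1", "amount": 120.0, "vendor": "Acme"},
    {"invoice_id": "A2", "amount": None, "vendor": "Acme"},
    {"invoice_id": "A2", "amount": 75.5, "vendor": ""},
    {"invoice_id": "A3", "amount": 42.0, "vendor": "Globex"},
]
report = readiness_report(sample, key_field="invoice_id")
print(report)
```

Even a crude report like this gives the team something concrete to discuss when deciding whether the PoC can start or data engineering has to come first.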

Over-Engineering Instead of Rapid Prototyping

- Challenge: Your tech team takes 12 weeks to train the “perfect” machine learning model, missing the point of rapid prototyping.

- Mitigation: Time-box the PoC. Expect 6-8 weeks at the most. The aim is a “Go/No-Go” decision, not a final production-quality algorithm. A quick AI prototype is generally a more valuable asset than a complex, over-engineered AI algorithm.

How to Avoid “Innovation Theater”: PoCs That Never Scale

- Challenge: The PoC is a “success,” everyone celebrates, but the project gets abandoned on a server somewhere because there’s no purpose, plan or budget for the next thing.

- Mitigation: Plan “Phase 2” before you commence “Phase 1.” Secure an executive sponsor, a budget, and a clear path to production in case the PoC succeeds.

When the PoC Is Over: Now What?

Criteria for Greenlighting Full-Scale Deployment

The PoC concludes with a “Go/No-Go” decision after a final model evaluation.

- Go: The PoC achieved its business KPIs, the tech is feasible, and the projected ROI is strong.

- No-Go: The data was insufficient, or the model didn’t beat the existing process. This is also a success! By failing fast and cheaply, you spared the company a far larger investment in something that would not have worked.
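The criteria above can be written down as an explicit decision rule before the PoC starts, so the outcome is not argued after the fact. The sketch below is illustrative only: the `PocResult` fields, the simple ROI formula (net benefit divided by cost), and the `min_roi` threshold of 0.5 are assumptions, not values from this article.

```python
from dataclasses import dataclass


@dataclass
class PocResult:
    kpis_met: bool               # did the PoC hit its agreed business KPIs?
    technically_feasible: bool   # can the approach be built and run at scale?
    projected_annual_benefit: float
    projected_annual_cost: float


def projected_roi(result: PocResult) -> float:
    """Simple ROI: net benefit divided by cost."""
    if result.projected_annual_cost <= 0:
        raise ValueError("projected_annual_cost must be positive")
    net_benefit = result.projected_annual_benefit - result.projected_annual_cost
    return net_benefit / result.projected_annual_cost


def go_no_go(result: PocResult, min_roi: float = 0.5) -> str:
    """Return "Go" only when all three criteria hold; otherwise "No-Go"."""
    if (result.kpis_met and result.technically_feasible
            and projected_roi(result) >= min_roi):
        return "Go"
    return "No-Go"


# Hypothetical outcome of a PoC.
outcome = PocResult(kpis_met=True, technically_feasible=True,
                    projected_annual_benefit=300_000.0,
                    projected_annual_cost=150_000.0)
print(go_no_go(outcome))
```

The ROI threshold is a business choice; the point of the sketch is only that it is agreed on up front.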

Roadmap for Integrating PoC Insights into Existing Systems

A “Go” decision is the point where the project moves from data science to full-scale software and data engineering. This includes automated data pipeline optimization, deployment of the final AI application on scalable cloud infrastructure, and integration of the AI with your core systems (e.g. CRM or ERP). This is the beginning of the full AI implementation.

AI Adoption Governance and Change Management

This is the human side. You can’t just drop a new tool on your team. You need a plan for:

- Governance: Who checks the AI model for bias or drift?

- Change Management: How will you train your team and redesign the way they work to utilize these new AI-powered insights?

Examples of AI PoCs That Have Worked

Finance: Invoice Automation Reducing Processing Times by 60%

One DATAFOREST client in the finance sector automated work in its back office. A PoC demonstrated that an AI model, drawing on the pattern-recognition strengths LLMs now offer for unstructured data, could parse invoices. That result justified a full-scale solution, which cut manual labor by 60%. See the case study here.

Retail: Predicting Demand for Smarter Inventory Decisions

A forecasting PoC at a global retail client showed that an AI model could save millions. The scaled solution optimized inventory and reduced stockouts, saving $142M. See the case study here.

Logistics: AI That Optimizes Routing and Enriches Web Data

You can use a PoC to test whether AI optimizes delivery routes. By combining internal data with external web data (traffic, weather), we can build a PoC that demonstrates significant savings in fuel and time, justifying a full-scale deployment of an advanced planning system.

Selecting the Right AI Partner: The Must-Haves

When choosing a provider of AI PoC development services, don’t just evaluate technical skills. Look for a strategic partner.

- Do they ask "Why?" before "How?"

- Do they have a transparent, time-boxed PoC framework?

- Can they provide you with AI PoC examples that successfully scaled to production?

The right partner, like the DATAFOREST team, is an extension of your team, focused on delivering business value, not just lines of code.

The Path Forward: From Idea to Impact

An AI PoC is the most practical, low-risk, and effective way to begin your AI journey. It cuts through the hype and points your resources where they really count: delivering measurable business value. It’s more than a technical project; it is the cornerstone of your company’s future innovation strategy.

Ready to validate your next AI initiative and demonstrate its value?

Book a consultation with our AI strategists to scope your PoC.

Frequently Asked Questions (FAQ)

How long does an AI PoC take, and when can the leadership team start to see results?

A good AI PoC should be limited to 6–12 weeks. In a well-scoped project, the first “go/no-go” results can generally be delivered to leadership within the first 3-4 weeks.

What is the typical budget for an AI PoC, and what makes costs go up or down?

Budgets differ, but a PoC costs a fraction of a large-scale project. The main cost drivers are data complexity (how much cleaning and preparation is required?) and model complexity (is it a simple predictive model or a complex generative AI PoC?).

Can we run an AI PoC even when our data is unstructured or siloed across departments?

Yes. This is a strong use case for a PoC. The idea is to take a small sample of that messy, siloed data and show that you can derive value from it. DATAFOREST has a few case studies (like data parsing) that relate directly to this challenge.

Should an AI PoC be developed in-house vs. by a technology partner, and what return on investment advantage does one choice imply over the other?

An in-house team develops long-term skills, but it could be slower. An external partner (such as a specialized AI PoC development firm) comes with the knowledge and a proven process to accelerate the timeline and reduce execution risk. This speed-to-value translates into a much faster time to ROI for the PoC itself.

How can we avoid AI PoCs turning into innovation theater that doesn’t make it to production?

You prevent it by starting with a business stakeholder, not an IT-only project. You need an executive sponsor who “owns” the business problem and has the budget and intent to scale whatever solution is successful from the PoC.

Do we need an end-to-end data platform in place before starting an AI PoC, or can we build it in stages?

No, you do not. A proof of concept should run in a lightweight, sandboxed environment. It’s the success of the PoC that justifies the investment in a full data platform moving forward, not vice versa.

What are some other good AI proof of concept examples?

- Healthcare: AI to find patterns in patient data, as explored in AI in Healthcare.

- Sales: An AI voice agent for cold calling and pre-qualifying leads.

- Finance: A Custom Agentic AI for Financial Advisors that can automate portfolio summaries.
